TEQSA recently asked all higher education institutions to reflect “meaningfully on the impact of generative AI” on teaching, learning and assessment practices and to develop an institutional action plan. Developing this action plan has given us an extremely valuable opportunity to reflect both on our own journey with generative AI since 2022 and on the further steps needed over the next couple of years to ensure our students are prepared for a world where AI is increasingly part of everyday life and work. A link to our response to TEQSA’s request for information can be found at the bottom of this article.

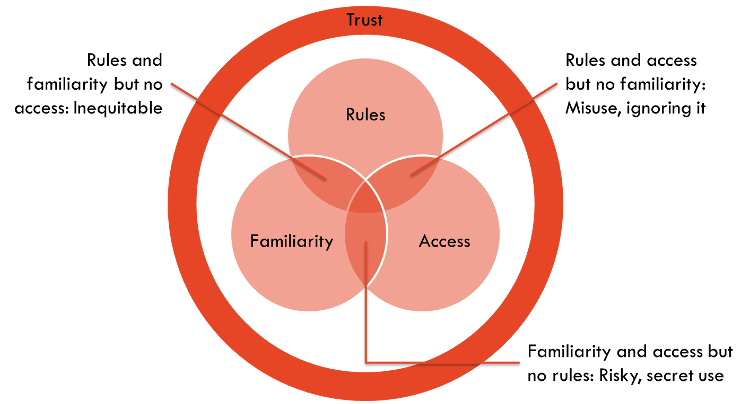

Our work at the University of Sydney in responding to the risks and opportunities presented by generative AI in higher education has centred on four main areas of action: establishing rules, providing access, building familiarity, and fostering trust. From the outset, we have responded to generative AI carefully but positively. Rather than implementing bans or attempting to reject or out-run the technology, we have chosen to have staff and students work together, under the direction of the University’s governance bodies, to discover and foster productive and responsible ways to engage with it.

Establishing rules

Governance

In early 2023, the AI in Education Working Group, comprising staff and student representatives from across the University, was formed to discuss the implications of generative AI and to share and review practices and policies across faculties. This group reported three times to both Academic Board and Senate on developments in the technologies, coursework assessment and integrity policy, and training. The University Executive then constituted the Generative AI Steering Committee to set strategic direction. It is co-chaired by the DVC (Education) and DVC (Research), with membership including General Counsel, the Operations Portfolio, and Information and Communications Technology (ICT). The committee is supported by a Generative AI Coordinating Group, including colleagues from Research, Education, ICT, the Library, student administration, operations, and General Counsel, which monitors and reviews the progress of generative AI adoption.

Guardrails

Our guardrails encourage the University community to learn and experiment with generative AI whilst ensuring that data, privacy, intellectual property, and other information are appropriately protected. The Research Portfolio has established guidelines on handling sensitive information, research integrity, research ethics, and how to use generative AI safely for research purposes. Our Academic Integrity Policy was updated in 2022 to explicitly include inappropriate use of AI. Individual unit coordinators have been provided with template wording they can include on their unit sites to describe appropriate use and acknowledgement of generative AI when completing assessments.

Strategy, policy, and principles (including assessment)

The AI in Education Working Group developed an AI Strategy Green Paper, which was later adopted by the Steering Committee and shaped into a ‘Dynamic Generative AI Roadmap’. The roadmap is grounded in Australia’s AI Ethics Principles and establishes aspirational positions on generative AI in higher education, including:

- AI has applications in all facets of University work

- Human agency, expertise, and accountability are central

- AI must benefit the University and its community

- We engage productively and responsibly with AI

- Where AI is used, it is transparent and documented

- Our staff and students will model the use of AI

- AI-human collaborations are normalised

A two-lane approach to assessment

Our ‘two-lane approach’ to assessment design has been widely accepted nationally and internationally, and it is aligned with the TEQSA guidelines on assessment design in the age of AI. The two-lane approach, summarised in the table below, balances the need to help students engage productively and responsibly with AI against the need to assure attainment of learning outcomes.

| | Lane 1 | Lane 2 |
|---|---|---|
| Role of assessment | Assessment of learning. | Assessment for and as learning. |
| Level of operation | Mainly at program level. May be must-pass assessment tasks. | Mainly at unit level. |
| Assessment security | Secured, in-person, supervised assessments. | Not secured. |
| Role of generative AI | May or may not be allowed by the examiner. | As relevant, use of AI is supported and scaffolded so that students learn how to productively and responsibly engage with AI. |
| Alignment with TEQSA guidelines | Principle 2 | Principle 1 |
| Examples | In-person interactive oral assessments; viva voces; contemporaneous in-class assessments and skill development; tests and exams. | Leveraging AI to provoke reflection, suggest structure, brainstorm ideas, summarise literature, make multimedia content, suggest counterarguments, improve clarity, provide formative feedback, learn authentic uses of technology, etc. |

AI × Assessment menu

An ‘AI × Assessment menu’ has been developed to help operationalise assessments, especially in lane 2. The menu approach reflects the role of educators in guiding students on which AI tools (if any) are beneficial to use for specific tasks, rather than relying on unenforceable restrictions on these technologies, which are both untenable and inauthentic to the modern and future workplace.

Coursework Policy

To align with the two-lane approach, two new assessment principles have been added to the Coursework Policy that emphasise:

- Assessment practices must be integrated into program design

- Assessment practices must develop contemporary capabilities in a trustworthy way

Academic honesty and AI education for students

Our mandatory academic honesty induction module for students was updated in 2024 to reflect these changes. We also provide training workshops, resources for tutorials, and the ‘AI in Education’ website, which was co-designed with students to broaden understanding of the ethical and effective use of AI in learning and assessment.

Using AI for marking and feedback

Guidelines have been developed for how generative AI tools may be used for marking and feedback.

Further work

By January 2025, the University will shift its policy setting to assume the use of AI in unsecured assessment. This means that coordinators will not be able to prohibit its use in such assessment. This will help to increase assessment validity and drive change in assessment design towards a fuller lane 2 approach, whilst also encouraging program directors to design appropriate lane 1 assessments at a program level to assure program learning outcomes.

Following this change in policy setting, the University is planning to update its curriculum management system in 2026 to accommodate a new configuration of assessment categories and types. This will require staff to adopt assessment designs that are valid and aligned with the two-lane approach, for example by allowing only assessment categories with appropriate security features to be classified as ‘secured’ lane 1 assessments.

These changes will become part of our internal course review process and of the ways in which we benchmark our assessments against other higher education providers.

Support for unit and program coordinators

Unit coordinators and program directors are currently reconsidering unit and program learning outcomes and starting to redesign assessments towards either lane 1 or lane 2. Lane 1 assessments are being considered especially at the program level to assure attainment of learning outcomes, in many cases as ‘hurdle’ (must-pass) tasks. This involves a cultural shift for unit coordinators whose units may contain mostly ‘lane 2’ assessments, as they need to rely on lane 1 assessments elsewhere in the program to assure attainment of program learning outcomes. In 2024, this work is being funded through targeted grants to assessment leaders in faculties, which we aim to extend to all programs in 2025.

Providing equitable access

Access to generative AI tools for students and staff

Equitable access to state-of-the-art generative AI models (like GPT-4) is essential for students and staff to develop familiarity. The University has worked with Microsoft to make Copilot for Web available across the institution for free. Adobe Firefly is also freely available for staff.

Alongside this, the University has developed an in-house generative AI platform called Cogniti, which allows instructors to design their own ‘AI agents’. These agents are powered by AI models like GPT-4 and, importantly, allow instructors to steer the behaviour of the AI by providing custom instructions and curating the resources available to it, as well as to monitor student usage. Cogniti gives individual instructors a self-serve mechanism to make powerful AI available to all their students in a controlled environment that supports pedagogy. This is enabling new approaches to learning and teaching, such as providing formative feedback, personalised support, and experiential learning.

Further work

The Library, in conjunction with the Research Portfolio and the Education Portfolio, plans to pilot research-specific AI tools, collecting feedback from key stakeholders and considering longer-term investments. Similarly, ICT will work in partnership with the Education Portfolio and faculties to consider discipline-specific AI tools, such as image generation AI for architecture. We will continue to monitor the usage of these tools and the ongoing development of new tools for study, research, and workplace productivity.

Building familiarity

Familiarity describes how well students and staff understand, and are comfortable with, using generative AI in their day-to-day work at the institution. We have purposely used the word ‘familiarity’ here instead of ‘skill’, as not all users will develop skill with generative AI. Familiarity also emphasises an awareness of the broader context of generative AI beyond its use, including ethics, privacy, and safety, as well as how AI impacts assessment and the implications of, and responses to, this.

Staff

From early 2023, the Education Portfolio has run regular training sessions for staff. These sessions were designed to build familiarity and comfort with generative AI tools, while emphasising the urgency and scale of action needed in response. The workshops have included ‘Prompt engineering for educators – making generative AI work for you’ and ‘Responding to AI and assessments’, and have recently been consolidated into the ‘Generative AI essentials for educators’ workshop. This workshop (i) outlines the current state of generative AI, (ii) demystifies generative AI technology, (iii) introduces the two-lane model, assessment menu, and assessment redesign, (iv) demonstrates available AI tools and prompting basics, (v) outlines possible uses of generative AI in education, and (vi) encourages a ‘possibilities’ mindset.

Teaching@Sydney has published a collection of articles to help staff understand and respond to generative AI, including:

- What teachers and students should know about AI in 2023

- Student-staff forums on generative AI at Sydney

- Prompt engineering for educators – making generative AI work for you

- Ten myths about generative AI in education that are holding us back

- ChatGPT is old news: How do we assess in the age of AI writing co-pilots?

- How can I update assessments to deal with ChatGPT and other generative AI?

- Where are we with generative AI as semester 1, 2024 starts?

- What to do about assessments if we can’t out-design or out-run AI?

Our AI in Education Community of Practice currently has over 270 members. It is helping to build organic networks across the institution to share practice and experiences around teaching and assessing with generative AI. The central and faculty-based educational design teams have been running school- and faculty-wide sessions to introduce academic staff to the realities of generative AI and assessment. These include outlining the current state of AI technology that students have access to, the risks to academic integrity, the implications for learning outcomes and curriculum design, and the need to rethink assessment.

In February 2024, we hosted a sector-wide AI in Education Symposium, which attracted 2,000 registrations from Australia and overseas. AI in education is also now a recurring theme in the Sydney Teaching Symposium, held each July, including this year’s.

Students

A number of resources and workshops have been developed to increase familiarity amongst students, including:

- The AI in Education site, which was co-designed with a team of student partners. The aim of this site is to build familiarity around generative AI, its applications, and the rules around its use in learning and assessment. A link to this site is provided to all students as part of their unit outline documents – see the Learning Support section of an example unit.

- A 20-minute activity introducing commencing students to generative AI has been embedded in key first-year ‘transition units’. Run by tutors in the context of a particular discipline, this activity introduces students to key generative AI concepts such as its impact, its use, its ethics, academic integrity, and individual perspectives.

- Regular introductory generative AI sessions run by the Library in conjunction with its peer learning advisors. These cover information and digital literacies around generative AI, how the tools work, key applications for students, and guardrails for use.

- Workshops run by the Learning Hub on key generative AI-enabled tools for literature searching, structuring writing, and proofing work.

Further work

Alongside refreshing and extending these resources for staff and students, work is currently underway to:

- Design an extended drop-in module for first year tutorials that can be adapted to disciplinary contexts. This module is planned to cover what generative AI is and how it works, the ethical and integrity considerations when using it in education, how to use generative AI effectively, practical applications of generative AI tools for learning and assessment, and impacts of generative AI on the discipline, society, and the economy.

- Curate exemplars of good practice around practical generative AI application in teaching and assessment.

- Develop workshops specifically for Honours and Higher Degree by Research students and research supervisors with the Research Portfolio and Library. These will cover academic integrity issues related specifically to the thesis and protection of research data, as well as applications for information literacy.

- Continue engaging regularly with students about their familiarity with generative AI, including through the AI in Education Working Group, hackathons, and other events and channels where we can privilege the student voice. Students will continue to be involved as partners in the development of our policies for assessment, learning and teaching, both informally and through our existing governance structures.

Fostering trust

The three action areas of rules, access, and familiarity are built on a foundation of trust. This trust is a three-way relationship between students, teachers, and AI, and is based on integrity, honesty, security, transparency, openness, and human agency.

Radical transparency

Our AI guardrails and aspirations highlight the need for open acknowledgement of AI use by staff and students. As generative AI is still a relatively new technology, overt transparency helps build trust and normalise the use of these tools in day-to-day work and study.

Secure computing environments

The University’s ICT team works with key vendors and internal stakeholders to establish robust, secure, and private generative AI infrastructure. This elevates trust by providing protected environments within which experimentation can occur safely.

Control by, and visibility for, teachers

The University’s Cogniti AI platform allows teaching staff to steer and control their own ‘AI agents’ and see how students are interacting with them. This enhances the trust that students have in AI tools that are specifically designed to support their learning, and the trust that teachers have in being able to monitor and interpret student-AI interactions.

Response to TEQSA: Artificial Intelligence Request for Information

We have made a public copy of our response to TEQSA available for your information.