In the seven months since ChatGPT was released, the world of generative AI has progressed at an almost impossible pace. Trying to keep up with weekly updates on its impact on education has been like drinking from a firehose. The next big development on the generative AI front that needs our urgent attention is the AI writing co-pilot, embedded directly into productivity suites like Microsoft Office. These “co-pilots” are AI-powered assistants designed to help you generate content.

The companies behind the productivity tools that most of us and our students use, Microsoft and Google, are getting their versions of AI co-pilots ready for imminent release. Microsoft 365 Copilot and Google Duet AI will embed generative AI directly into software like Microsoft Word and Google Docs. This means that we will all have access to text generation AI right from within the spaces where we write. Notion, a popular app used by many students, already has an AI-powered writing assistant.

Ethan Mollick, a pragmatic and thoughtful educator working with AI at the Wharton School in the US, describes this as a “crisis of meaning”:

I don’t see a way that we avoid dealing with this coming storm. So many important processes assume that the amount and quality of our written output is a useful measure of thoughtfulness, effort, and time. If we don’t start thinking about this shift in meaning now… we are likely to face a series of crises of meaning, as centuries-old approaches to filtering and signalling lose all of their value.

So, what happens to assessments now?

Jason Lodge from the University of Queensland and colleagues, and Michael Webb from Jisc UK’s National Centre for AI, have written about the main options regarding assessments. Webb writes that we can either avoid AI, try to outrun it, or adapt to it. All of these responses are legitimate, and all have their short- and long-term benefits and drawbacks. Avoiding it includes reverting to oral or otherwise invigilated exams, which have stronger assessment security but higher costs. And, as assessment experts Liz Johnson, Helen Partridge and Phillip Dawson from Deakin University say, exams can have questionable authenticity. That said, there is certainly a place for secured assessments to assure that learning outcomes are met – more on this towards the end of this piece.

Outrunning it involves trying to design assessments that AI has more difficulty completing – but the risks are that our redesigns will only be temporarily effective as the pace of AI development accelerates, and that they will make the assessment more inequitable for many of our students. Modifying stimulus (e.g. using images in questions) has been suggested, but GPT-4’s ability to parse images will be released to the public. Modifying the content of assessment is also a popular suggestion, such as connecting with personal events, writing reflections, or linking to class material – but recent work has shown that GPT writes higher-quality reflections than humans, and a larger context window allows students to send it as much relevant class material as needed. Modifying the outputs that students produce is also a common response, such as changing assessments to in-class presentations or multimodal outputs – but rapid advances in voice cloning AIs and video and image generation AIs make outrunning AI almost futile. Besides, students could just use AI to generate an in-class presentation (and have done so, to excellent effect). This approach also has the potential to widen achievement gaps between students who are skilled in the use of AI and those who are not.

Adapting to it means we need to rethink how we assess – as Lodge writes, this is a more effective, longer-term solution, but also much harder. The imminent arrival of AI writing co-pilots makes this even more important – students will have AI asking to be invited into their writing process right from the start, through an innocuous prompt to us humans like “Help me write” (Google) or “Describe what you’d like to write” (Microsoft). Having this capability built into mainstream productivity tools also makes it inescapable – it will become specious and even meaningless for us to “ban” the use of these co-pilots.

“Assess the process”, “use authentic assessment” – but how exactly?

Changing assessments to assess the process and not the product has been a growing refrain over the last six months in response to generative AI. Lodge puts this elegantly in a recent post:

While generative AI can increasingly reproduce or even surpass human performance in the production of certain artefacts, it cannot replicate the human learning journey, with all its accompanying challenges, discoveries, and moments of insight. It can simulate this journey but not replicate it. The ability to trace this journey, through the assessment of learning processes, ensures the ongoing relevance and integrity of assessment in a way that a focus on outputs cannot. (emphasis added)

Lodge mentions “reflection activities, e-portfolios, nested tasks, peer collaborations, and other approaches” as effective process-based assessments, but acknowledges that these “often do not scale easily or leave too much room for threats to academic integrity”. This is especially the case in the coming era of AI writing co-pilots.

And even before generative AI burst on the scene, there have been calls to recalibrate higher education towards more authentic assessment. Through a systematic review, Verónica Villarroel and colleagues determined there were three main dimensions to authentic assessment:

- Realism (having a realistic task and realistic context)

- Cognitive challenge

- Evaluative judgement (developing students’ ability to judge quality)

Over the years, we’ve collected a few examples of authentic assessment on Teaching@Sydney, and the University of Queensland has a handy searchable assessment database with examples of authentic assessments.

But again, in a time when generative AI is so in-your-face and part of the way we [will] work, what is ‘authentic’, and what is ‘process’ – and how do we actually assess? Are our assessment approaches built to be effective or to be efficient?

Rediscovering what it means to be human (and assessing this)

Going back to first principles, the Higher Education Standards Framework 2021 legislation reminds us that assessments need to assure that learning outcomes have been met, and that the way that we assess needs to be consistent with these learning outcomes. Specifically, Part A section 1.4 clauses 3 and 4 say:

- Methods of assessment are consistent with the learning outcomes being assessed, are capable of confirming that all specified learning outcomes are achieved and that grades awarded reflect the level of student attainment.

- On completion of a course of study, students have demonstrated the learning outcomes specified for the course of study, whether assessed at unit level, course level, or in combination.

There have been calls to revisit learning outcomes in the age of AI – the Australian Academic Integrity Network has posted on the TEQSA website that “unit and course learning outcomes, assessment tasks and marking criteria may require review to incorporate the ethical use of generative AI”. Given the close and dynamic interplay between learning outcomes and assessments, the increasing presence of AI in common productivity tools suggests we may need to rethink learning outcomes as well.

What do we want our students to know and be able to do when they leave our units and our courses? At Sydney, we want our graduates to have the skills and knowledge to adapt and thrive in a changing world, improving the wellbeing of our communities and society. But what does this actually mean in practice, in the context of an AI-infused world? Cecilia Chan and colleagues from the University of Hong Kong have asked a similar question, from the perspective of educators. They suggest that there are key human aspects that AI can never replace, including cultural sensitivity, resilience, relationships, curiosity, critical thinking, teamwork, innovation, ethics, civic engagement, and leadership. If these sound familiar, it’s because they’re also embedded in Sydney’s graduate qualities for students.

So perhaps a contemporary set of learning outcomes (and assessments) needs to address these human elements. Students’ ability to judge the quality of their own work and that of their co-pilot may be the most important quality we need to develop. Sure, generative AI can create text and other outputs that mimic these human qualities, but that only strengthens the imperative to adapt assessments appropriately to an AI world.

Designing assessments in an era where generative AI is inescapable

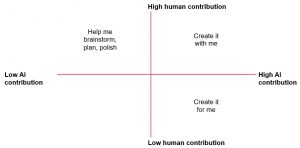

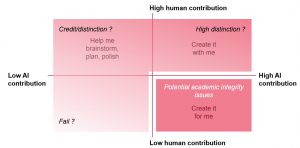

Consider this diagram, which we recently presented in the closing keynote of a UK conference on ‘Education fit for the future’ and which sparked useful conversations on Twitter afterwards. Much of the early dialogue around generative AI has been around its (mis)use in the bottom right quadrant – high AI contribution and low human contribution. As generative AI becomes more inescapable, we need to consider where along the top half of the diagram each of our assessments sits – and they will necessarily sit at different places, depending on the year level, learning outcomes, and other factors.

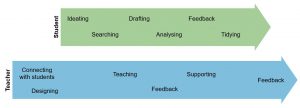

Leon Furze helpfully characterises this as an ‘AI assessment scale’, where educators need to consider whether and how AI helps students in assessments. We’ve adapted his scale in the diagram below, to emphasise that AI can also help educators in the design and delivery of assessments.

What are some approaches to assessments that might work in the age of AI writing co-pilots, considering all we’ve discussed about process, authenticity, and learning outcomes? How might students work productively and responsibly in the top-right quadrant?

Co-creating an output with AI

There are many ways that industry and community groups are already leveraging generative AI, or could potentially do so, including content generation, customer insights, software development, media generation, document summarisation, knowledge organisation, and more. What are the steps that we and our students can take through assessments in this context? Here is a strawman for consideration:

- In your field or discipline, consider the kinds of authentic tasks and authentic contexts that your students may eventually encounter. This may be producing an annual report for stakeholders, a design proposal for a firm, a policy briefing for government, marketing copy for a client, a scientific report for a journal, a pitch deck for investors, an environmental impact analysis for a local council, a proposal for a museum exhibition, a clinical management protocol for a hospital, a citizen science project outline for the community, etc. At this stage, you don’t necessarily need to think about AI. (AI could help you, as an educator, dream up some of these ideas – see how).

- Ensure the assessment is aligned to your learning outcomes. If it doesn’t align, you may need to either refine your learning outcomes, or try a different assessment.

- Draft a marking rubric for the assessment, which is aligned to the learning outcomes. As part of this rubric, you may want to consider incorporating some of the very human qualities encapsulated in the Graduate Qualities as rubric criteria (rows). For example, you may want students to develop and demonstrate information and digital literacy skills, as well as inventiveness. As part of the rubric, consider adding criteria that will allow you to evaluate students’ approach to co-writing – see the next section.

- In the process of starting the assessment, have students design a good prompt for generative AI, and document this process. There are many considerations when designing a good prompt, and the quality of the initial prompt (and follow-up prompts) will determine the quality of the AI output. To design a good prompt that leads to a meaningful output, students will need to clearly specify the task, provide relevant context and examples, and even split complex tasks into subtasks or steps. You can already see that a meaningful engagement with generative AI relies heavily on students having developed some disciplinary expertise. It’s important for students to document this as it demonstrates their learning process, critical thinking, and the cognitive challenges they overcome.

- Once an acceptable draft has been generated by the AI co-pilot, have students critique and enhance this, and document what they do, how they do it, and why. The aim here is for students to improve the AI’s outputs by analysing its accuracy, judging its quality, and integrating relevant scholarly sources (the AI is unlikely to yield any reliable sources) – all elements of developing evaluative judgement. Again, it’s important that students document what they do, how, and why, as it serves as a tool for you to assess the process.

- If there is space in your curriculum, implement a draft submission at this stage. Students can get feedback and critique from peers, who have also gone through a similar process of using an AI co-pilot.

- Since we are interested in assessing the process and not (as much) the outcome, at this stage have students submit the amended draft, their documentation (initial prompt and reasoning, and their analysis and improvement steps), their reflections on peer feedback, and their proposed changes going forwards. Assess these as the primary (i.e. most heavily weighted) component of the overall assessment grade – for ideas on how, see the next section.

- Have students improve the output again, documenting the process as before.

- Students submit the final output. This, and their documentation, is assessed, but has a lower weighting because we are interested in assessing the process, not the outcome.
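To make the weighting in the steps above concrete, here is a minimal sketch of how the two assessed submissions might be combined into one mark. The 60/40 split, the component names, and the marks are all hypothetical, chosen purely for illustration; the model above only suggests that the process submission be the most heavily weighted component. You would substitute weightings that suit your own unit.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """One assessed submission in the staged co-creation task."""
    name: str
    weight_percent: int  # hypothetical share of the overall grade
    mark: float          # mark awarded for this component, out of 100


def overall_mark(components: list[Component]) -> float:
    """Combine component marks into one weighted mark out of 100."""
    total_weight = sum(c.weight_percent for c in components)
    if total_weight != 100:
        raise ValueError(f"weights must sum to 100, got {total_weight}")
    return sum(c.weight_percent * c.mark for c in components) / 100


# Hypothetical 60/40 split: the process submission carries most of the grade.
components = [
    Component("Amended draft, documentation and reflections", 60, 80.0),
    Component("Final output and documentation", 40, 70.0),
]

print(overall_mark(components))
```

With these illustrative numbers, a student who documents and reflects on their process well (80) but produces a weaker final artefact (70) ends up with an overall mark of 76, closer to their process mark than to their product mark.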

Evaluating co-creation with AI

An integral part of the suggested model above is to be able to evaluate the process of making something with AI. Some of the following ideas may be useful criteria to include in the marking rubric, alongside other criteria. Ensure that the criteria that you include in your rubric align with your learning outcomes and the Graduate Qualities.

- AI prompt design that demonstrates disciplinary expertise: how thoughtfully the student has designed the prompt(s) for AI and considered the complexity and clarity of prompts. High distinction could include a deep, well-structured understanding of disciplinary concepts, demonstrated through effective prompt design.

- Critical evaluation of AI suggestions: how effectively the student evaluates and utilises AI suggestions, as in whether they simply adopt AI-generated content or make conscious choices about what to include. High distinction could include making nuanced, evidence-based decisions about what to accept, modify, or reject.

- Revision process: how the student has revised AI suggestions and demonstrated their critical thinking skills and disciplinary expertise. High distinction could include an insightful and critical reflection on where AI-generated content needed improvement, and why. It could also include a demonstrably significant improvement in the quality of the work.

- Information and digital literacy: how the student has evaluated AI-generated content through relevant scholarly sources to enhance the rigour and reliability of the output. High distinction could include the integration of high-quality sources that are appropriately critiqued.

- Documentation and reflection on the co-creation process: how the student has recorded appropriate decisions and interactions with the AI co-pilot, and analysed the strengths, weaknesses, and future improvements to these interactions. High distinction could include a clear and ethical articulation of decisions and their reasoning, deep insight into the role of AI in the co-creation process, and suggestions for future practice.

- Ethical considerations: students’ awareness of the reliability, biases, and other limitations of AI-generated content. High distinction could include a strong understanding of these issues, with suggestions for mitigating potential problems.

This is by no means an exhaustive or prescriptive list. You will need to determine the most effective way to assess and evaluate these skills for your own teaching context.

In contexts where collaboration with AI is acceptable, authentic, and productive, it is conceivable that a student who creates something with AI may learn more, better hone their critical thinking and information and digital literacy skills, and produce a better artefact. To very loosely paraphrase Ethan Mollick, students may be hurting themselves by not using AI, if AI-enabled writing is of a higher quality. Certainly, we need to be fiercely conscious of the many issues with collaborating with AI, not least anchoring bias, where the first (in this context, AI-generated) piece of information clouds our judgement.

Assuring learning outcomes

Webb’s strategies of either avoiding, trying to outrun, or adapting to AI are not necessarily stark choices and our final position will no doubt be a mixture of all three.

We want to know that our students (and our future bridge builders and dentists) know what they are doing: our purpose and social licence are based on our ability to assure that our graduates have met their learning outcomes. This will no doubt involve some secure summative tasks in degrees and programs which exclude technology to ensure program-level outcomes are met. Given the inevitable trade-off between authenticity, security and cost, we need to consider the responsibilities of each unit versus those of the program. With generative AI baked into our productivity tools, this may involve appropriate methods of assurance in these exceptional ‘high stakes’ assessments. As Cath Ellis from UNSW suggests, perhaps we need to stop agonising about over-securing every single assessment. Assessments where students collaborate with AI may help them learn critical skills and develop deeper disciplinary expertise, whilst assessments that are highly secured may help us (and them) additionally assure learning outcomes are met.

If we are honest, our present assessment regime reflects our need to deliver efficiency, given the workload implications of large enrolments and tight deadlines. Assessment for and of learning is not the only thing that a unit coordinator, tasked with returning results to a deadline and within a limited budget, needs to consider. Whilst generative AI may offer some efficiencies in designing and delivering assessments, it also requires us to consider where and what to assess.

As the costs of ‘traditional’ assessments soar, along with concerns about their security and reliability, is this a time to think about assessing where learning happens – such as in the laboratory, in the design studio, and in the tutorial? At the moment, assessment weightings and hence student behaviours favour products (notably assignments and exams), whereas formative learning, development, and evaluative judgement occur in the tasks in our active learning classes.

Adapting to AI will take time and will probably require a mixture of student skill building, staff development, and a paradigm shift in our understanding of what assessment is for. For students, this will involve foundational digital literacy in using AI (such as generic prompt writing) but this will eventually need to be a discipline-specific skill and part of their knowledge creation methodologies. For staff, we need to rapidly and widely build awareness and support the innovations of early adopters.

The ultimate aim of a Sydney education will perhaps not be changed by the generative AI revolution. Indeed, it should become more real and relevant. Our assessments should require, encourage, rank, and reward the ability to use evaluative judgement on the quality, reliability and relevance of the resources our students use and the outputs they produce.

What now, and what next?

- Consider the implications of AI co-pilots in your disciplinary context. Consider what impacts this will have on assessments, particularly written assessments.

- Given the HESF requirements, ensure that select assessments are appropriately secured so that your unit and program can demonstrate student achievement of learning outcomes. For other assessments, consider the above guidance on adapting to and embedding AI.

- Find out through industry and community groups and other stakeholders how AI is being used (or not) in your field, and where it is effective (or not). Use this to inform your decisions about teaching and assessment.

- A working group at the University is drafting a green paper towards a potential University-wide AI strategy. This will seek to help guide the University along a path of ethical, equitable, responsible, and productive use of AI in all facets of the University’s work. Wider consultations on this will be opened soon.

- For further reading, consider these resources:

  - CRADLE from Deakin University has recently put out a thoughtful and high-level overview of assessment responses to generative AI

  - Monash University has a thorough and practical guide to generative AI and its impacts on teaching, learning, and assessment, with a great new set of bite-sized 10-minute chats on generative AI

  - We’ve also curated a bunch of practical ideas and examples of using generative AI productively and responsibly