Over the last ten months, I’ve had the privilege of speaking with hundreds of educators here and overseas about generative AI. The emotional and educational reactions range from despair to elation, and everything in between, and often a mixture as well. As a profession, we are still coming to terms with what generative AI means for teaching, learning, and assessment. In the process of doing so, a mythology has developed around ChatGPT and other generative AIs – and this can cloud how we engage responsibly and productively with these tools. Let’s have a look at some of the key myths.

It’s a big database

Because we can type a prompt into online generative AI tools like ChatGPT and Bing Chat and get an informative response, our minds draw parallels with search engines like Google or library databases. But generative AI works more like our brains, than like a traditional computer database. This first myth is an important one to dispel, as it impacts some of the other myths. Let’s explore further (keeping in mind that I’m not a computer scientist or a neuroscientist, but I am a programmer, biologist, and educator).

Generative AI is built upon artificial neural networks, which were originally designed to model the biological neural networks in our brains. Both neural networks are comprised of neurons that receive signals, integrate or process it, and then send signals to downstream neurons. Many, many neurons are interconnected to form a neural network. As we learn and experience the world, the connections between our neurons, and the strength of these connections, changes. In a similar way, when an artificial neural network is trained (or ‘learns’), the weighting of the connections between the artificial neurons changes in each round of training. Our brains have an estimated 100-150 trillion of these connections (between about 80 billion neurons); GPT-3.5, the artificial neural network underlying the free version of ChatGPT, has about 175 billion of these connections (the number of neurons is undisclosed), arranged in sequential layers. GPT-4, which is much more powerful, has been estimated to have about a trillion of these connections.

Artificial neural networks are trained on billions and billions of words derived from the internet, published and unpublished books, and more. I read somewhere that for a human to read for 8 hours a day the amount of words that GPT-3.5 has been trained on, it would take about 22,000 years. As the artificial neural network undergoes millions and billions of rounds of training, it iteratively adjusts the weights of the connections between all its billions of neurons. After training completes, the underlying strengths of the connections (‘weights’ or ‘parameters’) in its artificial neural network are set.

Then, when you type in a prompt, your words are converted into a massive array of numbers (‘vectors’) and fed into the artificial neural network (for an outstanding explanation, much clearer than I could ever write, check out this article on Understanding AI). Each layer of the network does different things – neurons in one layer might interpret the syntax of the language, whilst neurons in another layer might modify the tense of words, and neurons in another layer might connect similar ideas together, whereas neurons in another layer might relate names and locations to particular people and landmarks. The vectors are being modified as they pass through each layer until they arrive at the final layer, when they are converted back to words for you to read.

So when you prompt a large language model like GPT-3.5, which is primarily powered by an artificial neural network, it’s not using your prompt to perform a text search over a massive database. Rather, it’s functioning more like your mind, interpreting your prompt through billions of interconnected neurons to parse the meaning and context of your prompt, eventually producing an output.

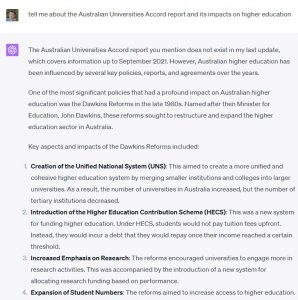

ChatGPT doesn’t know anything past September 2021

The GPT-3.5 and GPT-4 models were trained with a massive set of text that existed in the world up until the end of September 2021. So, this myth is partly correct – the underlying neural network has its parameters fixed once training on this dataset finishes. However, there are two issues with this myth. One is that there are many new models out there (beyond the GPT-3.5 and GPT-4 models that drive ChatGPT) that have been trained on corpuses that contain more up-to-date text. Also, importantly, there are many implementations of AI tools now that can connect AI models to the internet.

Take for example Bing Chat and Google Bard, which are available for free. Or, ChatGPT Plus with plugins – some plugins give ChatGPT the ability to download PDFs and other information. When you prompt these implementations, the AI will first think about what searches it needs to perform on the live internet to respond to your prompt, perform these live searches, read the web resources at the speed of AI, and then combine these with its underlying natural language powers (the trained artificial neural network) to produce an informed response, often referring accurately to real sources it found.

ChatGPT is learning from our inputs

Again, this is a half-myth. ChatGPT can better understand us if we have an extended conversation with it. For example, in a conversation we may prompt it to take on the persona of an expert Socratic tutor, only answering questions by asking more questions. It is then going to keep this earlier prompt in mind when responding to later prompts in the same conversation. Its ability to do this relies on its ability to look back through that same conversation, in what is called a ‘context window’. For GPT-3.5, the context window size is about 3,000 words, which means it can ‘learn’ from the most recent 3,000 words of your current conversation to inform its next response. This doesn’t change the parameters in the neural network at all, so this kind of learning is ephemeral.

AI companies want to keep improving their AI tools, so that they are safer and more helpful. To do this, some will retain your inputs (and the AI’s outputs) to help them improve their models. OpenAI (the company behind ChatGPT), for example, says that “ChatGPT… improves by further training on the conversations people have with it” and that your conversations help them to “better understand user needs and preferences, allowing our model to become more efficient over time”. How exactly this happens isn’t clear – but it’s unlikely that those big (and expensive) training runs are being conducted regularly, which suggests that the underlying neural network isn’t changing its parameters (or ‘learning’) from your conversations – at least in the short term.

Note that at least for ChatGPT, you can disable chat history so that your conversations aren’t retained for training purposes. Not all AI tools allow this, and some actually have very permissive privacy policies which can be a risk, so it’s always important to check the privacy policy and data controls for AI tools that you use.

AI doesn’t have the biases that humans have

This is an interesting myth, sometimes used to justify the use of these generative AIs in making judgements that humans would or should make, such as in screening job applications or marking student assignments.

Content on the internet has been, up to recently, predominantly human-generated. Not only do humans have many biases themselves, but the composition of authors of available content is also not always balanced. For example, Wikipedia has a notable racial and gender bias which the project has been actively trying to improve. This means that since generative AI’s artificial neural networks are trained on such content, they can also pick up these biases, leading to “models that encode stereotypical and derogatory associations along gender, race, ethnicity, and disability status”. As an extreme example, imagine that, as you were growing up, you only read books where doctors and CEOs were male – if someone asked you a prompt about who runs hospitals, you’d only be able to fathom a response that reflected this biassed input. Key women have tried to let the world know about this – and it’s important to keep this in mind when using these AIs.

Generative AI can’t get references right

Early in 2023, a common piece of advice for educators was that if we asked students to give us citations to papers, this would AI-proof our assessments because ChatGPT couldn’t give real references. Rather, it would ‘hallucinate’ (make up) references by stringing together words that seemed to fit together. When GPT-4 came out, people discovered that it was much less prone to hallucination but still could make up fake references. So we’re safe, right?

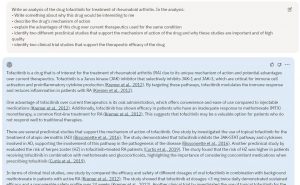

In the past few months, many AI tools have emerged that dispel this myth. One such tool is Assistant from scite.ai. It has a database of over a billion citation statements between real papers, allowing this AI tool to draw from real literature and provide meaningful responses based on the full text of the papers themselves and how and why papers cite each other. When you prompt scite.ai’s Assistant, it draws on this database and uses the language power of GPT to derive a more accurate response that cites real papers. However, Assistant is not free (although you can get two free goes) – which introduces real equity concerns for education and research. Elicit.org is another AI tool that draws from a large database of real papers and citation networks to provide real references to prompts.

AI can’t write reflectively

It’s commonly thought that generative AI performs better at lower Bloom’s taxonomy levels (e.g. remember, understand) than the higher ones (e.g. analyse, evaluate, create) – and that we can AI-proof assessments by targeting those higher-order cognitive skills. However, because generative AI doesn’t operate like a database and more as a neural network, it actually has problems with factuality compared to creativity and analysis.

Reflective writing is an interesting example. Reflection requires students to operate at the higher levels, analysing their experiences and creating meaning from them. As researchers have shown recently, “ChatGPT is capable of generating high-quality reflections, outperforming student-written reflections in all the assessment criteria” (emphasis added). The same researchers note that students could “exploit ChatGPT to achieve a high grade [on reflective assignments] with minimum effort. But this may deprive them of developing critical thinking and idea-synthesising skills that were purposefully designed for the reflective writing task, leading to fewer learning opportunities”. And it’s not just generic reflections – if you want students to write against a particular framework like Gibbs’ reflective cycle, all one needs to do is prompt generative AI to do this.

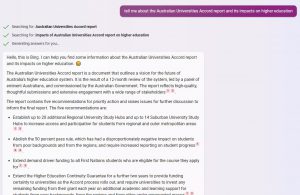

But ChatGPT can’t do calculations or interpret data or use contemporary class resources or…

Newer AI tools have emerged over the last few months that can:

- take in large quantities of text (e.g. Claude 2‘s context window is 100,000 ‘tokens’, or about 70,000 words),

- read and process uploaded files (e.g. Bing Chat and Google Bard can accept image inputs, Claude 2 can accept document uploads), and

- analyse PDFs and data files (e.g. ChatGPT Plus with plugins can extract information from PDFs on the internet, and ChatGPT Plus with Code Interpreter can design and execute Python scripts to analyse uploaded spreadsheets).

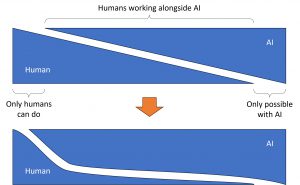

These have all appeared in the space of a few months in 2023 – it’s likely that the pace of AI advancement will continue, so it’s difficult to predict what will be available in 6-12 months. Tasks that were once solely in the domain of humans are now increasingly able to be addressed completely by, or in conjunction with, AI.

Recently, I was speaking with a Finance academic who was genuinely curious about AI’s capabilities for their assessments. (I’m going to preface these examples by saying I know nothing about this field). In one assignment, students need to use a company’s most recent annual report to calculate key efficiency ratios. After explaining to us how it would do this, ChatGPT Plus with plugins proceeded to perform the calculations, drawing from data in the annual report, and even telling us which pages the information came from. In another assignment, students need to use the annual report and other information to provide a buy/hold/sell recommendation. After providing a summary from the annual report of relevant metrics and suggesting aspects that should be considered, ChatGPT refused to provide a stock recommendation because it (rightly) didn’t want to provide financial advice. However, after some further coaxing (prompting), we were able to have ChatGPT generate hypothetical recommendations, and then critique its own recommendations. The academic noted the AI’s output would receive at least a distinction in their final assessment.

My authentic assessment is AI-proof

Authentic assessments have many benefits – including increasing student “autonomy, motivation, self-regulation and metacognition”. However, there’s a misconception that authenticity makes assessment cheat-proof. However, this is not the case – it turns out that “assessment tasks with no, some, or all of the five authenticity factors [including match to professional practice, cognitive challenge, wider impact, and feed forward loops] are routinely outsourced by students”. Authenticity will also not make an assessment AI-proof – as in, making it so that AI cannot complete it.

As we’ve seen for the myths above, AI can bring in real, contemporary references, and write in personal and reflective ways, as well as use material from the internet or our classes. So, should we give up on authentic assessments? By no means – it’s important to think of authentic assessment as assessment that can motivate and inspire students to do the work for themselves, or collaborate with AI in productive and responsible ways so that they are still learning what they need to, through the process of completing the assessment. Our two-lane guidance for assessment design in the age of generative AI highlights this – we need ‘lane 2’ assessments to be as authentic as possible to function as ‘assessment for learning’ and ‘assessment as learning’.

AI-generated text can be easily detected

Understandably, a common reaction to these uncanny abilities of AI is to rely on detection, and the deterrence factor that this brings. However, people have identified many pressing issues with software (powered themselves by AI) that aims to detect AI-generated text. Researchers have also found that AI detectors can be biassed against writers from non-native English speaking backgrounds, partly due to the detectors relying on detecting characteristics of AI-generated writing that are similar to writing characteristics of non-native speakers. Other researchers have found that “the available detection tools are neither accurate nor reliable and have a main bias towards classifying the output as human-written rather than detecting AI-generated text. Furthermore, content obfuscation techniques significantly worsen the performance of tools”. The latter point is important – there are many ways, automated and human, to modify AI-generated text so that it becomes undetectable. Interestingly, Vanderbilt University has recently decided to disable their use of a commercially-available detection tool – their announcement contains a thoughtful analysis of their decision.

Beyond AI-based detection of AI-generated text, researchers have also looked into what happens when humans try to detect AI text. A recent paper asked human markers to examine papers that were potentially written by students or AI. The authors found that if markers were primed to consider whether text was student- or AI-generated (and to be honest, who isn’t these days…), they not only tended to raise their standards, but also doubted that well-written work or honest mistakes such as irrelevant literature would have come from humans. Human markers also looked for factors that they thought were characteristics of AI-generated text such as “inadequate argumentation, lack of references from the course literature and unrelated to the course content” – but these are also issues in human-written text.

There are therefore very important considerations surrounding detection, including unfavourable bias by both human and AI detectors. It’s also important to consider it’s possible that, if we are moving towards a future state where authentic assessments will involve the use of AI, the need for detectors may dissipate in this context.

It’s hard to learn and use

Everyone’s workload is stretched beyond imagination. Finding the time to explore a new tool, especially one as unfamiliar as AI, is difficult. Not only this, but we’re going to need to rethink many of our assessments. All of this is true, and there are systemic issues with workload in higher education – and the appearance of generative AI on the scene certainly hasn’t helped.

But perhaps generative AI isn’t just part of the problem, but could be part of the solution as well. When the internet appeared on the scene, or smartphones, there were threats to education as well as opportunities. Generative AI is similar – the way that we learnt to use the internet and smartphones effectively for education was really to just use it. There weren’t user manuals to read or instructions to follow – we all built up collective familiarity with these ‘general purpose technologies‘ over time. At least with generative AI, it’s easy to access and you work with it through natural language.Generative AI feels strange and new. In many ways it is. But by considering how to use it to empower you as an educator, understanding a bit more about how it works and the mythology surrounding it, and just by trying it out and using it as part of your day-to-day work, you’ll discover and share how to use it, and how not to use it, productively and responsibly.

Tell me more!

- Check out our resources on using AI for learning, teaching, and assessment

- A version of this post also appeared on Linkedin