2022 ended with a tremendous amount of discussion on artificial intelligence (AI), and the trend has continued in 2023. People on social media are amazed by ChatGPT, a generative AI fine-tuned from a model based on GPT-3 that we covered here just half a year ago. Even mainstream media has picked up on the hype around ChatGPT and its implications for education. Despite its powerful capabilities, AI is not magic; to use AI in a meaningful way that makes learning more productive, teachers and students need a realistic understanding of the capabilities and limitations of currently available AI systems. Nor is AI something to fear. It is a new tool that builds on familiar technology like Google search and predictive text, and it does not replace the creativity and critical processes that we seek to develop in our students. In this article, we highlight some important features of these AI systems and discuss what teachers and students should be aware of when using them.

Check out the other resources in our AI and Education at Sydney collection.

ChatGPT models language, not knowledge

Generative AI can create new content that is similar to the examples it was given during its training process. The popular ChatGPT is a transformer-based generative language model that is trained on a large dataset of text (such as books, webpages, and other written materials), fine-tuned using conversations provided in a dialogue dataset and optimised to interact with users in a conversational way. ChatGPT is a ‘large language model’, and is designed to “predict the next word based on previous ones”. In a way, it is a very souped-up version of the ‘Smart Compose’ feature that we use every day in Gmail and Outlook, which is an AI-powered feature to suggest complete sentences in our draft emails.

This is important because the human-sounding sentences that ChatGPT generates, whilst undoubtedly impressive to read, are based on the probability of words appearing after other words; ChatGPT knows these probabilities because it has been trained on so many phrases, sentences, and texts written by humans. Unlike Smart Compose, which predicts only the next few words in our email programs, ChatGPT can generate large swathes of convincing text that (probabilistically) respond to the prompts it is given. ChatGPT sounds human because it is algorithmically replicating human language, not because it understands the concepts that it has been asked about. It models language, not knowledge.
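To make the idea of "the probability of words appearing after other words" concrete, here is a minimal sketch in Python of a toy next-word predictor. It is a deliberate oversimplification: it only looks at the single previous word (a "bigram" model), whereas ChatGPT uses a transformer that takes much longer stretches of text into account. The tiny corpus and the function names are our own illustrative assumptions, not anything taken from ChatGPT itself.

```python
from collections import Counter, defaultdict

def train_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn the raw follower counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

def most_likely_next(counts, word):
    """Return the most probable next word, or None if `word` was never seen."""
    probs = next_word_probabilities(counts, word)
    return max(probs, key=probs.get) if probs else None

# Hypothetical toy "training data"; a real model sees billions of words.
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
counts = train_bigram_counts(corpus)

print(next_word_probabilities(counts, "the"))
print(most_likely_next(counts, "sat"))
```

Nothing in this sketch knows what a cat or a mat is; it simply reports which words tended to follow which in the text it was given. That is the sense in which such a model captures language rather than knowledge.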

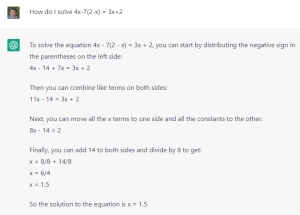

It would not surprise us, then, that ChatGPT may not be able to provide a correct response to a simple mathematical prompt, such as “How do I solve 4x-7(2-x) = 3x+2”. The screenshot to the right (replicated in text below) demonstrates one of ChatGPT’s responses; each time it is provided a prompt, it generates a new (and different) response.

To solve the equation 4x - 7(2 - x) = 3x + 2, you can start by distributing the negative sign in the parentheses on the left side:

4x - 14 + 7x = 3x + 2

Then you can combine like terms on both sides:

11x - 14 = 3x + 2

Next, you can move all the x terms to one side and all the constants to the other:

8x - 14 = 2

Finally, you can add 14 to both sides and divide by 8 to get:

x = 8/8 + 14/8
x = 6/4
x = 1.5

So the solution to the equation is x = 1.5

What’s important to note about this response is that it sounds eerily similar to how a tutor may guide a student through solving the problem. It also sounds correct, and actually is correct until the final step: from 8x - 14 = 2, adding 14 to both sides gives 8x = 16, so the solution is x = 2, not 1.5. Each time ChatGPT was given this prompt, it gave us a different explanation and a variety of answers, including x = 1.5, 2, -2, and -8/3. But if we remember that ChatGPT is a language model, it should not be surprising that its response emulates human language, not human knowledge. (That said, some AI tools that are marketed to students, such as Caktus, are designed to solve these problems with ease.)
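One practical habit for students is to check any AI-provided answer by substituting it back into the original equation. The short Python sketch below does this for the candidate answers ChatGPT gave us; the function names are our own, and exact fractions are used so that rounding cannot hide a mismatch.

```python
from fractions import Fraction

def left_side(x):
    """Left-hand side of the equation: 4x - 7(2 - x)."""
    return 4 * x - 7 * (2 - x)

def right_side(x):
    """Right-hand side of the equation: 3x + 2."""
    return 3 * x + 2

def is_solution(x):
    """Check a candidate by substitution, using exact arithmetic."""
    x = Fraction(x)
    return left_side(x) == right_side(x)

# The answers ChatGPT produced across repeated attempts at the same prompt.
candidates = [Fraction(3, 2), Fraction(2), Fraction(-2), Fraction(-8, 3)]
for candidate in candidates:
    print(f"x = {candidate}: {is_solution(candidate)}")
```

Only x = 2 makes both sides equal (both come to 8), so the confident-sounding "x = 1.5" fails a check that takes a few seconds to run.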

AI responses may sound authoritative

Although ChatGPT gave a wrong answer as demonstrated above, it presented its response in a convincing manner (e.g., providing step-by-step instructions) that could confuse novices or anyone not reading it carefully. In fact, any responses the AI generates are usually presented in a confident and persuasive way; this is related to its training process, where ‘longer answers that look more comprehensive’ were favoured. Therefore, teachers and students need to bear in mind that although responses from large language models such as ChatGPT may appear sensible or authoritative, their ‘outputs may be inaccurate, untruthful, and otherwise misleading at times’ as ChatGPT’s creators warn. Unless the AI is specifically designed to express uncertainty and leave room for other perspectives, the way it responds could be misleading. This is important for education because the outputs of large language models don’t necessarily replace domain knowledge from a knowledgeable human.

The information and sources provided by AI may not be real

In addition to text, generative AI can be trained to generate other types of output, such as images and computer code, that are novel. This can be beneficial in some situations, and a drawback in others. Four years ago, NVIDIA’s StyleGAN AI was used to power a website (https://thispersondoesnotexist.com/) that can generate realistic images of people who do not exist. Since then, there have been many interesting generators that use AI to create random but realistic images or text (more at https://thisxdoesnotexist.com/). These have benefits in helping with boilerplating and creativity.

However, in the context of higher education where knowledge synthesis is key, the ability to create things that don’t exist becomes problematic. Two weeks before ChatGPT was released, Facebook’s parent company Meta introduced its latest language model, Galactica, which was trained on over 62 million documents including 48 million scientific papers. However, Galactica was taken down within three days because the AI was found to generate not only biased or incorrect information but also research papers that didn’t exist. In its responses, ChatGPT will usually not cite any research papers or other sources. When requested, though, it will happily amend text that it has already written and provide citations and even a reference list. Because it is a language model, these sources may not exist, even though they may look quite convincing to a marker and to students themselves. Perplexity AI, a new tool powered in part by GPT-3, aims to address this by scouring the internet for real sources that it then cites in generated text. The ability of AI to generate information and sources that don’t exist, but sound authoritative whilst doing so, emphasises the importance of a knowledgeable human.

ChatGPT appeared on the scene in late November 2022, and has already sent shockwaves through the worlds of education, knowledge creation, and creativity. AI will only keep improving at breakneck speed, even if the current generation of AI has limitations around factual accuracy and overconfidence. The recent commentary across the globe around ChatGPT is that we need to embrace it as a new tool that has the potential to transform knowledge industries. In our next article, we will provide practical suggestions about how we can positively work with AI within higher education right now to make learning, teaching, and assessment more productive, efficient, and creative.
