The University’s ‘two-lane approach’ to assessment, summarised below in Table 1, focuses attention on the legislated need to ensure our graduates have met the learning outcomes of their courses in a world where generative AI is ubiquitous and can mimic many of the products of ‘traditional’ assessments. We need to ensure that our graduates can learn and prosper in this world (e.g. through appropriately designed lane 2 assessments) and can demonstrate the knowledge, skills and attributes detailed in the course learning outcomes (with validation coming through lane 1 assessments). As summarised below and detailed in our assessment principles, the approach calls for assessment design at the program level as much as possible, with lane 1 assessments, in which the use of AI is controlled, validating attainment at relevant points throughout and at the end of each student’s study.

In this article, we detail what program-level assessment design means and why it is vital to ensure we and other universities successfully shift to assessment models for the age of generative AI.

What is program-level assessment?

Program-level assessment (sometimes also called ‘Integrated Programme Assessment’, programme-focussed assessment or systemic assessment) focuses on stage- and program-level learning outcomes (PLOs). This speaks to assessments that are designed to build progressively and holistically through the degree to support and validate attainment of these PLOs for each individual. This requires planning and design to extend beyond individual units of study. The assessment design and associated feedback encourages students to consolidate learning and connect concepts from different parts of their program, both within units at the same level of study and across the years. With learning validated at important progression points within the program, students are supported to develop and advance from transition to graduation.

Is it the same as ‘programmatic assessment’?

No – the term “programmatic assessment” is deliberately not used in the assessment principles in the Coursework Policy or Assessment Procedures. Programmatic assessment is a detailed area of scholarship with existing and extensive usage, in medical education in particular. Programmatic assessment is associated with detailed assessment planning to enable longitudinal data to be regularly captured and used to inform educators and learners on their progress (Van der Vleuten et al. 2012). For our programs which are competency based, such as dentistry, medicine and veterinary science, a fully programmatic approach may be warranted. In most of our degrees, though, it is not.

What are the advantages for students and educators of program-level assessment?

- Holistic design: assessments designed exclusively at a unit level can lead to a siloed approach, leading to shallow learning (Tomas & Jessop, 2018; Whitfield & Harvey, 2019) and difficulties in supporting the development of each individual student. A focus on the outcomes at the disciplinary level such as the majors in liberal studies degrees increases their coherence, and the skill and knowledge development in the contributing units (Jessop & Tomas, 2017).

- Assurance of learning for all students: by focussing on validating PLOs through cycles of introduction, development and assurance, it is much easier to demonstrate that these are met for each student whatever their route through the degree. We award degrees, after all, on the basis of attainment of the PLOs (Charlton & Newsham-West, 2022).

- Assurance of learning and academic integrity: as discussed below, advances in technologies such as generative AI make validating achievement increasingly difficult, time consuming, stressful and intrusive. It is pedagogically and practically better to purposefully plan, oversee and conduct secured assessments at selected points in the program.

- Increased utility of feedback: with PLOs introduced, developed and assured through selected points in their studies, students can use feedback and feedforward to improve on subsequent tasks (Boud & Molloy, 2013).

- Coherent development and support for students: by focussing on the stages of their program, the learning and support for each student can be designed to be a coherent journey (Boud & Molloy, 2012; Jessop, Hakim & Gibbs, 2013). This could include deployment of diagnostic assessment of mathematics or language ability at the start of the program and the selection of suitable progression points such as the end of first and second year. In turn, assessment and feedback design can purposefully develop students into self-regulated learners.

- Volume of assessment and workload: designing and planning assessment which focusses on the program-level learning outcomes can reduce the number of required assessments and assessment load:

  - As discussed below, the significantly increased difficulty in securing assessment in an age where ubiquitous generative AI technologies can mimic humans and traditional assessment outputs means that such assessment will become even more time consuming and needs to be designed more deliberately and deployed more selectively.

  - Planning assessment at the program level reduces stress, and deadline and marking bottlenecks. Analysis of assessment load for students with typical enrolment patterns in our degrees shows just how the assessment becomes congested when it is planned only at the unit level.

  - By reducing siloing of learning and assessments in units of study, there is less likelihood of repetition as reported by students.

  - By concentrating supervision into lane 1 assessments, there is the possibility of reduced marking loads and academic integrity administration.

As Harvey, Rand-Weaver & Tree (2017) put it:

A program-level approach to assessment at Brunel University reduced the “summative assessment burden by 2/3”.

The role of program-level design in assuring learning in the Higher Education Standards Framework and in our assessment principles

The assessment principles in our Coursework Policy follow the TEQSA ‘Assessment reform for the age of artificial intelligence’ and the Group of Eight principles on the use of generative artificial intelligence in referring to program-level assessment. These all in turn follow from the Higher Education Standards Framework and from accrediting bodies that require demonstration of learning across a course.

Assessment principle 5 in the Coursework Policy states that “Assessment practices must be integrated into program design”. This principle requires that:

- assessment and feedback are integrated to support learning across units of study, courses, course components and year groups;

- assessment and feedback are designed to support each student’s development of knowledge, skills and qualities from enrolment to graduation;

- students’ learning attainment can be validated across their program at relevant progression points and before graduation.

Program-level assessment design thus requires alignment of assessment across units (the first requirement above), with deliberate consideration of individual development and validation at relevant points in the course as well as before graduation. Because of the effect of generative AI on many of our existing assessments, principle 6 also requires that this be performed in a “trustworthy way” with “supervised assessments [that] are designed to assure learning in a program”.

“Program” here includes both courses and their components, such as majors and specialisations. The requirements of principle 5, though, mean that validation at regular points must occur. In degrees like the BSc, BLAS, BA and BCom, these points could mean once a student has completed the degree core, the first year or the second year. The points at which it is most useful to validate learning, through lane 1 assessment, are best decided by the course and component coordinators.

The importance of program-level design in the two-lane approach to assessment

The ‘two-lane approach’ to assessment, summarised in Table 1, reflects the reality of teaching, learning, assessment and preparing students for their careers in an age where technologies such as generative AI are pervasive and ubiquitous. Lane 2, which focusses on assessment for learning, is likely to be where we scaffold and teach students how to productively and responsibly engage with generative AI. Like other skills, this is best approached at a program level to ensure a developmental approach and avoid repetition. We focus on lane 1 here, however, as that is where the necessity and advantages of the program-level approach are most stark.

In 2024, we are still near the start of the generative AI revolution but it is clear that these tools can already mimic human outputs, accurately create content (including written work, images, music, video and the spoken word) and answer questions. Even now, detecting that an output has been produced by generative AI rather than a human is unreliable at best, relying mostly on catching under-skilled use of the AI. Similarly, even in 2024, distinguishing the voice and facial movements of an AI avatar on a screen from those of a real human is difficult and may require expertise. Where it is important to reliably understand and measure what a student is capable of without using AI or using it in controlled ways, assessment must be secured – this means in person and with the appropriate supervision needed to restrict the use of prohibited supports. Given the difficulty and undesirability of securing all assessments, it is better to identify where in the program to place these lane 1 assessments.

Table 1: Summary of the two-lane approach.

| | Lane 1 | Lane 2 |
| --- | --- | --- |
| Role of assessment | Assessment of learning | Assessment for and as learning |
| Level of operation | Mainly at program level | Mainly at unit level |
| Assessment security | Secured, in person | ‘Open’ / unsecured |
| Role of generative AI | May or may not be allowed by examiner | As relevant, use of AI scaffolded & supported |
| TEQSA alignment | Principle 2 – forming trustworthy judgements of student learning | Principle 1 – equip students to participate ethically and actively in a society pervaded with AI |
| Examples | In person interactive oral assessments; viva voces; contemporaneous in-class assessments and skill development; tests and exams. | AI to provoke reflection, suggest structure, brainstorm ideas, summarise literature, make content, suggest counterarguments, improve clarity, provide formative feedback, etc |

How much of our existing assessment can be considered secure?

Table 2 below lists the number of each assessment type running in units in 2024 together with the relative weighting, an estimate of the number of student submissions across the year and an indication of the likely level of security. The relatively low volume of secure assessment is not necessarily a problem here. Given the comments above about how difficult it is to genuinely secure assessment, it is probably a good thing. However, without program-level design guiding where these secure assessments are deployed, it is very difficult to demonstrate the program-level assurance of learning that is legislatively required to award degrees. The ordering in the table reflects the percentage weighting and this alone suggests that we are relying on highly weighted, insecure take-home assignments in many of our programs to assure learning.

Generative AI technology is rapidly improving and is already being built into wearables, such as glasses and earpieces. It is no longer science fiction to imagine this extending to devices which interface directly with the human brain. Securing assessment even in face-to-face environments such as exam halls is already difficult and intrusive. It will get harder. Trying to secure all assessments in units of study is untenable from a staff and student workload point of view and would displace assessment for learning.

Table 2: Assessment types for all units of study running in 2024, together with the number of times used, the relative weighting and an estimate of the number of student submissions / sittings. The latter assumes that each enrolled student completes the assessment once (and only once). The colour coding indicates whether the assessment is likely to be secure (pink: ■), unsecure (green: ■) or where this is unclear (blue: ■) from the present assessment type descriptor. To estimate group submissions, a group size of 5 has been assumed. ‘Weighting’ is the sum of the weightings across every unit for each type and then divided by the total number of units.

| Type | Number | Weighting (%) | Submissions | Likely level of security |
| --- | --- | --- | --- | --- |
| Assignment | 10815 | 40.8 | 704895 | Unsecure |
| Supervised exam | 1779 | 12.5 | 214543 | Secure |
| Creative / demonstration | 1688 | 9.1 | 38335 | Unclear |
| Presentation | 2678 | 7.3 | 88070 | Unsecure |
| Small assessment | 1914 | 6.2 | 145154 | Unsecure |
| Participation | 2056 | 3.6 | 162536 | Unsecure |
| Honours thesis | 337 | 3.5 | 5842 | Unsecure |
| Online task | 2144 | 3.4 | 290028 | Unsecure |
| Skills-based | 1614 | 3.2 | 109488 | Unclear |
| Small test | 1348 | 2.8 | 142025 | Unclear |
| Tutorial quiz | 1028 | 1.6 | 130428 | Unclear |
| Supervised test | 447 | 1.6 | 67033 | Secure |
| Dissertation | 125 | 1.4 | 901 | Unsecure |
| Placement | 1160 | 1.3 | 46723 | Unclear |
| Short release assignment | 344 | 1.2 | 50378 | Unsecure |
| Oral exam | 42 | 0.2 | 3982 | Secure |
| Practical exam | 22 | 0.2 | 2268 | Secure |
| Attendance | 135 | 0.1 | 15864 | Unsecure |
| Practical test | 11 | <0.1 | 1535 | Secure |
| Oral test | 6 | <0.1 | 142 | Secure |
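
The figures in Table 2 can be tallied by likely level of security to see how much of the overall weighting currently sits in each category. The following is a minimal sketch only: the rows are copied from Table 2, the function name `totals_by_security` is our own, and the two weightings listed as "<0.1" are entered as 0.0 (an assumption), so the secure total is a slight underestimate.

```python
from collections import defaultdict

# (type, weighting %, estimated submissions, likely security), copied from Table 2.
# Assumption: weightings shown as "<0.1" are entered as 0.0, so totals are lower bounds.
TABLE_2 = [
    ("Assignment", 40.8, 704895, "Unsecure"),
    ("Supervised exam", 12.5, 214543, "Secure"),
    ("Creative / demonstration", 9.1, 38335, "Unclear"),
    ("Presentation", 7.3, 88070, "Unsecure"),
    ("Small assessment", 6.2, 145154, "Unsecure"),
    ("Participation", 3.6, 162536, "Unsecure"),
    ("Honours thesis", 3.5, 5842, "Unsecure"),
    ("Online task", 3.4, 290028, "Unsecure"),
    ("Skills-based", 3.2, 109488, "Unclear"),
    ("Small test", 2.8, 142025, "Unclear"),
    ("Tutorial quiz", 1.6, 130428, "Unclear"),
    ("Supervised test", 1.6, 67033, "Secure"),
    ("Dissertation", 1.4, 901, "Unsecure"),
    ("Placement", 1.3, 46723, "Unclear"),
    ("Short release assignment", 1.2, 50378, "Unsecure"),
    ("Oral exam", 0.2, 3982, "Secure"),
    ("Practical exam", 0.2, 2268, "Secure"),
    ("Attendance", 0.1, 15864, "Unsecure"),
    ("Practical test", 0.0, 1535, "Secure"),
    ("Oral test", 0.0, 142, "Secure"),
]


def totals_by_security(rows):
    """Sum weighting (%) and estimated submissions for each security level."""
    weighting = defaultdict(float)
    submissions = defaultdict(int)
    for _type, weight, subs, security in rows:
        weighting[security] += weight
        submissions[security] += subs
    # Round to one decimal place to match the precision of the table.
    return {k: round(v, 1) for k, v in weighting.items()}, dict(submissions)


weighting, submissions = totals_by_security(TABLE_2)
for level in ("Secure", "Unsecure", "Unclear"):
    print(f"{level}: {weighting[level]}% of weighting, {submissions[level]} submissions")
```

On these figures, assessment types marked as likely secure account for about 14.5% of weighting, against roughly 67.5% for unsecure types and 18.0% where security is unclear.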

How can we move to program-level design in our assessment?

The ‘traditional’ modular or unit of study based approach is the most common assessment practice worldwide (van der Vleuten, Heeneman & Schut, 2020), in Australia (Charlton & Newsham-West, 2022) and, outside our professionally-accredited degrees, at the University of Sydney. It is recognised that many of our degrees are very flexible with many pathways and ways of progressing through them and graduation dependent only on compiling sufficient credit. However, it is likely that they are also not compliant with the Higher Education Standards Framework legislation as we cannot assure learning in them. The need to align our assessment practices with the two-lane approach to achieve this necessitates that we work towards program-level planning and design across all of our degrees over the next couple of years. There are a number of ways we can start this journey.

Assessment plans

A good starting point is for disciplines to discuss and revisit their assessment plans in light of the two-lane approach and the new assessment principles. In the Learning and Teaching Policy, for liberal studies degrees, assessment plans are required for: majors; streams programs; and degrees. For professional and specialist degrees, assessment plans are required for streams and degrees, and optional for majors. By mapping curriculum learning outcomes and graduate qualities against units and assessment tasks, assessment plans can be used to plan and review where outcomes (especially PLOs) are assured. Assessment data held in Sydney Curriculum now makes it possible to map assessment load, types and timing for combinations of units based on degree component structures or common enrolment patterns.
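
As a rough illustration of this kind of mapping, the sketch below records which PLOs each assessment task addresses and flags any PLO that is not validated by at least one lane 1 assessment. Everything in it is hypothetical: the unit codes, task names, PLO labels and the `unassured_outcomes` helper are invented for illustration and do not reflect how Sydney Curriculum stores or reports assessment data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    unit: str             # unit of study code (hypothetical here)
    task: str             # assessment task name
    lane: int             # 1 = secured, 2 = open
    outcomes: frozenset   # identifiers of the PLOs the task addresses


def unassured_outcomes(plos, assessments):
    """Return the PLOs that no lane 1 assessment in the plan addresses."""
    assured = set()
    for assessment in assessments:
        if assessment.lane == 1:
            assured |= assessment.outcomes
    return sorted(set(plos) - assured)


# Hypothetical plan for a three-unit pathway through a major.
PLOS = ["PLO1", "PLO2", "PLO3"]
PLAN = [
    Assessment("UNIT1001", "Essay", 2, frozenset({"PLO1", "PLO2"})),
    Assessment("UNIT2001", "Interactive oral", 1, frozenset({"PLO1"})),
    Assessment("UNIT3001", "Capstone exam", 1, frozenset({"PLO1", "PLO3"})),
]

gaps = unassured_outcomes(PLOS, PLAN)
print("PLOs without lane 1 validation:", gaps)
```

In this made-up plan, PLO2 is addressed only by an open (lane 2) task, so the check flags it as needing a secured assessment somewhere in the pathway.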

Examples of integrating program-level assessment into selected units of study

- Use of core or a capstone unit of study to assess PLOs. Whilst this may require some curriculum re-design for some programs, if there are core units in a course or course component, these can include assessment that requires students to integrate knowledge and skills from across multiple units. In the case of a capstone unit in a major, it is appropriate to assess the learning outcomes of the program.

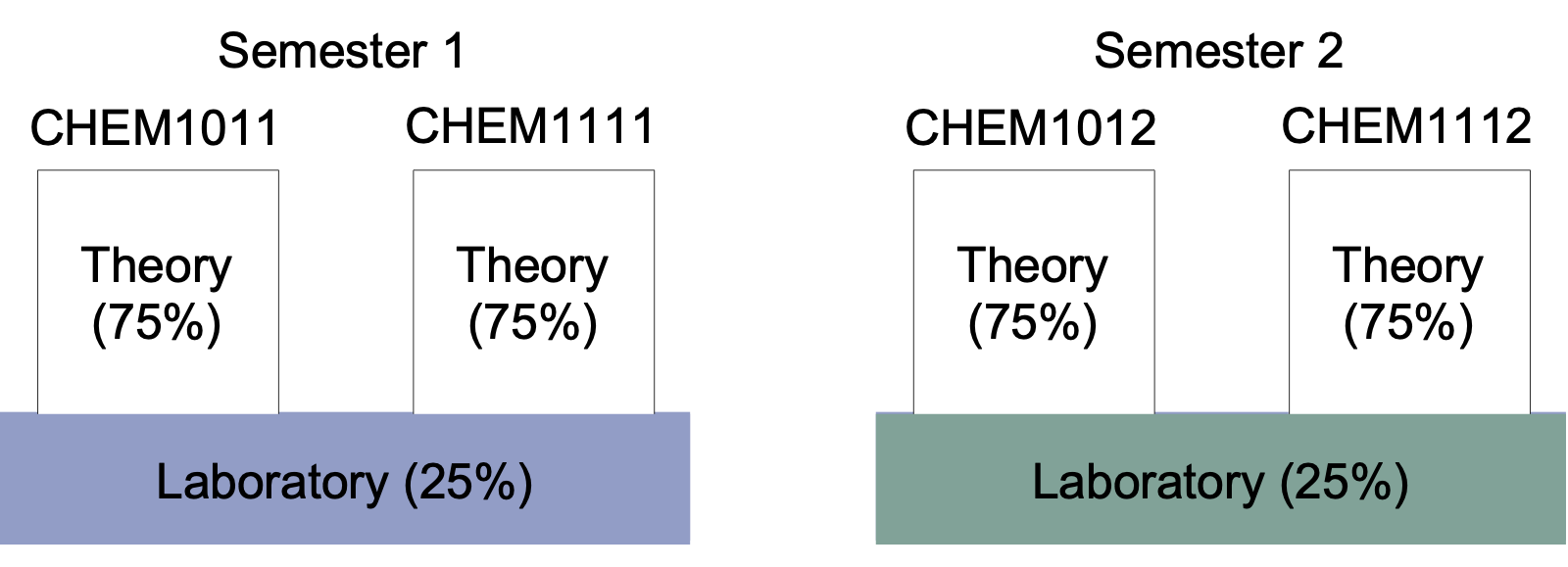

- Use of common assessment tasks across multiple units of study. For example, in first year chemistry, students without knowledge equivalent to HSC Chemistry select the ‘fundamentals’ units (CHEM1011 and CHEM1012) whilst others select the ‘mainstream’ units (CHEM1111 and CHEM1112). The theory sections are different but the laboratory tasks and their assessments are common to both, as illustrated below. The laboratory component is considered a core skill so the laboratory assessments are hurdle tasks.

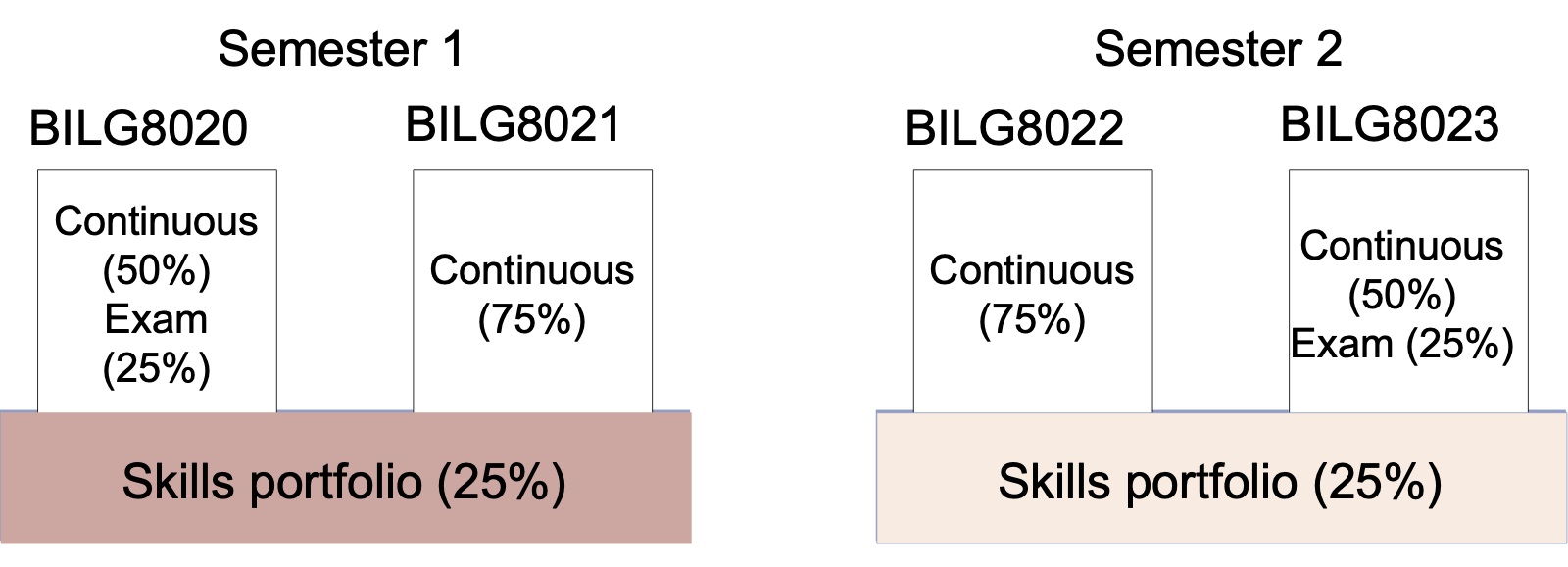

In Biological Sciences at the University of Edinburgh, a similar approach is used in the year 1 and year 2 compulsory courses, with a common personal development and skills portfolio that covers four units but is only assessed twice and makes up 25% of the assessment in each. Note in this example, students take all four units.

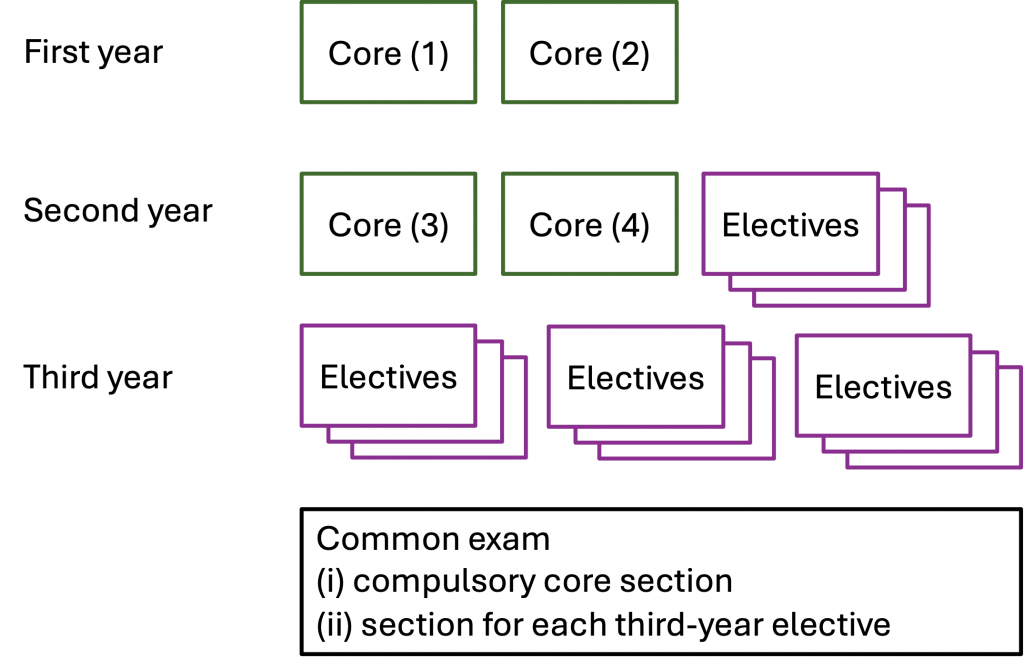

- Use of a common exam across multiple units of study. The approach taken in (2) can be extended to summative validation of knowledge through a common exam across multiple units. Core knowledge and program outcomes are tested through compulsory questions with students then choosing from the remaining sections based on their electives. This approach would act to reduce the number of exams required, ensure the core knowledge of the discipline is tested and encourage students to consolidate their understanding across the program. Such an approach might suit the third year of a major where the core knowledge of the discipline is developed in first and second year. This is illustrated below for a “2 + 3 + 3” major where students take 2 units in first year, take 3 units in second year (including 2 core units) and choose 3 units from a pool in third year. It might also suit a major with a “2 + 2 + 4” structure where students take 2 core units in first and second year and select 4 units from a pool in third year, as illustrated below.

It might, however, also suit a first year program to again reduce the number of exams required and focus assessment on the core outcomes of the degree. Although such an approach might seem radical from the perspective of 2024, it resembles that used a few decades ago at Sydney and in some HSC subjects which have core and elective modules.

- Use of a capstone unit of study. A core unit at the end of a major provides a suitable place to validate the outcomes of the program through secure assessment such as an oral or written exam, a project or placement.

- Use of common learning outcomes in the units of a major. Alongside core knowledge, program learning outcomes should focus on the key skills and methodologies that define the discipline such as critical thinking, creative writing, application of the scientific method and quantitative approaches to problem solving. If these can be equivalently assessed through the examples and the different contexts presented in the electives, then the program level outcomes are assured and variety is maintained. For example, a learning outcome such as “locate, identify, analyse and contextualise materials that provide insight into the past” can be assessed equivalently in a unit on any period of history.

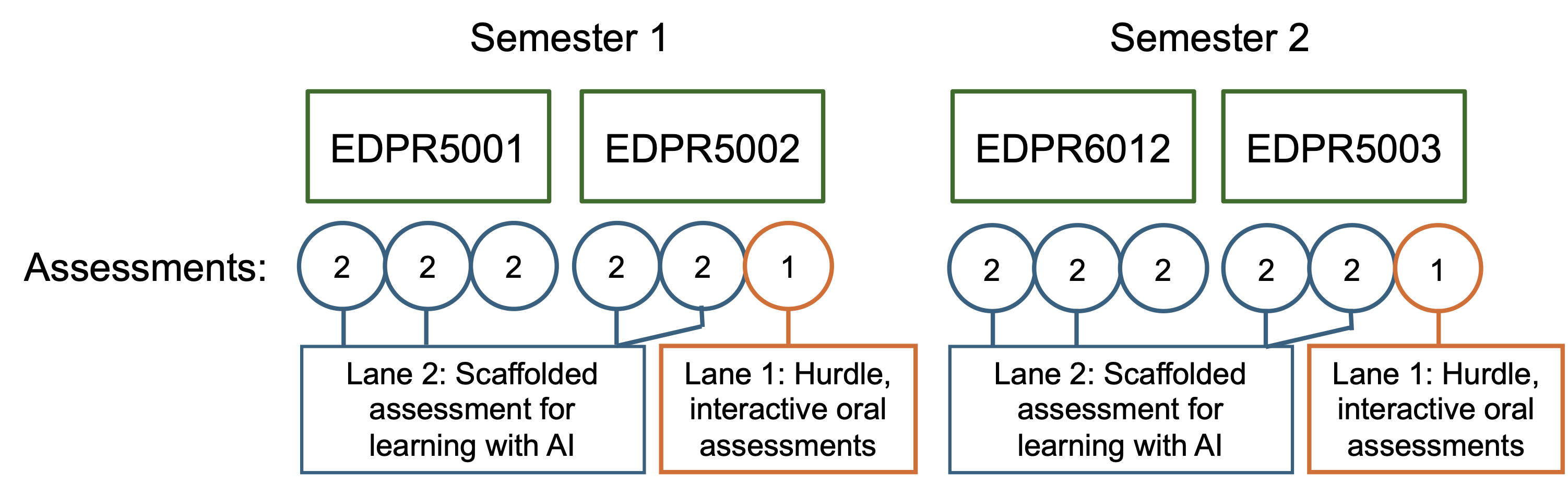

- Combining hurdle tasks and units with no lane 1 assessments. Assurance at the program level leads to the freedom to have units where only assessments for learning (i.e. lane 2) exist. The Graduate Certificate in Educational Studies (Higher Education), a postgraduate qualification aimed at university teachers, consists of 4 units of study with 2 taught each semester. As illustrated below, in 2024, this has been running in a structure with hurdle tasks in 2 of the units to assure learning across the program. The other 2 units have no assessments of learning (i.e. lane 1). Students taking the course take EDPR5001 in semester 1 and EDPR6012 in semester 2. These units award grades but could equally well be set up to be “pass/fail”. The assessment style chosen to assure learning in both EDPR5002 and EDPR5003 is the interactive oral.

- Milestone and stage-gate assessments. Rowena Harper, Deputy Vice-Chancellor (Education) at Edith Cowan University, has recently introduced these related assessment types. Milestones are points at which progress towards PLOs is assessed. They act to either confirm progression or to inform learning support. Milestones could occur within units of study – such as in the core units in (3) above – or once a number of units have been completed. ECU’s stage-gate assessments fulfil a similar function but can be used to restrict progression to the next level of the program and to validate achievement.

Tell me more!

- Frequently asked questions about generative AI at Sydney.

- Rules, access, familiarity, and trust – A practical approach to addressing generative AI in education

- Menus, not traffic lights: A different way to think about AI and assessments

- Embracing the future of assessment at the University of Sydney

- Programme-level assessment: What is it, and why is it important? (Patrick Walsh and Neil Lent, University of Edinburgh)

- Programme-level assessment (University of Reading, 2024)

- CRADLE Seminar Series: Assessment beyond the individual unit/module: What it is, and why it matters more than ever in the age of AI? – recording of the seminar
