Over the past few weeks, we have held a number of roundtables and open forums with staff across the University about how we can productively engage with artificial intelligence at Sydney. This FAQ collates key issues from these discussions and will help you better understand how to approach generative AI this semester. For more information, see our collection of resources on what generative AI is and how it might be useful for teachers and students.

This page was last updated 16 May 2024

Access

Is there a University GPT-4 licence?

GPT-4 is one of the most powerful generative AI models currently available. We recommend using GPT-4 (or a similarly powerful model, such as Claude 3 Opus) rather than older, less powerful models such as GPT-3.5, which is available in the free version of ChatGPT.

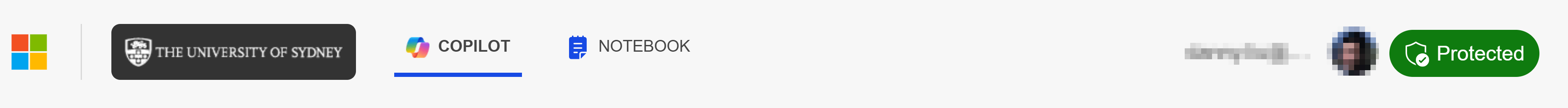

Staff and students at the University of Sydney have access to ‘Microsoft Copilot’ (used to be called Bing Chat) at https://copilot.microsoft.com via Edge or Chrome browsers. Ensure that you are signed into your University account and you will see the University logo in the top left, and a ‘protected’ badge in the top right:

The data security classification (what data can be put into the platform) is ‘protected data’: data that is not generally available to the public. However, because data briefly transits overseas (to the USA), staff and students should not input personal information about themselves or other people, in order to comply with privacy laws. They should also not input commercially sensitive or research-sensitive data, particularly where the research area (e.g. national security) or contractual obligations limit transborder data flows. When using Microsoft Copilot, always use the protected experience described above. University of Sydney staff can view the Knowledge Base article about Copilot for more guidance.

The other option for University staff and students is to use the ‘Vanilla GPT-4’ agent in Cogniti, which provides a more ‘raw’ GPT-4 experience.

How can my students and I access good AI tools?

For text-based generative AI, we recommend accessing GPT-4 through the channels above.

For image generation, University staff, and students in some faculties, have access to Adobe Firefly at https://firefly.adobe.com.

For other forms of generative AI, such as audio and video generation, the University does not yet have centrally licensed access.

The policy and assessment frameworks

The University of Sydney’s guardrails around generative AI

The University has published guardrails around the use of generative AI in education, research, and operations. These are available on the Intranet. Briefly, these guardrails are:

- Do not enter confidential, personal, proprietary or otherwise sensitive information

- Do not rely on the accuracy of outputs

- Openly acknowledge your use of AI (e.g. educators must model best practice by being transparent and clear with students about how these tools are used)

- Check for restrictions and conditions (e.g. for funding agreements, journals, etc.)

- Report security incidents to the Cybersecurity and Privacy teams

- Follow any further guidance specific to your work (e.g. around education, or research)

What is in the policy about generative AI use by students?

Students are permitted to use generative AI for learning purposes, as long as they follow all policies such as the Acceptable Use of ICT Resources Policy 2019 and the Student Charter 2020.

For assessment purposes, the Academic Integrity Policy 2022, which came into force on 20 February 2023, mentions these technologies in three key places.

- Clause 4(9)(2)(j)(i) states that it is an academic integrity breach to inappropriately generate content using artificial intelligence to complete an assessment task.

- Clause 4(13)(1)(i) states that submitting an assessment generated by AI may be considered contract cheating.

- Clause 5(16):

  - (2) mentions that students may only use assistance, including automated writing tools, if the unit of study outline expressly permits it.

  - (5)(b) mentions that students must acknowledge assistance provided when preparing submitted work, including the use of automated writing tools.

This means that, by default, students are not permitted to use generative AI to complete assessments inappropriately. However, as a University, we want to develop our students as ethical citizens and leaders, and it is clear that these technologies will form an important part of our collective future. The policy therefore allows unit coordinators to permit the appropriate use of these technologies, including in assessment. What this looks like will differ depending on the unit, discipline, and other factors. In these situations, coordinators need to make clear to students what counts as appropriate use, and students will need to acknowledge how they used these technologies in preparing their assessments.

Can I use pen and paper exams?

Given the potential for generative AI to create novel text that traditional text-similarity tools cannot detect, there is an understandable concern that these technologies could be used to generate submitted work that does not reflect a student’s own level of competency. Invigilated tests and exams remain a viable way to authenticate student proficiency, as long as they fit within the assessment and feedback framework. Note, however, that they have limited authenticity and inclusivity.

In the medium-to-long term, we need to move to a place where we simultaneously have assessments that assure learning (some of these may well be pen-and-paper exams, but ideally they will also include other, more authentic secured assessments) and assessments that motivate and inspire learning. For more information, see the article ‘What to do about assessments if we can’t out-design or out-run AI?’

What do I do about my assessments and teaching?

How do I know if my assessments are affected?

Consider trying out generative AI tools to see what sort of output they produce for your assessment. For written assessments, you might find it informative to paste the assessment prompt you usually give students into ChatGPT. This prompt, or follow-up prompts, can include some background information about the assessment, the desired learning outcomes, and the question(s) themselves. Have the tool generate a few responses to the prompt, and consider the quality of its responses.

What do my students need to know?

We strongly recommend speaking with your students about generative AI. We also strongly recommend that you set clear guidelines about whether and how these technologies might be appropriately used to support their learning in your unit. Students also need to be aware of the Academic Integrity Policy 2022, alongside their other responsibilities as students, such as those found in the Student Charter 2020.

How do I explain generative AI to my students?

AI tools will become part of every workplace, and we want our students to master the technology. They will need to learn how to build on work produced by AI.

Our students have worked with us to develop a fantastic resource, AI in Education, which describes what generative AI is, how to use it safely and responsibly, and a wide variety of prompts that help students to learn better. Please do share this with your own students using this short link: https://bit.ly/students-ai


What should I do about my assessments?

The article ‘What to do about assessments if we can’t out-design or out-run AI?’ is a good place to start. We will need to move to a place where we simultaneously have assessments that assure learning (some of these may well be pen-and-paper exams, but ideally they will also include other, more authentic secured assessments) and assessments that motivate and inspire learning.

How can I design an assessment that is AI-proof?

The short answer is, you can’t. Given the linguistic and other capabilities of generative AI, and the rate at which it is developing, it is not possible to design an unsecured assessment that is completely ‘AI-proof’. Strategies such as having students refer to class discussions or contemporary sources, or include details of their own environments or experiences, will help somewhat, but students could still generate text using AI and simply insert these details manually. The article linked in the previous answer offers alternative suggestions for approaching assessment when generative AI is widely available.

Can I use one of those AI text detectors if I suspect students are using ChatGPT inappropriately?

No. There are significant unresolved privacy issues with these third-party tools. If you suspect that a student may have used generative AI to complete an assessment task inappropriately, notify the unit coordinator, who will lodge a case with the Office of Educational Integrity. The case will then be reviewed independently, using an approach similar to that taken when investigating contract cheating.

Are there equity issues around using these tools?

We cannot mandate the use of generative AI in assessments unless the University guarantees and provisions access for students and staff. ChatGPT, for example, is likely to continue offering a free tier alongside its paid subscription, although the free tier does not guarantee access and may become unavailable unexpectedly during peak times. If you want students to have the option of critiquing or otherwise drawing on ChatGPT output in an assessment, consider generating some outputs yourself and making them available on Canvas so that students don’t need to generate them themselves.

Guidelines to provide students around use and acknowledgement of generative AI

Do you have any examples of guidelines for students that I can use in my units?

The first port of call should be the Academic Integrity Policy 2022. Following this, here is a set of points that you may wish to adapt for your own contexts.

- You are permitted to use generative AI to help you <insert learning activity and benefit>. For example, you may want to prompt the tool to <provide some specific examples>.

- If you use these tools, you must include a short footnote explaining what you used the tool for, and the prompts that you used. Such a footnote is not included in the word count.

- Don’t post confidential, private, personal, or otherwise sensitive information into these tools. Their privacy policies can be a bit murky.

- Your assessment submission must not be taken directly from the output of these tools.

- If you use these tools, you must be aware of their limitations, biases, and propensity for fabrication.

- Ultimately, you are 100% responsible for your assessment submission.

Some staff have elected to use a shorter form statement, such as:

ChatGPT and similar generative artificial intelligence technologies have many potential uses and functions, not all of which are prohibited. Use of such technology is governed by University policy and any specific instructions for a given assessment. Students must ensure they are familiar with these policies and requirements. As a typical example, an allowed use of these technologies might be to help improve the grammar of your draft assignment. A disallowed use of these technologies would be to draft the entire content of a report that you are required to write yourself.

The Syllabus Resources from the Sentient Syllabus Project also contain some text that you might consider for inspiration.

How can students ‘reference’ their use of AI?

Because the output of generative AI is not typically a source that another person can reliably locate themselves, it is probably not advisable to recommend ‘referencing’ or ‘citing’ these outputs in the same way as other sources such as journal articles or webpages. Instead, students should ‘acknowledge’ their use of generative AI. We have provided guidance for students and staff on the AI in Education site.

Implications of different AI technologies

Is it just ChatGPT that I need to know about?

ChatGPT is simply the tool that has received the most attention (having gained over 100 million active users within two months of its release). However, there is a rapidly growing set of AI-enabled tools available online for generating all forms of writing, as well as multimedia artefacts including images, video, music, vocals, and more. Not all of these will be relevant to your assessment(s), but it is worth building awareness of the AI tools your students may be using. It is also worth noting that the quality of AI tools is improving rapidly, and current limitations (such as factual accuracy and the ability to reference sources) will continue to be overcome.

I’ve heard that ChatGPT takes your data. What can I do about this?

You can request that OpenAI, the company behind ChatGPT, not use your inputs to train its AI by opting out of having your data used. It would be useful to inform your students about this as well.

Working together to productively and responsibly engage with generative AI in education

Where can I find out more about this?

We recently held two all-staff forums on this topic, and University of Sydney staff can access the recording of one of them. We have also held two illuminating student panels offering insights into how generative AI might affect students’ assessments, feedback, study, groupwork, and future. We also have a set of resources available on AI and education at Sydney.

What are some examples of how academics are using AI in their teaching and assessment?

Check out these vignettes of how academics at Sydney are helping students productively and responsibly engage with AI in their studies:

- How Sydney academics are using generative AI this semester in assessments

- How Sydney academics are using generative AI this semester in class

I have an example of how I’m using generative AI in my unit

We would love to hear about this. Please email Danny Liu.

Who can I talk to for more advice?

If you’d like to discuss assessment and teaching design, please book a design consultation with Educational Innovation at a time that suits you: https://bit.ly/ei-consults. For matters of policy, please consult your Associate Dean Education. If you would like Educational Innovation to speak to your school, department, or Faculty, please get in touch with Danny Liu.