Our approach to ChatGPT and other generative artificial intelligence (AI) at the University of Sydney is one of productive and ethical engagement. Whilst recognising the need to safeguard academic integrity, we want to help prepare students to be ethical leaders in a future where AI provides new and exciting possibilities.

In the second part of this series, we spoke with academics who are experimenting with ChatGPT in assessments. From using it as an early research tool to improving student writing, and from supporting creativity to completely wrapping an assignment around ChatGPT output, these examples will inspire you to consider how we can tweak or change assessments to help our students prepare for a future where AI will be pervasive.

As a research partner

Jan Slapeta, a professor in the Sydney School of Veterinary Science, uses ChatGPT in capstone Research & Enquiry units where students work on a research project of their choosing. “While researching their area of interest, I am encouraging students to use large language models, such as ChatGPT, to help with enquiry such as summarising research and creating outlines,” he says. Jan considers it another way for students to get started on answering their research questions:

Working with ChatGPT, you have a relentless assistant that you can fire prompts at.

Jan sees AI as an integral part of the future of the discipline and more broadly. “Simply one has to,” he says. “Reading how AI or augmented reality is saving lives and improving decision making in clinical medicine in recent years – this is where the future is heading.” He is also realistic about how students are already using it. “I have been in a prac today and one student was ChatGPT-ing during the prac. The tool is here, no need to pretend it is not.”

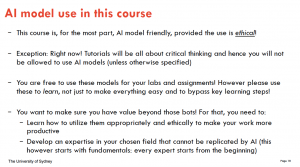

To help guide students’ use of these generative AI tools, he leads a brief discussion using a few simple slides (which he is happy to share) that set out ‘in-house’ rules aligned with the University’s Academic Integrity Policy 2022. Through these rules, he reminds students of the importance of writing good prompts, that ChatGPT can make things up, and that they must acknowledge and specify its use and take responsibility for its outputs.

An assistant in analysing texts

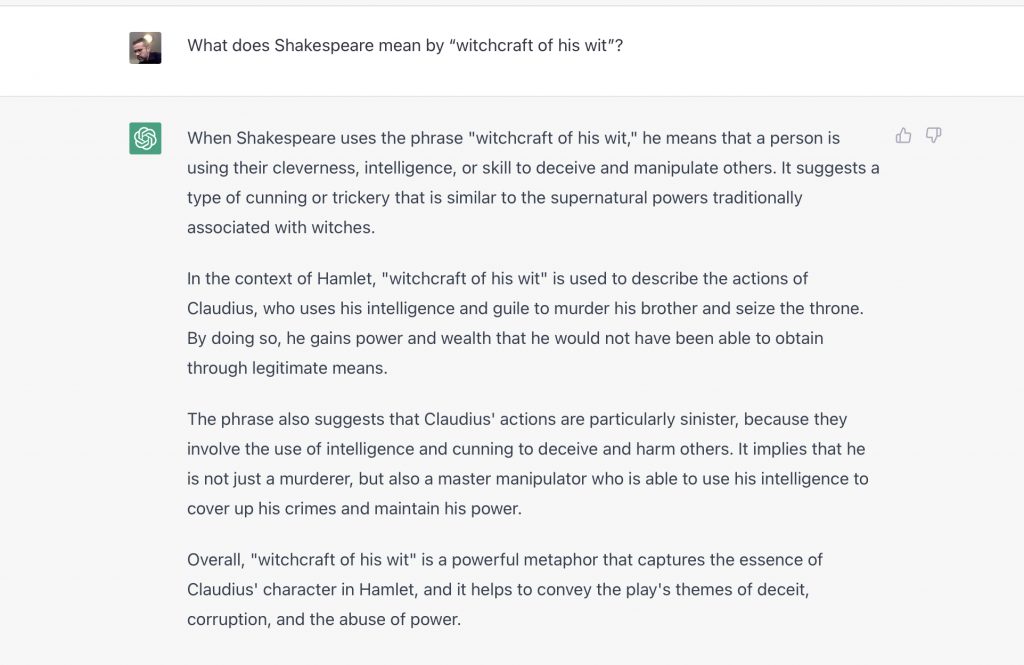

Huw Griffiths, an associate professor in the Discipline of English, coordinates a third-year undergraduate unit on Shakespeare. Here, he invites students to see how ChatGPT can help with – or hinder – textual analysis. “To prepare my students, I’ve compared ChatGPT’s responses to what a specific metaphor (such as ‘resolve itself into a dew’ from Hamlet) might mean to a literary critic’s investigation of the same language,” he says. “In students’ work, they choose their own metaphor and consider the affordances of ChatGPT: what it gets right but also what it might miss out or even get wrong.” Students must also draw on references from reliable sources.

This use of ChatGPT aligns with the learning outcomes for the unit, which include developing an understanding of key aspects of Shakespeare’s dramatic language, and producing evidence-based arguments and independent interpretations of the language. Huw sees AI as another tool that helps students hone their analytical skills. “I think that it will enable them to see that ChatGPT (and other forms of generative AI) are just one form of knowledge production amongst many,” he says. “They will be able to reflect on the distinctive contributions of ChatGPT but also on the distinctive contributions of individual literary critics (including themselves), understanding a little more about how, in different forms of knowledge production, evidence might relate to argument in distinct ways.”

His students are engaging eagerly with this assignment and asking about the nuances of ChatGPT’s responses. Like other academics, Huw is deeply conscious of his responsibility to help students engage productively and ethically with AI. “Generative AI is going to be part of the lives of all of our students,” he says. “There is no job that they will go on to do – teacher; public servant; lawyer; writer; business analyst; medic etc etc etc – that will not find some use for ChatGPT or something similar.”

It is, I think, incumbent on us as educators to start thinking critically and philosophically with our students about its utility, but also about what it means for how knowledge is produced and disseminated.

Supporting creativity and stakeholder interactions

Hamish Fernando, a lecturer in the Faculty of Engineering, encourages students to use ChatGPT in his second-year units on AI, Data, and Society in Health. Here, ChatGPT helps students generate creative ideas for assignments and overcome writer’s block. Hamish is careful to provide students with guardrails, though. “I have given clear guidelines on how to use AI to maximise its benefit, as well as weekly goalposts for them to reach over the course of their assignment.”

Part of one of the assessments requires students to reach out to stakeholders from healthcare organisations to conduct an interview. Hamish recognises that many students in his cohort have no experience with this kind of activity, so he encourages them to “use ChatGPT to assist with composing interview request emails and designing interview questions for specific stakeholders”. The reason, he says, is that this helps students build AI literacy for the future world of work. “I have made it clear that they need to maximise their productivity using AI, because in future, it is likely that most people/organisations would be using them. If they want to remain competitive, they need to become savvy with AI use and maximise the benefits they could gain from these.”

Hamish also encourages students to use it as a study partner for the programming components of his units, because student abilities vary greatly. Early knocks to students’ confidence can have lingering effects on their engagement, especially if they can’t keep up with peers who have more experience. He provides guidelines for engaging with generative AI to help students develop a strong moral compass for using these tools:

I want students to learn how best to use AI in an ethical manner, in order to enhance their productivity and also for them to compete successfully in future job markets in which AI would likely be commonplace.

However, he also understands that maintaining critical thinking skills is essential, so he has made certain components of his course, such as tutorials, “ChatGPT-free”. The tutorials involve critical and creative thinking activities that are specifically designed for collaboration among student groups, not with AI.

Unpacking ideas and increasing exposure to literature

In the postgraduate unit for in-service university-level educators that I (Danny) coordinate with Sam Clarke in the Sydney School of Education and Social Work, we encourage the use of different types of AI to help students unpack new ideas and explore unfamiliar literature. Our students are mostly well-versed in academic writing and literature searching in their own disciplines, but the discipline of education presents a new challenge. For all of our assignments, we demonstrate in class how tools like ChatGPT and Bing Chat can help students explore ideas around core topics.

For example, students might ask ChatGPT, “Give me 10 reasons why students are disengaged in my large cohort lectures in introductory chemistry with a diverse non-major cohort”, and then draw inspiration from a few of the ideas generated. We also demonstrate tools such as Research Rabbit and Elicit, which are specifically designed to help people explore published papers and other resources. These help our students learn new AI-enabled methods of literature searching, which they can use in their assignments when they need to summarise existing literature on a concept and integrate scholarship to support their arguments.

Knowing how to engage productively with different AI tools will help our students more deeply interrogate issues and scholarship, while saving them time now and into the future.

We provide firm guidelines (see an example here) to support students’ engagement with AI, offering suggestions on why they might use AI, how to acknowledge its use, warnings about its limitations, and guardrails around using the outputs of these tools directly in their submissions.

Improving student writing and language learning

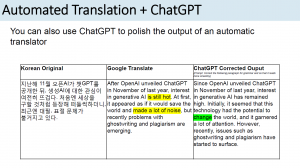

Benoit Berthelier, a lecturer in the Department of Korean Studies, encourages students to use ChatGPT in his third-year Contemporary Korean Society and Culture unit to improve their writing. “My students come from very different, often non-humanities backgrounds, and don’t have much experience writing essays, so many struggle with writing. They also make heavy use of automated translation,” he says.

He spends some class time explaining, in simple terms, how large language models work, what their limitations are and, importantly, how students might use them. Benoit has generously provided his slide deck for Teaching@Sydney readers, and it includes great examples of how students might use ChatGPT to improve something they have already written, draft a paragraph from scattered thoughts, or act as a basic translator. “I feel like it can help students do better academically and later professionally. I think it’s a useful skill for students to have even if they don’t have a technical background. I’ve been working with large language models for my research for a few years now so I can connect our use of ChatGPT for writing to my research topics and other issues we discuss in class,” he says.

Assessing the process of learning through improving ChatGPT’s outputs

Martin Brown, a senior lecturer in the Faculty of Medicine and Health and coordinator of a Contemporary Medical Challenges unit, is experimenting with an assignment that is fully wrapped around ChatGPT. The unit’s learning outcomes include explaining and communicating medical science research and applying evidence from different sources, and his ‘ChatGPT Essay’ assignment fits these perfectly. “We ask our students to come up with a prompt question consistent with the name of the unit (Contemporary Medical Challenges) and get a response from ChatGPT of around 500 words. We then ask them to reflect on it (What is correct? What is incorrect? What don’t you know is correct or incorrect? What should you look up elsewhere to verify? What should you ask ChatGPT next?),” he says.

After critically evaluating ChatGPT’s response, students edit it in tracked changes mode and submit the prompt question, ChatGPT’s response, and their improved response. This is an interesting example of assessing the process rather than the product: by developing a relevant prompt and improving ChatGPT’s response, students demonstrate their learning around a contemporary medical challenge. The rubric, which Martin has generously provided along with the assignment description, evaluates students on how relevant and considered their prompt is, and how well-researched and insightful their edits are. These components make students’ thinking processes visible and encourage critical engagement with the unit concepts.

“We see ChatGPT as a useful way of collating and summarising extant data on a topic,” Martin says.

As Pandora’s Box is open, we may as well get used to using it for what it’s good for, and honing in on what humans are good for, which we hope involves sentience, judgement and informed, creative speculation about the future.

Generating and analysing ChatGPT essays to discover what it means to be human

Ben Miller, a lecturer in the Faculty of Arts and Social Sciences, coordinates a large first-year undergraduate unit on Writing and Rhetoric. This semester, he has designed a new groupwork task where students will analyse a ChatGPT-generated essay. Students can choose whether to generate the essay themselves, or use one that Ben has provided. The unit’s learning outcomes include demonstrating how to construct effective arguments for multicultural audiences, communicating competently, and editing the work of others effectively.

The new assignment addresses these learning outcomes well: “Their analysis will focus on three things: questioning authorship regarding AI-generated essays; evaluating whether AI helps students achieve high levels of critical thinking (such as evaluation or argumentation); and, recommending to peers how ChatGPT can be used to achieve the goals of higher education,” Ben says. The assessment task does this by encouraging peer conversations about what makes an effective essay, thereby developing students’ skills in evaluation and argumentation. “But there are also broader outcomes that relate to digital and AI literacy. I hope that students will gain a greater understanding of the ethics of using AI, and that students will discover skills they have that exceed the potential of AI.”

… students have commented that they are looking forward to thinking about how to use AI, rather than being told they simply can’t or shouldn’t use it.

Ben’s students have been excited to engage with this cutting-edge technology and approach to learning. Student groups will present a critical reflection on their opinions about authorship of AI-augmented pieces, the development of critical thinking in themselves, and recommendations about how AI writing tools may help students learn into the future. He has provided the assessment task and a rubric for Teaching@Sydney readers to see. The rubric, which covers a final reflective statement, encourages students to consider how AI can support writers while integrating scholarly sources.

Like the others, Ben sees ChatGPT as part of the landscape in which students will find themselves in the future. “AI such as ChatGPT will inevitably be a part of the professional and everyday lives of students and graduates,” he says. “If I can frame discussions about, and activities with, AI in ways that deepen my students’ understanding of critical thinking and writing, then I am helping students understand what skills they take into the workplace that can’t be replicated by AI.”

Beyond vocational outcomes, the conversation about how students can exceed the capacity of AI helps us understand what it means to be human – a core concept in the study of humanities!

As we move towards a future where AI is pervasive, focusing on what humans can uniquely do will be a key learning goal for our assessments. Doing so will encourage our students at Sydney to develop into the ethical and creative leaders of tomorrow.

Tell me more!

- Check out our curated set of resources for artificial intelligence in education at Sydney

- See how our academics are using generative AI in class

- Do you have an example of use that you would like to share? Please get in touch with [email protected]
