The internet has exploded again, this time with the release of GPT-4 on Pi Day (14 March) 2023. But what is this next-generation AI, and what impact might it have on higher education?

Just tell me how to get my hands on it!

GPT-4 is a much-improved model compared to GPT-3.5, the large language model that powers the now famous ChatGPT. OpenAI, the company behind ChatGPT, GPT-3.5, and now GPT-4, calls GPT-4 a ‘large multimodal model’ because it can accept both image and text inputs and respond in text. Image input is not yet publicly available.

There are currently two ways to send text to GPT-4 and see its improved responses. The first is a ChatGPT Plus subscription, which costs USD 20 per month. The free option is Bing Chat, Microsoft’s AI-powered chatbot released on 7 February 2023, which was rumoured (and has now been confirmed) to run on GPT-4. Bing Chat is still in limited public release, with a waiting list for access (it took me about two weeks to get in). It also has live access to the internet, so it is less prone to hallucination (the tendency of language models to make things up).

What’s so special about GPT-4 compared to previous versions?

Alongside GPT-4, OpenAI released a technical report stating that GPT-4 is “less capable than humans in many real-world scenarios, [but] exhibits human-level performance on various professional and academic benchmarks”. Perhaps most strikingly, GPT-4 appears to be much better at reasoning through complex scenarios. The report claims that GPT-4 scores in the top decile of human test-takers on a simulated bar exam, whereas GPT-3.5 (the model behind the free version of ChatGPT) scores in the bottom decile. OpenAI’s GPT-4 release webinar showed GPT-4 calculating tax liabilities from a complex set of tax legislation and a scenario (skip to 19:03 in the recording), demonstrating its ability to reason through complex tasks.

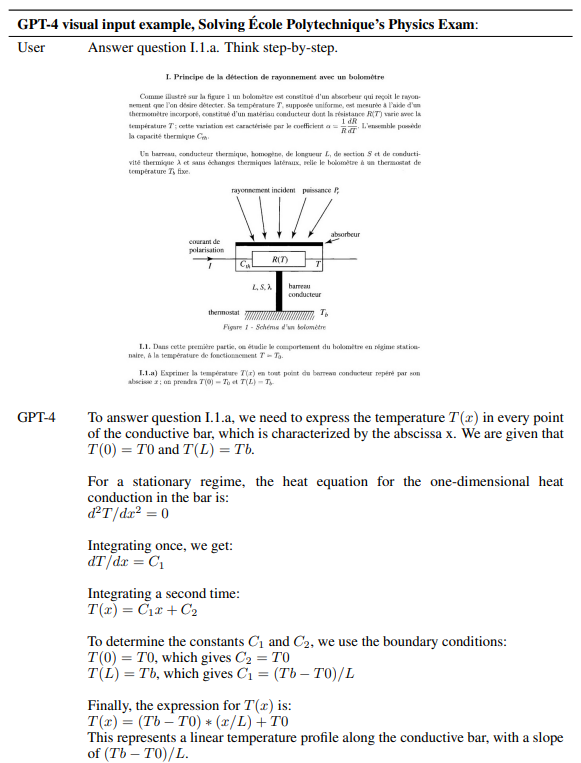

Because GPT-4 takes multimodal input, the report states that it scored in the top percentile in a Biology Olympiad exam which has many image-based questions. The report also has striking examples of GPT-4 answering questions that involved interpretation of complex graphical inputs such as charts, and a university-level physics exam question on heat transfer in a conductive bar. OpenAI demonstrated how GPT-4 could take a photograph of a hand-drawn app interface and turn it into functional code within seconds (skip to 16:17 in the webinar recording).

GPT-4 can also process up to 32,000 ‘tokens’ at once (a token is about 0.75 of a word in English, so this is about 24,000 words, or roughly 50 pages of text), compared with about 4,000 tokens for GPT-3.5. For now, this long-context version is available only to programmers through the GPT-4 application programming interface (API). It means GPT-4 can take in and produce text roughly the length of a short dissertation, although the limit covers the input and output combined. GPT-4 also has improved ‘steerability’: users and programmers can ask it to assume particular roles. OpenAI’s GPT-4 launch webpage has a striking example of GPT-4 being steered to act as a Socratic tutor, guiding a student through a problem with questions rather than providing the answer.
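To make ‘steerability’ and the token rule of thumb a little more concrete, here is a minimal Python sketch. It builds a request body modelled on OpenAI’s Chat Completions format, in which a `system` message sets the role the model should play and a `user` message carries the student’s question. The tutor instructions, the example question, and the helper names `estimate_tokens` and `build_socratic_request` are hypothetical, written for illustration rather than taken from OpenAI’s own example. The token count is only a rough estimate using the 0.75 words-per-token figure above, not a real tokenizer. The sketch only prints the request; actually sending it would need an API key and a call to OpenAI’s API, which is not shown.

```python
import json

# Rule of thumb quoted in the text: one token is roughly 0.75 of an English word.
WORDS_PER_TOKEN = 0.75
# Long-context GPT-4 window as quoted in the text (about 32,000 tokens).
CONTEXT_WINDOW_TOKENS = 32_000


def estimate_tokens(text: str) -> int:
    """Rough token estimate from a whitespace word count; a real tokenizer will differ."""
    words = len(text.split())
    return round(words / WORDS_PER_TOKEN)


def build_socratic_request(question: str, model: str = "gpt-4") -> dict:
    """Hypothetical helper: a Chat Completions-style body steered by a system message."""
    system_prompt = (
        "You are a Socratic tutor. Never give the student the answer. "
        "Reply with one guiding question that helps them take the next step."
    )
    return {
        "model": model,
        "messages": [
            # The system message sets the role the model should play.
            {"role": "system", "content": system_prompt},
            # The user message carries the student's actual question.
            {"role": "user", "content": question},
        ],
    }


request = build_socratic_request("How do I solve 3x + 2 = 11?")
prompt_text = " ".join(message["content"] for message in request["messages"])

print(json.dumps(request, indent=2))
print(f"Estimated prompt tokens: {estimate_tokens(prompt_text)} of {CONTEXT_WINDOW_TOKENS}")
# 32,000 tokens x 0.75 words per token = 24,000 words, roughly 50 pages.
print(f"Window in words: about {CONTEXT_WINDOW_TOKENS * WORDS_PER_TOKEN:,.0f}")
```

Keeping the tutoring instructions in the system message, separate from the student’s question, means the same role can be reused for every question a student asks.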

What can GPT-4 actually do in practice?

In the few hours since its public release, people have already used it to code web applications from scratch, assist with drug discovery, explain financial transactions, and generate ‘one-click lawsuits’. On the academic side, GPT-4 is being integrated into Elicit, an AI research assistant that rapidly analyses literature and helps you look across papers to find shared concepts and answer your research questions. Khan Academy is developing Khanmigo, an AI-powered “tutor for learners” that uses GPT-4 to guide each student personally through questions. Duolingo, the language learning app, is using GPT-4 to let learners practise conversation and get explanations of their mistakes. AI may now be advanced enough to start addressing Benjamin Bloom’s ‘2 sigma problem’: one-to-one tutoring can lift student performance by about two standard deviations, but it has been too costly to provide to every student. AI tutors might make that kind of individualised teaching possible at scale.
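For readers unfamiliar with the ‘2 sigma’ figure, the short Python calculation below shows what a two-standard-deviation gain means in percentile terms. It assumes, purely for illustration, that class results are normally distributed; under that assumption, a student at the class average (the 50th percentile) who improves by two standard deviations ends up ahead of roughly 98% of a conventionally taught class. The function name `normal_cdf` is our own.

```python
import math


def normal_cdf(z: float) -> float:
    """Standard normal cumulative distribution function, computed via math.erf."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Assumption: results are normally distributed, so the class average sits at z = 0.
average_z = 0.0
# Bloom's reported effect of one-to-one tutoring: about two standard deviations.
tutoring_gain_sd = 2.0

percentile = 100 * normal_cdf(average_z + tutoring_gain_sd)
print(f"A tutored student who started at the average now outperforms about {percentile:.0f}% of the class")
```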

More immediately, Ethan Mollick, a professor at the Wharton School, has been using GPT-4 (through Bing Chat) for a few weeks and has shown that it can be creative, drawing meaningful connections between disparate ideas. Mollick also shows that GPT-4 can apply theory to practice and generate novel insights across fields such as marketing, research, academia, and consulting. OpenAI’s GPT-4 technical report states that GPT-4 answered multiple-choice questions spanning 57 subjects (the MMLU benchmark) with 86.4% accuracy.

What are its limitations?

OpenAI is careful to point out GPT-4’s limitations, stating in its technical report that “it is not fully reliable (e.g. can suffer from ‘hallucinations’), has a limited context window, and does not learn from experience. Care should be taken when using the outputs of GPT-4, particularly in contexts where reliability is important”. That said, OpenAI reports that GPT-4 scores 40% higher than GPT-3.5 on its internal adversarial factuality evaluations. Like GPT-3.5, GPT-4 was trained mostly on web text and other material from before September 2021, so it lacks knowledge of later events. Bing Chat (powered by GPT-4), however, is connected to the internet and does not have this limitation, which seems to reduce its propensity to hallucinate.

GPT-4’s developers have also implemented safety measures to prevent it from generating harmful responses. The GPT-4 system card describes a number of mitigations that stop the AI from responding to prompts designed to elicit biased, offensive, criminal, unsafe, or otherwise inappropriate content. (Incidentally, pages 15 and 16 of the system card have somewhat terrifying examples of what the AI was capable of when allowed to interact with the real world.)

One non-functional limitation of GPT-4 is the lack of transparency about how it was built and trained. OpenAI states in its technical report that “[g]iven both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar”.

What does this all mean for higher education?

Honestly, we don’t have all the answers yet. It is clear, however, that generative AI is advancing at an incredible pace (there are rumours that GPT-5 is already being trained) and will transform the world of work in ways we cannot yet predict. Sydney’s fundamental approach of productive and responsible engagement with AI in learning, teaching, and assessment remains unchanged, and conversations with students have reinforced the need for it.

In the short to medium term, the increased ability of GPT-4 (and Bing Chat, and the other AI tools that will inevitably follow) to be creative, solve problems, generate hypotheticals, interpret images, and draw information from the internet (as Bing Chat can) makes some of our existing advice outdated less than a month after we gave it. Reworking written assignments to focus on creative thinking and problem solving, or to refer to contemporary sources and events, will no longer necessarily make them AI-proof.

Most of our previous advice for assessment in the age of generative AI has centred on building students’ AI literacy, using AI to personalise assessment tasks, trying multimodal assessments, and focusing on the process rather than the product. Thankfully, this advice still applies after the release of GPT-4. Indeed, a number of Sydney academics are integrating AI into assessments in creative ways, leveraging AI’s abilities to summarise, suggest, search, and save time.

GPT-4’s release perhaps puts more traditional assessments at greater risk. It should prompt us to consider more urgently how we can help students engage productively and responsibly with AI, and how we can update our assessment practices to refocus on the process and joy of learning.

Tell me more!

- Check out our curated resources for engaging with AI at Sydney
