Generative artificial intelligence (AI) tools such as ChatGPT are powerful aids for writing and analysis. As we come to understand their potential for improving teaching and learning, several principles are emerging. The National AI Ethics Principles include requirements for (i) transparency and responsible disclosure about the use of AI, (ii) upholding privacy rights and data protection, and (iii) human accountability for, and oversight of, any AI-assisted outcomes.

The potential of AI to improve the quality and timeliness of feedback for students is clear, but we must also consider whether any such use falls within our existing policies and is consistent with these emerging principles. Pausing to take stock now will help mitigate possible distrust among staff and students as we continue to develop our understanding of the reliability, desirability and ethics of using AI for interactions as fundamental to the student-educator relationship as grading and providing guidance through feedback. Following conversations with educators, students, and AI education and policy experts in our working groups and governance frameworks, the University Executive Education Committee has agreed the following guidelines, which apply University-wide, effective immediately.

- All use of AI by staff in teaching, learning, and assessment must be clearly and transparently communicated to students.

- Any submission of student work to AI for the purpose of providing feedback or formative marks/grades must:

    - Be made only to third-party technologies that have been approved through our internal governance framework*;

    - Be explicitly communicated to students in writing. Students must give informed consent, which must be recorded, and any real or perceived coercion must be avoided. If students decline, this decision must have no impact on the determination of their grades or the quality of their feedback, and this must be clearly communicated to students before they decide;

    - Comply with all policies on privacy and data protection, including security around research or commercially sensitive work; and

    - Where possible, not be retained by the AI tool provider beyond what is necessary to provide the AI services.

- For assessments that count towards the final grade (‘summative assessment’):

    - Marks and grades must not be derived or determined using AI at any stage of these assessments;

    - AI must not be used to produce the first instance of feedback comments; this must be determined by human markers. AI can assist in improving feedback determined or written by markers (e.g. correcting grammar or spelling errors, refining wording), or can suggest additional feedback, provided the tool is an approved technology*; and

    - Human markers are 100% responsible for the accuracy, validity, and quality of all marks, grades, and feedback.

- For assessments that do not count towards the final grade (‘formative assessment’):

    - AI can be used to generate marks, grades, and feedback, provided this is clearly communicated to students and the tool is an approved technology*; and

    - AI-generated marks, grades, and feedback must be regularly reviewed by human markers to ensure validity, fairness, and beneficence.

* At Sydney, learning technologies must comply with the Learning and Teaching Policy 2019 (section 24), which requires that technologies used for assessment have the approval of the Deputy Vice-Chancellor (Education). The current list of approved technologies is available on the intranet. Third-party technologies for education, including generative AI technologies, are assessed for approval by the eTools review committee.

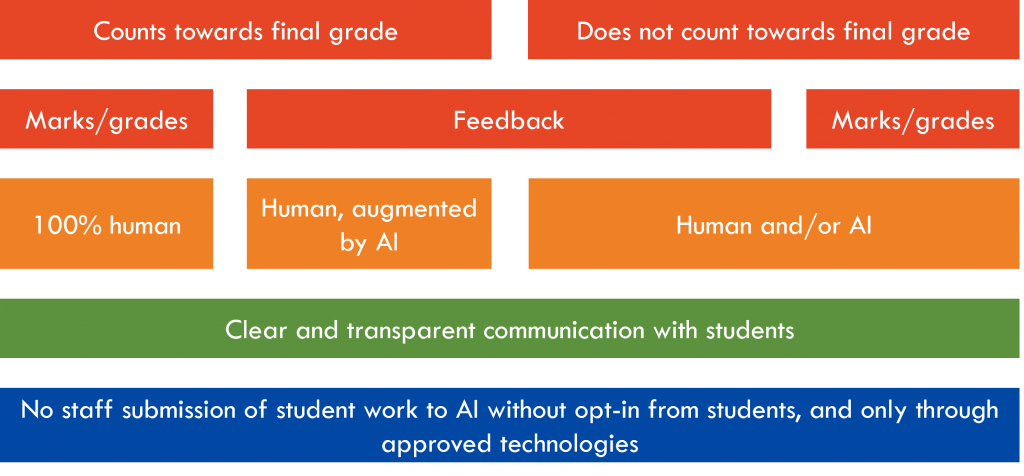

This handy graphic summarises the above guidelines and is designed to be read vertically.

As we develop our understanding of how AI can and should be used, and as AI tools become integral parts of our technology ecosystem, these guidelines will evolve and may ultimately become redundant. However, modelling in our own use of AI the behaviour we expect from students is a principle we must continue to uphold. The student members of the AI in Education Working Group stressed how essential this is to maintaining trust and integrity. They also noted the importance of educators modelling best practice when acknowledging and citing resources, such as images, in teaching materials.
