Generative artificial intelligence (AI) tools like ChatGPT are generative in that they create something new: they use machine learning algorithms to produce outputs that resemble the material they were trained on without repeating it exactly, so each output is new rather than a copy. This capability has been a hot topic in higher education, with many discussions focused on the responsible use of AI by students. However, the potential for AI to streamline tasks for teaching staff and enhance the learning process is equally compelling, particularly in helping us to implement early feedback tasks as outlined in the support for students policy.

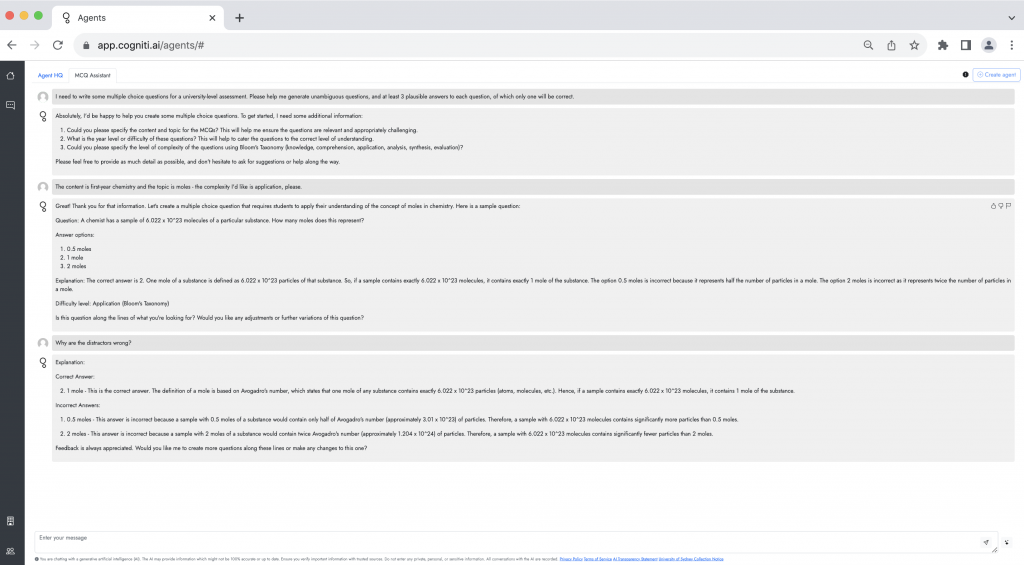

Cogniti, a generative AI tool developed at the University of Sydney, is powered by GPT-4 but keeps content away from OpenAI. Sydney educators are investigating ways of using this tool to support teaching and learning, freeing up more time for teachers to focus on their students. Knowing the significant time cost of creating multiple choice questions, we have used Cogniti to build a multiple choice question (MCQ) quiz assistant that has been trained on principles of writing high quality multiple choice questions (such as giving meaningful feedback on common misconceptions, and expanding on why correct answers are correct).

Our assistant will help you:

- Reduce the time spent writing good multiple choice questions

- Consistently apply principles of writing multiple choice questions

- Create a variety of multiple choice questions for your question bank

This in turn supports students to:

- Retain their learning

- Recall information that they have previously forgotten

- Transfer their knowledge to new problems

While the MCQ assistant in Cogniti has been trained on principles of writing multiple choice questions, your critical thinking skills and knowledge are indispensable in scrutinising the AI-generated outputs. This scrutiny ensures that the final product is pedagogically sound and treats the subject matter accurately. Human accountability and oversight for AI-assisted outcomes are important, as AI tools can be very good at sounding authoritative even when they’re wrong.

Using the MCQ assistant

Begin by telling the MCQ assistant details about the content and topic of your course material that you wish to test. As a general principle, experiment with different prompts and vary the level of Bloom’s taxonomy of learning outcomes when asking for questions.

When prompting the MCQ assistant, it’s helpful to focus on one concept or subtopic at a time. This focus primes the working memory of the AI for that specific area. You can also ask the MCQ assistant for more than one question on the same concept to expand a question bank. The assistant will generate a correct answer (but please verify this using your expertise!) and then a set of plausible distractors. Asking it an additional question, something like ‘Why are your distractors plausible?’, will help you generate specific feedback for students based on their answer choice, touching on common reasons why they may have selected an incorrect answer. You can also ask for detailed feedback on the correct answer, which will help to solidify your students’ learning. In this way, cyclical interaction with the MCQ assistant focused on one topic will allow you to refine and improve its responses.

Begin a new conversation with the assistant if the previous topic’s conversation was lengthy; this also eases the load on the assistant’s working memory. If you leave the assistant, such as to begin a new conversation on a different topic, you can still look back on any past conversations. When transferring the AI-generated questions to your question bank, ensure you have separated the questions that you have evaluated for subject matter accuracy and pedagogical soundness from the assistant’s raw content.

Respondus can be used on Microsoft Windows to import a .CSV question bank to Canvas. Contact Educational Innovation if you’re interested in using Respondus. Keeping the Bloom’s taxonomy level with your questions in your Word document question bank is recommended. Recording the taxonomy level with the question, answers and detailed explanations of the answers allows you to construct a comprehensive quiz, setting your students up to work at varying cognitive levels.
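If you prefer to keep your question bank in a structured file, the record-keeping described above could look something like the following Python sketch. It is only an illustration: the column names, the `reviewed` flag and the sample question are our own assumptions, and the output is not the format that Respondus or Canvas expects for import.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class Question:
    topic: str
    bloom_level: str                      # e.g. "Remember", "Apply", "Analyse"
    stem: str
    correct: tuple[str, str]              # (answer text, feedback)
    distractors: list[tuple[str, str]]    # (option text, feedback) pairs
    reviewed: bool = False                # set to True only after your own check


# Hypothetical column names, chosen for this sketch only.
FIELDS = ["topic", "bloom_level", "stem", "option", "is_correct", "feedback"]


def export_reviewed(questions: list[Question]) -> str:
    """Return CSV text with one row per answer option, reviewed questions only."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS, lineterminator="\n")
    writer.writeheader()
    for q in questions:
        if not q.reviewed:
            continue  # raw assistant output stays out of the exported bank
        options = [(q.correct, True)] + [(d, False) for d in q.distractors]
        for (text, feedback), is_correct in options:
            writer.writerow({
                "topic": q.topic,
                "bloom_level": q.bloom_level,
                "stem": q.stem,
                "option": text,
                "is_correct": is_correct,
                "feedback": feedback,
            })
    return buffer.getvalue()


# Illustrative data: one checked question and one still awaiting review.
bank = [
    Question(
        topic="Photosynthesis",
        bloom_level="Remember",
        stem="Which gas do plants absorb during photosynthesis?",
        correct=("Carbon dioxide", "Plants take in carbon dioxide to build sugars."),
        distractors=[
            ("Oxygen", "Oxygen is released by photosynthesis, not absorbed."),
            ("Nitrogen", "Nitrogen is taken up mainly through the roots."),
        ],
        reviewed=True,
    ),
    Question(
        topic="Photosynthesis",
        bloom_level="Apply",
        stem="Unchecked draft question from the assistant",
        correct=("Draft answer", "Draft feedback"),
        distractors=[("Draft distractor", "Draft feedback")],
    ),
]

csv_text = export_reviewed(bank)
print(csv_text)
```

Storing one row per answer option keeps the Bloom’s level and the option-specific feedback beside each choice, and the `reviewed` flag keeps unchecked assistant output out of the exported bank.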

Find out more

- Join an MCQ for EFT (early feedback task) workshop. These workshops are aimed at unit coordinators of 1000-level units and will take you through accessing and prompting Cogniti. The workshop slides also hold more information about resources and further support.

- Join a Cogniti workshop to learn more about the tool and start building your own agents.

- Read other Teaching@Sydney articles about generative AI and Cogniti.