What if there was a way to make assessments more engaging, reduce marking time, enhance academic integrity, and assess unit or course learning outcomes effectively? Danielle Logan-Fleming and Popi Sotiriadou from Griffith University have seen this work around the world and in many disciplines through the interactive oral (IO) assessment. They recently shared their expertise in a series of workshops aimed at supporting quality oral assessment tasks at Sydney.

Aren’t interactive oral assessments just oral exams?

Interactive oral assessments are authentic, scenario-based conversations where students can extend and synthesise their knowledge to demonstrate and apply concepts.

Importantly, an IO assessment is not a question and answer interaction, not a scripted conversation, not a viva voce, not a presentation, nor an OSCE. As each student engages with the scenario, the conversation will unfold in a unique way, allowing the educator to personalise the assessment experience. Supported with a marking rubric, the assessment is standards-based and mapped against the course or unit outcomes.

In one example demonstrated by Dani and Popi, a student studying the hospitality industry arrived at an IO ready to discuss options for a hotel chain’s breakfast offerings. Previous assessments and learning activities had helped students develop expertise on the hotel chain’s clientele, food and beverage offerings, and industry trends. The student then participated in the IO as an employee of the hotel arriving at a meeting to discuss breakfast offerings (buffet, a la carte, etc). The marker acted as a hotel manager and adapted the conversation to listen to the proposal, check for skills, and query information in a way that gave the student good opportunities to achieve high standards against a marking rubric.

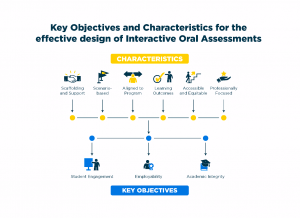

Dani and Popi identify six key components that ensure an IO assessment works, and they advise following this formula closely when designing IO assessments.

- Scaffolding and support: consider where in your unit you can introduce opportunities for students to practise the skills they will need for an IO assessment. These practice activities could focus on the topic of the assessment or the practice of a conversation itself. Some students will be unfamiliar with having to speak out loud about their ideas, having always been assessed in writing, so it is important to make sure students have practice speaking and using the language of the discipline. It’s also important to give students an example of an IO (that you create) and have them mark the example IO on a rubric.

- Scenario-based: one of the exciting things about the IO is the opportunity for creativity in how you design the assessment. The scenario allows you to pick a context that is appropriate to your discipline and the level of expertise of your students. The scenario could be prescriptive or give leeway for students to select the topic or the focus of the assessment.

- Aligned to program: consider how the IO is aligned to the rest of the program and how it can allow students to demonstrate how they extend and synthesise their knowledge.

- Learning outcomes: IO conversations are shaped by the learning outcomes they seek to assess. These outcomes define the criteria in the rubric, which in turn inform the prompts used by educators as they shape the conversation during the IO. These prompts are not pre-scripted questions, but rather sentence-starters that encourage students to address the criteria for the outcomes that the IO seeks to assess.

- Accessible and equitable: IO assessments are marked according to the rubric, which should not usually include criteria on communication quality (unless this is a specific unit learning outcome). The educator’s role is to drive the authentic conversation and try to unpack what the student knows in a supportive way. Both features make IOs more accessible and equitable.

- Professionally-focused: one of the cornerstones of the IO is the focus on professionally authentic conversations. The scenario helps students and educators place themselves into a role that allows access to the structure and language appropriate for the IO. For example, an education IO assessment might simulate a parent-teacher interaction, an architecture IO assessment might have students defending their work to a potential client, a law IO assessment might have students being asked to provide advice to a client, and an IO for language learning could have students being asked to act as guides to their city of choice.

Surely interactive orals can’t work for large cohorts?

According to Dani and Popi, the IO scales exceptionally well. You can have tutors or coordinators taking on the assessment, much like you would distribute marking normally. Dani and Popi estimate that a 10-15 minute IO conversation effectively assesses the equivalent of a 3,000-word text-based submission. They recommend allowing for some buffer time, e.g. 20 minutes per student as you start out and then 15 minutes per student as you get more comfortable. Even with a generous 5-10 minute buffer, the per-student time commitment is still significantly less than marking and providing individual feedback on a 3,000-word essay.

Plus, educators who have given IO assessments say that they actually enjoy the marking, and are able to much more clearly understand student knowledge (and gaps).

You could consider running the IO during timetabled class sessions, or allowing markers to nominate a schedule and arrange for students to book in for available time slots. Some designs intentionally keep tutors and students matched while other designs encourage tutors to assess students they don’t teach in their own classes.
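For planning purposes, the per-student time estimates above can be turned into a rough workload calculation. The sketch below is illustrative only: the cohort size (300 students), the number of markers (6) and the function name `io_marking_hours` are hypothetical, and only the 20 and 15 minutes per student come from Dani and Popi's recommendation.

```python
def io_marking_hours(cohort_size, minutes_per_student, num_markers):
    """Return (total_hours, hours_per_marker) for one round of IO assessments.

    Assumes every student gets the same time allowance and that the
    workload is split evenly across markers (a simplification).
    """
    total_hours = cohort_size * minutes_per_student / 60
    return total_hours, total_hours / num_markers


# Hypothetical unit: 300 students shared between 6 markers.
# 20 min/student when starting out, 15 min/student once comfortable.
for minutes in (20, 15):
    total, each = io_marking_hours(300, minutes, 6)
    print(f"{minutes} min/student: {total:.0f} h total, {each:.1f} h per marker")
# prints:
# 20 min/student: 100 h total, 16.7 h per marker
# 15 min/student: 75 h total, 12.5 h per marker
```

Substituting your own cohort size and marker numbers gives a quick figure to compare against the time you currently spend marking written submissions.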

Marking is variable and it’s hard to keep it fair and well-calibrated

The key is to remember that you are not just having a random conversation, nor are you peppering your students with set questions. The educator will use a rubric to prompt students, gently nudging them towards their potential to meet higher criteria. For example, if your student has described an observation (if ‘description’ were aligned to a credit grade), the prompt might be “tell me more about what made you come to this conclusion”, opening the door to analysis (if ‘analysis’ were aligned to a distinction grade). The role of the assessor is to actively listen and use the rubric to prompt students to extend their response.

Interactive oral assessments can’t be organised at scale

Booking tools like Microsoft Bookings can be helpful here. The tool allows you to set up an online booking system where you determine the available time slots for each of your assessors, and students are able to select an assessment time that works with their schedule.

You can run the sessions online or in person. If you are considering running them online, we recommend using Zoom, either creating meetings for your tutors or asking them to take responsibility for creating their own links. Zoom limits how many concurrent sessions you can run, so if you are the coordinator setting up Zoom links for your tutors, reach out to Educational Innovation for help. Also, AI software now exists that can respond to online video-based interviews in a deepfake live video feed, so be aware that online assessments are not secure.

You’ll need a rubric to make the IO work properly. You can set up your rubric in Canvas or SRES based on what you currently use for your other assessments. If you do not currently use a rubric we recommend joining one of the upcoming sessions on rubrics and Canvas.

These assessments disadvantage students

Equity and access are key concerns that IOs address, according to Dani and Popi. The usual University adjustments and support remain available to students, and might allow extra time, pre-recording, a support person, etc. Beyond these, key aspects of IOs contribute to improved relevance, motivation and opportunity. The scaffolded nature of assessments and activities in the lead-up to an IO, and the provision of exemplars and demonstrations, enable students to build expertise and familiarity with the task. Further, because the task replicates workplace or ‘real-world’ scenarios, there is a heightened sense of relevance, which motivates student participation.

Ready to introduce interactive oral assessments into your unit?

We would love to work with you and your unit as you progress with the design. We have academic developers and educational designers ready to help. If there is any support that you need, even if it’s just a conversation on how things are going, please reach out to us. Interactive oral assessments are fairly new to the University so we are keen to learn together and help share practice across the institution. Please get in touch with [email protected].

Tell me more!

Check out Dani and Popi’s excellent resources on interactive oral assessments:
