The 6,700 unit of study availabilities in 2024 had an average of 4.4 assessments each. Students took around 30,000 individual assessments and there were over 2.2 million assessment submissions.

Assessment drives learning and does so in three broad, overlapping ways:

- by allowing students to receive feedback on their work that they can act on to improve (assessment for learning),

- by being part of their learning to develop them as independent learners (assessment as learning) and

- by determining and assuring that learning has taken place (assessment of learning).

The assessment volumes quoted above, and the associated student and staff effort, reflect the importance we place on assessment. The form and style of our assessments reflect the learning outcomes, and the teaching and learning activities and tasks that we think are important. Constructive alignment across units and beyond units should then ensure the right balance of the assessment purposes listed above and of the student and staff effort.

Our assessment principles summarise the roles played by assessment across our programs and the ways they should be implemented to ensure validity, integrity, fairness, and relevance to the communities we serve:

- Assessment practices must promote learning, evaluate outcomes, and facilitate reflection and judgement.

- Assessment practices must be clearly communicated.

- Assessment practices must be inclusive, valid and fair.

- Assessment practices must be regularly reviewed.

- Assessment practices must be integrated into program design.

- Assessment practices must develop contemporary capabilities in a trustworthy way.

The emergence of generative AI tools that can mimic creative human outputs such as written work, images, video and sound requires us to ensure that our courses and assessments equip students with the skills needed to use these technologies effectively and ethically, and that we can reliably and practically assure the integrity of our award programs.

The new Sydney Assessment Framework categorises each assessment according to (i) its role as assessment of, for or as learning and (ii) how it is delivered and how adjustments are applied. As shown in Table 1, it aligns with the ‘two-lane approach’ to assessment in the age of generative AI through the appropriate use of ‘secure’ assessments where the use of AI can be controlled (Lane 1), and the development of disciplinary knowledge, skills, and dispositions alongside AI through ‘open’ assessments (Lane 2). This categorisation aims to cover all assessments at Sydney.

It is designed to ensure that constructive alignment within programs and across units is both possible and reliable, so that we can demonstrate that our graduates genuinely have met the outcomes and have the knowledge, skills, and dispositions that we state that they do, whatever pathway they take through an award and whatever their individual needs are. It also aims to enhance the information provided to students in their unit outlines and to those such as Special Consideration and the Inclusion and Disabilities Service who support students.

The new AI for Educators website (https://bit.ly/educators-ai) gives further details on each assessment category and type and includes examples illustrating how AI and inclusivity can be incorporated as part of the assessment design.

Table 1: The two-lane approach and the Sydney Assessment Framework.

| | Secure (Lane 1) | Open (Lane 2) |
|---|---|---|
| Role of assessment | Assessment of learning | Assessment for and as learning |
| Level of operation | Mainly at program level | Mainly at unit level |
| Assessment security | Secured, in person | ‘Open’ / unsecured |
| Role of generative AI | May or may not be allowed by examiner | As relevant, use of AI scaffolded & supported |
| TEQSA alignment | Principle 2 – forming trustworthy judgements of student learning | Principle 1 – equip students to participate ethically and actively in a society pervaded with AI |
| Assessment categories | Final exam; In-semester test; In class; Placement, internship, or supervision | Practice or application; Inquiry or investigation; Production and creation; Discussion |

Open: scaffolding learning including use of appropriate technologies (Lane 2)

Open assessments are for and as learning. Their primary aim is to develop students’ disciplinary knowledge, skills, and dispositions. They ensure that our graduates have the ability to learn and prosper in the contemporary world through appropriately designed tasks that support and scaffold the use of all available and relevant tools, including generative AI. The Open assessments are further categorised according to the types and levels of learning, based on Laurillard’s Conversational Framework (2013) and Bloom’s Taxonomy. These task types also align with those used by the NSW Education Standards Authority for school-based assessments. Open assessments should, wherever possible, be universally designed to minimise the need for additional adjustments.

The use of generative AI tools cannot be banned, restricted, or controlled in Open assessments.

The table below summarises the Open assessments according to the type and level of learning they support. Where assessments also involve group work, they should also promote collaboration.

Table 2: Open assessments and the type and level of learning.

| Open assessment category | Type of learning (Laurillard) | Level of learning (Bloom’s) |
|---|---|---|
| Practice or application | Acquisition and practice | Remember, understand and apply |
| Inquiry or investigation | Investigation | Analyse, understand and evaluate |
| Production and creation | Production | Create and apply |
| Discussion | Discussion and collaboration | Understand, evaluate and create |

Although these assessments are embedded at the unit of study level, alignment across the programs that the unit contributes to should ensure that students grow as learners through actionable feedback, the balance of different types of learning, and the staged development of the program’s learning outcomes and skills.

Assessments in the Practice or application category enable students to acquire knowledge and practice (Laurillard) through checking their knowledge and revising their approach based on feedback. These assessments help students remember and test their understanding of information and apply it (Bloom’s). The use of generative AI tools is fully allowed and these are integrated into the tasks to help students learn by, for example, providing analogies, mnemonics and additional practice problems.

Assessment types:

- In-class quiz: Quiz held in a live class such as a tutorial. Used for students to practise, apply or gauge their learning.

- Out-of-class quiz: Quiz held asynchronously, including online. Used for students to practise, apply or gauge their learning.

- Practical skill: Development and application of technical, laboratory, creative, professional or other disciplinary skill in or out of class.

Assessments in the Inquiry or investigation category enable students to develop inquiry and investigation (Laurillard) skills and to test their ability to analyse, understand and evaluate (Bloom’s) data, information and sources. The use of generative AI tools is fully allowed and these are integrated into the tasks to help students learn by, for example, conducting literature searches, summarising sources and analysing data.

Assessment types:

- Experimental design: The process of planning and/or conducting investigations, including hypotheses and methods, in or out of class (e.g. scientific experiments, market research, creative testing, etc).

- Data analysis: The process of collecting, analysing, and/or visualising data to generate and communicate meaningful insights (e.g. statistical analyses, qualitative coding, business intelligence, etc).

- Case studies: The process of analysing real-world scenarios to identify problems, propose solutions, and/or justify decisions (e.g. business cases, patient scenarios, engineering problems, etc).

- Research analysis: The critical examination and interpretation of research data, methodologies, and findings.

Assessments in the Production and creation category enable students to apply their knowledge in the production (Laurillard) of artefacts such as reports, creative writing, presentations and performances. Both the process and the product of creating and applying knowledge (Bloom’s) are assessed. The use of generative AI tools is fully allowed and these are integrated into the tasks to help students learn by, for example, helping brainstorm ideas and by using common productivity tools to assist with writing, drawing and media production.

Assessment types:

- Portfolio or journal: The production and curation of work samples, documentation, reflections, drafts, laboratory reports, and/or other evidence and small writing tasks demonstrating development over time.

- Performance: The creation and delivery of live or recorded performance (e.g. artistic, dramatic, musical, etc) work.

- Presentation: The production and delivery of live or recorded oral, visual, and/or multimedia communications for specific audiences.

- Creative work: The creation and production of original and creative work, including short creative writing tasks.

- Written work: The development and production of structured and/or long form writing (e.g. essay, report).

- Dissertation or thesis: A written manuscript presenting the findings of a substantial original research project. Includes projects completed as part of an honours program.

Assessments in the Discussion category enable students to share knowledge, and to evaluate and defend their own and others’ ideas and points of view through reflection, discussion and collaboration (Laurillard). These tasks enable students to understand and evaluate their own and others’ ideas and points of view and create shared outcomes (Bloom’s). The use of generative AI tools is fully allowed and these are integrated into the tasks to help students learn by, for example, brainstorming ideas, helping students write reflections and summarising discussions.

Assessment types:

- Debate: A structured, evidence-based discussion held live or asynchronously where students present and defend positions using research, data, and/or disciplinary knowledge and applying critical thinking and argumentation skills.

- Contribution: Meaningful participation in live or asynchronous environments demonstrating knowledge application, peer engagement, and/or advancement of collective understanding.

- Conversation: A structured or informal dialogue demonstrating disciplinary knowledge, critical thinking, and/or communication skills (e.g. seminar discussions, professional interviews, client consultations, etc.)

- Evaluation: Assessment of the quality of one’s own and others’ work by applying criteria to make informed and objective judgements.

Secure: in-person supervised assessment to validate learning (Lane 1)

Secure assessments are primarily of learning. Although they may also achieve some of the goals of the assessments in the Open categories outlined above, their primary task is to assure learning through controlling the environment in which an assessment is taken. Given the ability of generative AI to complete assessment tasks, commonly this control ensures that generative AI and other tools are not available or are used only in the ways intended by the examiner.

To assure learning at the level needed to provide the integrity required by accreditation (including the Higher Education Standards Framework and professional bodies) and by the community, this control requires in-person supervision with the ability to restrict the use of technologies including devices and wearables such as smart glasses, rings and ear pieces. The difficulty in doing this and the associated costs, inconvenience and intrusiveness mean that Secure assessments should be planned at the degree or program level at milestone and summative points, rather than as the responsibility of each contributing unit.

The table below summarises the Secure assessments according to the method of security and who is responsible for applying it.

| Secure assessment category | Security | Responsibility |
|---|---|---|
| Final exam | In person invigilation | Examinations Office with University approved invigilators |
| In-semester test | In person invigilation | Unit coordinators with University approved invigilators |
| In class | In person supervision | Unit coordinators with trained teaching staff |
| Placement, internship, or supervision | In person supervision | Unit coordinators with trained placement, internship or supervision teaching staff |

Labelling an assessment as a final exam has a number of policy and procedure implications, such as needing to run in our designated exam periods. In 2024, there were around 1,900 exams with over 220,000 student sittings. The maximum and minimum weightings listed below reflect the need to coordinate these across campus and their likely contribution to the overall assessment weighting. The use of generative AI and other tools is either prevented, restricted, or allowed by the examiner as required.

Assessment types:

- Written exam: Live written exam, written exam with non-written elements, or non-written exam, however administered. Worth between 30% and 60%.

- Practical exam: Practical exam or practical exam with non-practical elements, however administered. Includes assessment of laboratory, clinical and performance skills. Worth between 10% and 60%.

- Oral exam: Live oral exam. Worth between 10% and 60%.

Labelling an assessment as an in-semester test similarly has a number of policy and procedure implications, such as needing to employ trained invigilators to run them during teaching weeks in the semester. In 2024, there were around 460 in-semester tests with over 67,000 student sittings. The maximum and minimum weightings listed below reflect the need to coordinate these across campus during teaching weeks and their likely contribution to the overall assessment weighting. The use of generative AI and other tools is either prevented, restricted, or allowed by the assessor as required.

Assessment types:

- Written test: Live written test, written test with non-written elements, or non-written test, however administered. Worth between 20% and 60%.

- Practical test: Practical test or practical test with non-practical elements, however administered. Includes assessment of laboratory, clinical and performance skills. Worth between 10% and 60%.

- Oral test: Live oral test. Worth between 10% and 60%.
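The weighting ranges listed for final exams and in-semester tests can be expressed as a simple lookup. The following is a minimal sketch only: the names `WEIGHTING_RANGES` and `weighting_is_allowed` are hypothetical, and treating both ends of each range as inclusive is an assumption rather than stated policy.

```python
# Weighting ranges (in percent) transcribed from the assessment types listed above.
# The dictionary and function names are hypothetical, for illustration only.
WEIGHTING_RANGES = {
    "Written exam": (30, 60),
    "Practical exam": (10, 60),
    "Oral exam": (10, 60),
    "Written test": (20, 60),
    "Practical test": (10, 60),
    "Oral test": (10, 60),
}


def weighting_is_allowed(assessment_type: str, weighting: float) -> bool:
    """Return True if the weighting (in percent) falls inside the listed range.

    Assumption: both the minimum and the maximum are inclusive.
    """
    if assessment_type not in WEIGHTING_RANGES:
        raise KeyError(f"No weighting range listed for {assessment_type!r}")
    minimum, maximum = WEIGHTING_RANGES[assessment_type]
    return minimum <= weighting <= maximum
```

Under these assumptions, a written exam weighted at 40% passes the check, while one weighted at 20% does not, although 20% would be acceptable for a written in-semester test.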

In class assessments are supervised in person, either in regular timetabled class sessions such as practicals or in other sessions run by the faculty or school. The use of generative AI and other tools is either prevented, restricted, or allowed by the assessor as required.

- Interactive oral: Scenario-based conversations to demonstrate, synthesise, and extend knowledge and skills. Unlike an oral exam, oral test or viva voce, this is a practical application of what has been learned, often within a real-world scenario.

- In person, practical, skills, or performance task or test: Observation and assessment of live demonstrated practical, skills or performance tasks. Includes tests of clinical, laboratory, field or other skills in a supervised environment.

- In person written or creative task: Observation and assessment of live written or creative tasks.

- Q&A following presentation, submission or placement: Live question and answer session following a live performance, presentation, placement or submission of an artefact. Each of these could be constructed as two separate tasks, such as an Open assessment where a student makes a presentation using generative AI tools and a Secure assessment where a Q&A is used to assure the assessor that the student has the required knowledge.

Placement, internship, or supervision assessments are authentic assessments that occur on placements, internships or in other supervised environments such as workplaces. They require live supervision but this can occur as part of the work integrated learning experience. The use of generative AI and other tools is either prevented or restricted by the assessor as required.

- Peer or expert observation or supervision: Live observation by a peer, expert or supervisor on a placement, internship or in another supervised environment.

- In person practical or creative task: Live observation and assessment of practical or creative tasks on a placement, internship or in another supervised environment.

- Clinical exam: Live clinical exam on a placement, internship or in another supervised environment.

Group work

Each of the assessment types listed above could be suitable for, and selected as, a group task.

Assessment maps and program-level design

Identifying secure assessments using our old assessment categories and types is not possible. Full implementation of the two-lane approach to ensure the integrity of our awards at the program level (e.g. course, major, or specialisation as appropriate to the award) requires first the mapping of assessments to the new framework and then the development of assessment maps and plans for each program.

Moving to the new assessment framework

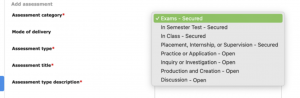

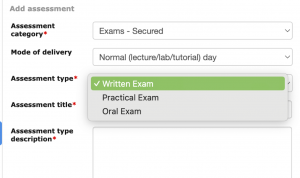

Ahead of semester 2 2025, Sydney Curriculum will be reconfigured with the assessment categories and types listed above. The images below show how a coordinator first picks an assessment category and then is presented with only the assessment types within it. An educational designer will map existing assessment to the likely categories and types in the new framework, confirm this mapping with each coordinator and then update Sydney Curriculum accordingly. Workshops within each discipline will be held to facilitate the constructive alignment of Secure and Open assessments across each program. Consultations will be available for coordinators with assessment expert to provide opportunities for enhancements and redesign, such as incorporation of generative AI tools in assessments and universal design.
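The category-then-type selection can be pictured as a nested lookup. The sketch below is illustrative only and is not how Sydney Curriculum is implemented: the names `FRAMEWORK` and `types_for` are hypothetical, while the categories and types are transcribed from the lists in this article.

```python
# Lane -> category -> assessment types, transcribed from the framework above.
# FRAMEWORK and types_for are hypothetical names used only for illustration.
FRAMEWORK = {
    "Open (Lane 2)": {
        "Practice or application": [
            "In-class quiz", "Out-of-class quiz", "Practical skill",
        ],
        "Inquiry or investigation": [
            "Experimental design", "Data analysis", "Case studies", "Research analysis",
        ],
        "Production and creation": [
            "Portfolio or journal", "Performance", "Presentation",
            "Creative work", "Written work", "Dissertation or thesis",
        ],
        "Discussion": ["Debate", "Contribution", "Conversation", "Evaluation"],
    },
    "Secure (Lane 1)": {
        "Final exam": ["Written exam", "Practical exam", "Oral exam"],
        "In-semester test": ["Written test", "Practical test", "Oral test"],
        "In class": [
            "Interactive oral",
            "In person, practical, skills, or performance task or test",
            "In person written or creative task",
            "Q&A following presentation, submission or placement",
        ],
        "Placement, internship, or supervision": [
            "Peer or expert observation or supervision",
            "In person practical or creative task",
            "Clinical exam",
        ],
    },
}


def types_for(category: str) -> tuple[str, list[str]]:
    """Return the lane and the assessment types available for a chosen category."""
    for lane, categories in FRAMEWORK.items():
        if category in categories:
            return lane, list(categories[category])
    raise KeyError(f"Unknown assessment category: {category!r}")
```

For example, `types_for("In-semester test")` returns the Secure lane together with the written, practical and oral test types, so a coordinator who has chosen that category would see only those three options.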

Tell me more!

- AI for Educators website. This website contains further details on each assessment category with examples showing how generative AI tools and universal design principles can be incorporated to support students.

- Aligning our assessments to the age of generative AI

- Program-level assessment and the two-lane approach

- Frequently asked questions about the two-lane approach to assessment in the age of AI

- Artificial intelligence and assessment design. This intranet page gives further information on policy changes for coordinators at Sydney.

- Designing for diversity – inclusive assessment
