The University of Sydney Doctor of Medicine (MD) Program includes a mandatory 14-week individual student research project in Year 3 of the 4-year postgraduate program. We have ~300 students per year, 250+ research supervisors and 12 research coordinators across 10 locations in NSW, so delivering and assessing these MD projects is no mean feat. As an increasing number of non-HDR research projects are being introduced in our degree programs, we want to share our approach to delivering and assessing research projects for large student cohorts.

Allocating students and staff to projects

MD projects are supervised by academic staff or affiliates. Students are allocated to projects using Allocate!, because the allocation must take into account project preference, project location, and supervisor capacity. The research education content and assessment tasks are delivered via Canvas to both students and project supervisors. Learning objectives are clearly stated, and projects are managed through formative milestone tasks that progress the project and map to the learning objectives. The final assessment task is a 3000-word written scientific report.

Grading 300+ written project reports within a 6-week timeframe is achieved using an online process that was developed in collaboration with the FMH Educational Design team using the Student Relationship Engagement System (SRES).

Assessing the final product

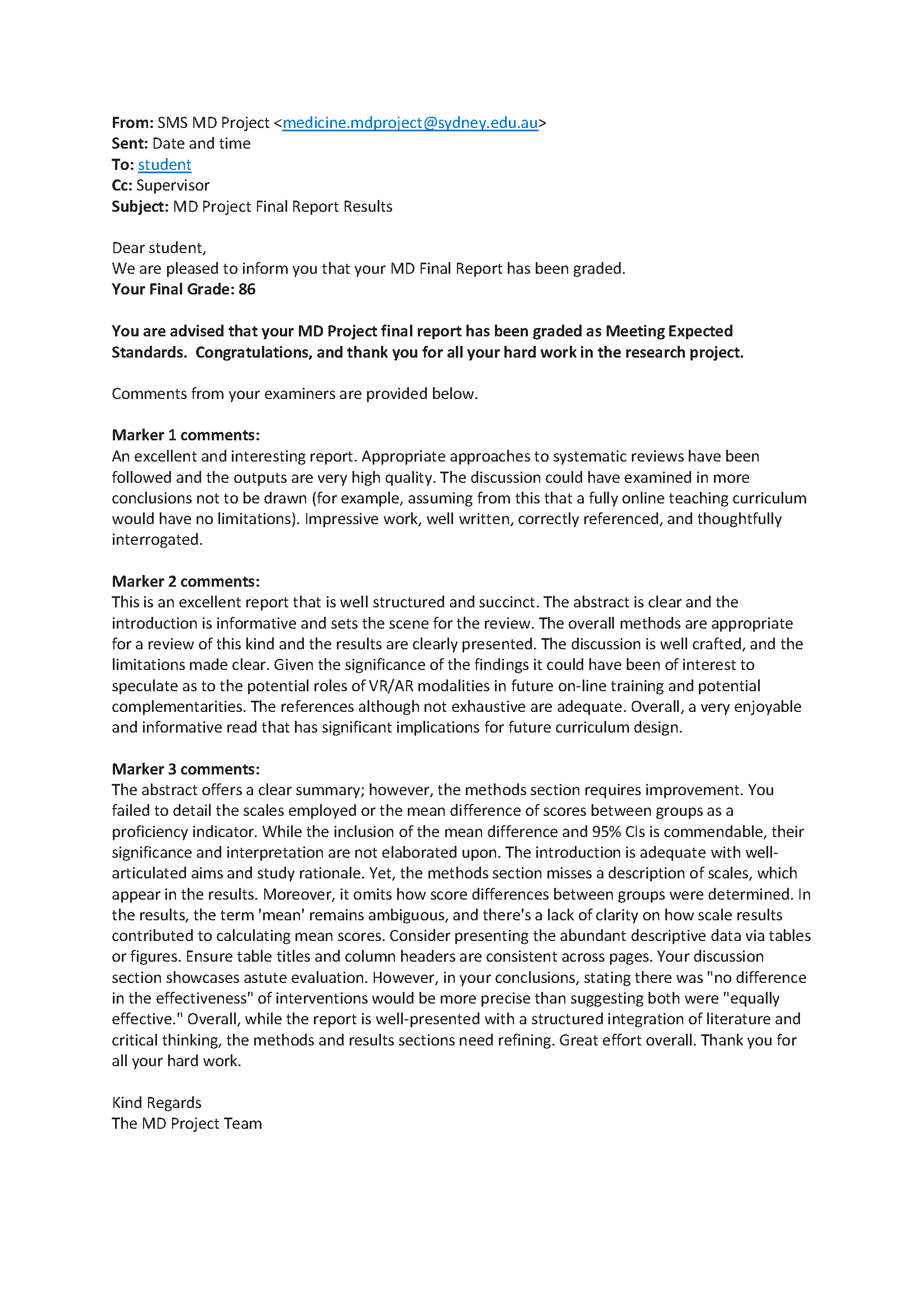

Project supervisors assess up to three final reports. Reports to grade are sent via online links, with regular reminders. Each report is graded by a minimum of two assessors who are independent of the project. A detailed marking rubric is provided, with a thorough description of the marking criteria. A final mark is awarded out of 100, and a minimum of 150 words of written feedback is requested. Exemplars and video advice are provided to help assessors benchmark their marking.

Collating the grades

The MD Project team receive grades from assessors via SRES. Reports whose two grades differ by more than 15 marks are sent to a third assessor, and those with very high (>85/100) or fail (<50/100) grades are also sent for a third or further opinion. Once the grades are in, they are averaged and SRES generates a report with the written feedback, which is then sent to the students. Time to grade is nominally set at one hour per report, but in practice it can vary from 20 minutes to four hours depending on the quality of the report and the experience of the grader. Thus, for a cohort of 300 with at least two assessors per report, we expect the grading commitment to be 600+ hours of academic time. We ask each supervisor to set aside a half day for grading in their calendars well in advance so that it gets done in a timely manner. It’s no mean feat!
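The triage rule described above can be sketched in a few lines of Python. This is only an illustration, not how SRES implements it: the function and constant names are hypothetical, and it assumes that the high and fail thresholds apply to either individual mark and that all marks received for a report are averaged equally.

```python
from statistics import mean

# Thresholds taken from the text; all names here are hypothetical.
MAX_DIFFERENCE = 15  # a gap larger than this triggers a third assessor
HIGH_MARK = 85       # marks above this are sent for a further opinion
PASS_MARK = 50       # marks below this are sent for a further opinion


def needs_third_assessor(mark_a: float, mark_b: float) -> bool:
    """Return True if a report should go to a third assessor."""
    if abs(mark_a - mark_b) > MAX_DIFFERENCE:
        return True
    # Assumption: the thresholds are checked against each individual mark.
    return any(m > HIGH_MARK or m < PASS_MARK for m in (mark_a, mark_b))


def final_mark(marks: list[float]) -> float:
    """Average all marks received for one report (assumed equal weighting)."""
    return mean(marks)
```

For example, a report marked 60 and 80 would be flagged because the marks differ by 20, while a report marked 70 and 75 would simply be averaged.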

So, how did we do?

Before we used the SRES grading process, it took us 6 months to grade the MD Project final reports – now we do it in 6 weeks with a minimum of two assessors per student, and we are able to provide quality written feedback to all students. We compared the grading of the two assessors for each project in the 2019 and 2022 MD project cohorts by calculating the intraclass correlation coefficient (ICC) for the total mark as well as for the marks awarded for individual components. There was no significant difference in the mean total mark awarded by the first (73.9 ± 10.9) and second assessor (74.6 ± 11.7, P = 0.341); however, only a moderate ICC of 0.508 (95% CI: 0.411-0.588) was seen for total marks. Low to moderate ICCs were seen for individual components of the report, with the highest correlation seen for the discussion section and the lowest for the presentation sections of the report.
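For readers who want to run a similar check on their own marks, here is a minimal sketch of an ICC calculation. It assumes the one-way random-effects, single-rater form, often written ICC(1,1), which suits designs where each report is marked by a different pair of assessors. The form and software used for the figures above are not stated here, so this sketch may not reproduce them. The function name and the example marks are hypothetical.

```python
def icc_oneway(pairs: list[tuple[float, float]]) -> float:
    """One-way random-effects ICC(1,1) for two marks per report."""
    n = len(pairs)  # number of reports
    k = 2           # marks per report
    grand_mean = sum(a + b for a, b in pairs) / (n * k)
    report_means = [(a + b) / k for a, b in pairs]

    # Mean square between reports
    ms_between = k * sum((m - grand_mean) ** 2 for m in report_means) / (n - 1)
    # Mean square within reports (disagreement between the two assessors)
    ms_within = sum(
        (a - m) ** 2 + (b - m) ** 2 for (a, b), m in zip(pairs, report_means)
    ) / (n * (k - 1))

    return (ms_between - ms_within) / (ms_between + (k - 1) * ms_within)


# Illustrative marks only, not data from the MD cohorts.
example = [(70, 72), (60, 58), (80, 81)]
print(round(icc_oneway(example), 3))
```

A value close to 1 means the two assessors rank and score reports almost identically; values near 0 mean the disagreement within a report is as large as the spread between reports.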

We also generate a feedback report for the assessors, separate from the SRES system, showing how their grading compared with the cohort and with the other grader. These reports are gratefully received by the assessors, assist with our benchmarking, and add to the training of the assessors.

Managing and benchmarking final report grading across a large research project program is challenging, but using SRES streamlines the process and enables automated feedback to the students. Future directions may include further assessor training to improve inter-rater reliability, as standard benchmarking processes are not feasible with 250+ assessors in this timeframe. We will also explore seeking student and examiner feedback on the grading process.

Tips and Tricks:

- Managing a large multi-marker assessment requires a team effort from the academic, educational design, and professional support staff.

- Provide your markers with a quick video to orient them to the marking interface, the rubrics and required standards to keep them on the same page.

- Have a manual marking form as a back-up, as accessing the Uni systems can occasionally be problematic (e.g. clinician affiliates often encounter hospital firewall problems); this helps ensure a smooth completion of the grading.

Please get in touch with us if you would like more info: [email protected]

Thanks to the whole team from eLearning: Tonnette Stanford, Sasha Cohen, Daej Arab and the MD Project team: Sally Middleton, Richmond Jeremy, Rajneesh Kaur.