ASCILITE 2018 was held at Deakin University from 25 to 28 November 2018. The annual ASCILITE conference brings together academics, developers, researchers, and technologists from around Australasia to discuss pedagogy and educational technologies. Several members of the Educational Innovation team attended, and here we summarise some key presentations on building students’ study skills, promoting evaluative judgement, and designing authentic assessments that discourage online student dishonesty.

This article was contributed by Ruth Weeks, Samantha Haley, and Adam Bridgeman.

Shifting our focus: Moving from discouraging online student dishonesty to encouraging authentic assessment of student work

This panel was presented by Carol Miles and Keith Foggett, University of Newcastle.

Carol and Keith presented an inspiring session on using authentic assessment to discourage academic dishonesty. They noted that only a relatively small percentage of students (between 1 and 8%) commit academic dishonesty, yet universities are increasingly focussing on efforts to combat dishonesty that actually prevent the use of authentic assessment – quite ironic really!

They pointed out that academic essays are, in fact, one of the more likely types of assessment for students to plagiarise, as it is fairly simple to buy an academic essay. In addition, high-stakes assessments that carry a high percentage of the overall grade and haven’t been adequately scaffolded may induce normally honest students to consider plagiarising. As alternatives, they provided a number of examples of assessments that are more authentic and less likely to involve academic integrity issues:

- Science in the news – find evidence in literature for news release claims

- Evaluate a website or create a webpage – on a topic related to course content

- Follow a piece of legislation through government processes – what groups are lobbying for/against it and why?

- Prepare for a hypothetical interview – students do background research on a company or job and how they fit the position description

- Compare and contrast a scholarly journal article with an article from a popular magazine

- Design a theme for a conference – describe why a topic would be of interest to experts in the field

- Compare and contrast the ways different disciplines deal with the same subject matter

So, by replacing traditional academic essays with assessment tasks that are more authentic, we can make it far harder for contract cheating to occur and offer students assessment tasks that are more relevant to their future workplace needs.

Read more in the paper available here on page 562.

Evaluative judgement and peer assessment: Promoting a beneficial reciprocal relationship

This talk was presented by Joanna Tai, Deakin University.

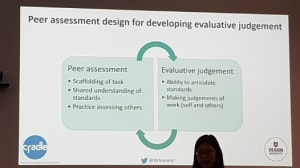

Joanna presented a succinct but powerful session on the importance of developing evaluative judgement skills in our students. The term evaluative judgement refers to the ability to accurately judge the quality of one’s own and others’ work, and it therefore requires a deep, holistic understanding of quality. Evaluative judgement capability is vital to success in the workforce, where we are required to make judgements on the quality of our work on a daily basis. Joanna stated that evaluative judgement requires discipline-specific “content knowledge, skills, attitudes and dispositions”.

Any of the following can and should be used to facilitate a better understanding of ‘quality’ in the disciplinary context and therefore assist in developing a student’s evaluative judgement skills:

- Exemplars

- Rubrics

- Self and peer assessment

- Feedback

- Portfolios and reflective work

There is much evidence to suggest that a well-designed peer assessment task may be an effective way to develop the skills needed to identify and judge the quality of a piece of work. As illustrated, Joanna suggested the task must initially be strongly scaffolded, with clear guidelines regarding expected standards, and multiple opportunities to practise.

She stated that the tools within our learning management systems can effectively support the development of evaluative judgement through readily available discussions, the sharing of rubrics and exemplars, and the co-creation of artefacts, with the longer-term benefit that the system becomes a resource that facilitates reflection.

Read more in the full paper available here on page 516.

Ready to Study: an online tool to measure learning and align university and student expectations via reflection and personalisation

This talk was presented by Logan Balavijendran and Morag Burnie, The University of Melbourne.

Logan and Morag from the Academic Skills team at Melbourne presented on a module developed to prepare new students for the expectations and style of learning at university. In the module, produced using SmartSparrow, students are presented with a number of typical scenarios that they might face during a semester. Using an adaptive learning approach, the scenarios are personalised to each student’s course. After answering questions about how they would act (e.g. “you might have a lecture in your course, how would you take notes?”), students receive instant and personalised feedback based on their responses together with recommended developmental resources.

The module has proven effective as a diagnostic tool that helps students and staff understand students’ knowledge and skills, as well as their assumptions and preconceptions about how to study at university. Analysis of the results will inform the development of resources by the Academic Skills team. The module was deployed in the learning management system at Melbourne for both undergraduates and fully online graduate students. It forms part of the Ready to Study project.

To learn more about the module and project and to try a demo version, visit the Ready to Study website.

Read more in the full paper available here on page 35.