In Semester 1, 2024, I coordinated FRNC2603 French 3, a core language unit in the Introductory and Intermediate French and Francophone Studies major and minor. The current iteration of the unit is based around a student-centred, task-based pedagogical approach called global simulation. Developed by Francis Debeyser in the 1970s, the approach is best understood as an extended role play in which the classroom becomes a specific place – in this case an apartment building in present-day Paris – and each student creates an avatar who is a resident of the building. Students remain in character for the entire semester, interacting with other residents and learning about Paris in the lead-up to the 2024 Summer Olympic Games. The unit’s objectives are to develop students’ French skills across four competencies: listening, speaking, reading and writing.

The dilemma

With student use of generative AI being inevitable and ubiquitous, I had two objectives. Firstly, I wanted to take advantage of the introduction of generative AI, through Cogniti, as a tool available to all students by embracing and authorising its use in the unit’s learning activities in a controlled way. Secondly, I wanted to help students familiarise themselves with generative AI and critically evaluate its benefits and pitfalls. How could I encourage students to use AI ethically and responsibly, and at the same time facilitate improvement in writing style and accuracy throughout the semester? How could students receive regular, personalised feedback on their writing in a time-efficient manner? How could I ensure that learning was taking place and that students’ writing skills and grammar application were improving? How could I be sure to adhere to the University’s guidelines on the use of generative AI tools for marking and feedback?

A scaffolded solution

The four-step process I developed incorporated both lane 1 and lane 2 formative and summative tasks and used Padlet and Cogniti. Although developed for a specific language and a specific unit, the approach is easily transferable to writing activities across a range of disciplines. It builds on a pre-generative-AI learning activity that involved formative peer evaluation of classmates’ writing.

The scaffolded approach aimed to provide students with regular writing practice and personalised feedback on demand about their grammatical accuracy, syntax, and vocabulary use. This process was used weekly throughout the semester and in the final oral summative assessment task.

- In Step 1 (in class), students reflected in writing about a particular theme (developing their Parisian persona; inventing gossip about their neighbours; complaining about the inconveniences of living in Paris in the lead-up to the Olympic Games; describing their sporting heroes; relating Olympic scandals, etc.) on a Padlet for 15 minutes without using generative AI or neural machine translators.

- In Step 2 (after class), students copied their Padlet text and pasted it into Cogniti, asking the AI to correct and explain errors. Students were not given specific prompts to use with Cogniti; they were encouraged to experiment to learn what produced the best output. For this, we used the ‘vanilla GPT-4’ Cogniti agent, which gave students equitable access to the raw power of GPT-4.

- In Step 3, students pasted the AI-edited version of their text back into the same Padlet post and reflected in writing on their errors and the AI’s suggestions.

- In Step 4, tutors read the weekly posts and discussed the AI’s outputs collectively in class. Students shared their prompts, which encouraged a conversation around the best prompts to elicit the desired output. This reassured students that tutors were interacting with their work, and helped students to reflect critically on how generative AI can facilitate their language learning, and on the dangers of hallucinations.

This four-step process helped maintain engagement through the provision of regular, instantaneous AI feedback. The formative writing activities counted towards students’ participation grades, and helped students prepare for their final oral summative task. The final oral task had a dual format: (i) a pre-prepared dialogue in pairs based around a specific image, followed by (ii) an unscripted individual Q&A session with their tutor that stemmed naturally from the dialogue. The pre-prepared dialogue was a lane 2 activity submitted to Canvas, incorporating the same process that was followed in class during the semester. Each submission comprised an initial version written without AI, which was then submitted to the AI; the AI’s response, including corrections, together with the students’ reflections to demonstrate their understanding; and the final version. The initial version and the students’ reflective piece were weighted heavily to emphasise the importance of careful drafting and critical analysis.

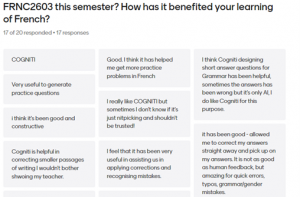

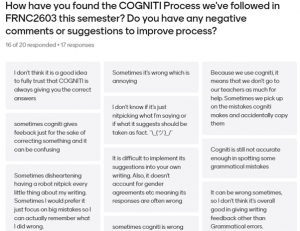

The student perspective

According to the feedback they gave via Mentimeter, students found the process constructive and learned to recognise AI pitfalls. They appreciated the immediacy of the AI and recognised that there were limitations to the AI’s capabilities, for now.

The teacher perspective

Pro: The AI-enhanced editing and feedback process was efficient and time-saving. It is an improvement on the peer-evaluation activity conducted prior to the emergence of ChatGPT because the AI, although not foolproof, is generally better than students’ classmates at spotting and explaining their errors in writing and grammar. As participation in each step contributed to their end-of-semester grade, the process helped maintain student engagement throughout the semester.

Con: Students sometimes took shortcuts and did not reflect deeply on AI’s suggestions and corrections.

The next iteration

Padlet-Cogniti 2.0 will involve an additional step at the end of the semester, designed to encourage critical reflection on learning throughout the unit. Students will combine their Padlet posts into one document, and submit a self-assessment of their writing progress throughout the semester.

For improved AI agent performance, we could create a specific Cogniti writing agent for this unit, which would tailor the process to the level of student competency and render the feedback more relevant.

This approach can be applied to any discipline. It’s a useful way to help students understand the pros and cons of using AI and critically analyse AI’s outputs.
