Two commonly held beliefs about multiple choice questions and other question types that can be marked by a computer are that they can only really test memorisation and surface-level knowledge, and that writing a good number of powerful questions takes an awfully long time. In August, Educational Innovation hosted Sam Haley, Sharon Herkes, and Dane King from the faculties, who ran a workshop sharing their time-saving tips for writing meaningful multiple choice and other automarked questions. We’ve summarised their approaches in this short article, and included a recording of their session and their slidedeck for anyone who wants to follow up further.

Important factors to consider with automarked questions

Automarked questions provide a lot of flexibility for you and your students: you can offer virtually unlimited practice opportunities accompanied by rich feedback, and students can access them at times convenient for them. For summative assessments, they provide a way to efficiently gauge student understanding and achievement. When building these questions, it’s important to think carefully about how and why you are using them:

- Do they align with the unit learning outcomes and learning activities? For example, if you want your students to be able to analyse and evaluate concepts, do your quizzes just require memorisation and recall?

- How do they fit in with the rest of the curriculum? For example, if they are used to encourage students to do some pre-work, are the expectations, and especially the connection to class content, made clear?

- What information can you, as the teacher, get from quizzes with automarked questions? For example, if you write the questions to address and surface various misconceptions, simple statistics on how your cohort answered each question may help you to target your in-class teaching.

- What feedback are students given after they complete the questions? Automarking can also mean autofeedback: are you providing students with feedback that guides their development after they complete the quiz? (A mark or grade on its own doesn’t count.)
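
The per-question statistics mentioned above can be very simple. As a rough sketch, assuming you can export each student’s chosen option per question (the responses, answer key, and 25% threshold below are all invented for illustration), a few lines of Python give the proportion of the cohort answering correctly and flag distractors that many students chose, which may point to a shared misconception:

```python
from collections import Counter

# Hypothetical exported responses: the option each student chose, per question.
responses = {
    "Q1": ["A", "A", "C", "A", "B", "A", "C", "A"],
    "Q2": ["B", "D", "B", "D", "B", "D", "D", "A"],
}
# Hypothetical answer key.
answer_key = {"Q1": "A", "Q2": "D"}


def summarise(question: str) -> dict:
    """Proportion of the cohort answering correctly, and the share choosing each option."""
    chosen = responses[question]
    counts = Counter(chosen)
    total = len(chosen)
    return {
        "proportion_correct": counts[answer_key[question]] / total,
        "option_shares": {opt: n / total for opt, n in sorted(counts.items())},
    }


def popular_distractors(question: str, threshold: float = 0.25) -> list[str]:
    """Incorrect options chosen by at least `threshold` of the cohort (threshold is arbitrary)."""
    shares = summarise(question)["option_shares"]
    return [opt for opt, share in shares.items()
            if opt != answer_key[question] and share >= threshold]


for q in responses:
    print(q, summarise(q), popular_distractors(q))
```

Here option B in Q2 was chosen by more than a third of the (made-up) cohort, which would be worth following up in class.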

How to write lots of meaningful questions very quickly

Considering the options

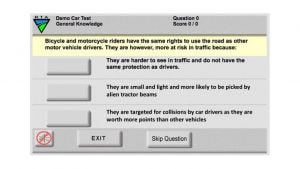

A question’s power depends on both its stem and the options it offers. Sharon and Dane presented three alternative sets of options for a question from a driver knowledge test.

Question: Bicycle and motorcycle riders have the same rights to use the road as other motor vehicle drivers. They are, however, more at risk in traffic because…

Alternative options set 1

- They are harder to see in traffic and do not have the same protection as drivers.

- They are small and light and more likely to be picked up by alien tractor beams.

- They are targeted for collisions by car drivers as they are worth more points than other vehicles.

Alternative options set 2

- They are harder to see in traffic and do not have the same protection as drivers.

- They are careless and do not obey road rules.

- They ride too fast and do not turn their lights on.

Alternative options set 3

- They are harder to see in traffic and do not have the same protection as drivers.

- They are easily visible in traffic due to their bright helmets, which give them greater protection than other drivers.

- They are harder to see in traffic, but they have helmets that give them greater protection than other drivers.

Which of these sets would be the most effective at testing the knowledge the question targets? To answer this, we need to understand what the question was designed to test. Set 2 contains the options used in the actual test; from these, it’s clear that the question writer wanted to test learners on two concepts: visibility in traffic, and the level of protection available to bicycle and motorcycle riders. Given these two concepts, set 2 doesn’t offer the most meaningful range of options, because the second and third options are obviously incorrect, not unlike the hilariously incorrect options in set 1. (At least in set 1, the longest option is not the correct one.) By contrast, the options in set 3 are the strongest, because every one of them tests learners’ understanding of the two key concepts of visibility and protection.

How can this be applied to your own unit?

A tried-and-tested approach

Sharon and Dane recommended an approach they have used many times to generate many meaningful questions quickly. Its efficiency comes from assembling different permutations of a small pool of potential options.

Step 1: Identify a topic

Start with a topic that aligns with a learning outcome, for example, LO18.4: Explain the effects different cultivation methods have on the number and weight of tomatoes grown.

Step 2: Identify some true statements related to the topic

For example:

- The growing season for tomatoes is proportional to the length of time daytime temperatures remain above 20°C

- The weight of the tomatoes is proportional to the amount of water provided

- The yield will increase with longer exposure to sunlight

Step 3: From these statements, create true options

For example, for the first statement above, a true option could be that “Increasing the duration of the growing season would increase the number of tomatoes produced”.

Step 4: From these statements, create false options

For example, for the first statement above, a false option could be that “Increasing the duration of the growing season would decrease the number of tomatoes produced”.

Step 5: Write a stem based on the topic

From the learning outcome, the topic we are trying to test here relates to improving crop yields and the cultivation methods that may help. A possible stem might be: “The health and yield of a tomato plant are determined by factors affecting the germination and growth of seedlings and the length of the growing season. Which of the following statements regarding cultivation methods is correct?”

Step 6: Assemble the different combinations

If, for example, you wrote three statements in step 2 and then wrote one true and one false option for each of them, you now have six options to work with. For a four-option multiple-choice question, you can create several variants just by swapping options in and out.
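
For those who like to see the mechanics, step 6 can be sketched in Python. This is a minimal illustration, not Sharon and Dane’s own tool: it assumes each variant has exactly one correct option (matching the “which statement is correct?” stem above), and only the first true/false pair comes from the example in steps 3 and 4; the water and sunlight pairs are illustrative placeholders.

```python
from itertools import combinations

# True/false option pairs from steps 3 and 4. Only the first pair is from the
# worked example; the other two are illustrative placeholders.
option_pairs = [
    ("Increasing the duration of the growing season would increase the number of tomatoes produced",
     "Increasing the duration of the growing season would decrease the number of tomatoes produced"),
    ("Providing more water would increase the weight of the tomatoes",
     "Providing more water would decrease the weight of the tomatoes"),
    ("Longer exposure to sunlight would increase the yield",
     "Longer exposure to sunlight would decrease the yield"),
]

true_options = [true for true, _ in option_pairs]
false_options = [false for _, false in option_pairs]


def assemble(n_options: int = 4) -> list[dict]:
    """Every variant with exactly one true option and n_options - 1 false options."""
    variants = []
    for correct in true_options:
        for distractors in combinations(false_options, n_options - 1):
            variants.append({"correct": correct, "options": [correct, *distractors]})
    return variants


variants = assemble()
print(len(variants))  # prints 3
```

With three pairs, a four-option question gives three variants (one per true option), while a three-option question gives nine. Each additional statement you write in step 2 grows the pool quickly.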

Check out the slidedeck and the recording (both linked at the bottom of this article) for more in-depth examples.

How to implement this in Canvas

Part of Strategy 5 calls for the “greater use of pre-readings, pre-recorded videos, and brief, ideally automated, diagnostic assessment of understanding of core concepts and themes”. Most Open Learning Environment units will also have a substantial component of assessment that is delivered and automarked online. As we move to Canvas as the University’s single learning management system, you can take advantage of Canvas’ intuitive and powerful (and fast!) quiz engine to deliver questions at random from the pools you generate using the approach above. Here is one way to do it:

- Generate sets of questions, for example one set about pandas and another about koalas. The permutation approach above makes these quick to create.

- Add each set of questions to its own Canvas ‘question bank’. Here’s a useful guide from Canvas on how to do this. In this example, we’d now have one question bank in Canvas containing the questions on pandas, and another containing the questions on koalas.

- Then, create a Canvas quiz that draws questions at random from the two question banks. Say we want to create a six-question quiz: two questions about pandas (drawn from the panda bank), two questions about koalas (drawn from the koala bank), and two questions about… buffalo that will always be shown. Our Canvas quiz will need two ‘question groups’, each linked to one of the question banks and set to pick two questions from it (see screenshot), as well as the two fixed buffalo questions. Here’s another useful guide from Canvas that explains these steps in more detail.
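
To make the steps above concrete, here is a small Python simulation of what each student’s quiz attempt looks like. It is not Canvas code and does not use any Canvas API; it simply mimics the selection logic, assuming each question group draws distinct questions from its linked bank. The bank contents and sizes are hypothetical.

```python
import random

# Hypothetical question banks (in Canvas these would be question banks).
panda_bank = [f"Panda question {i}" for i in range(1, 7)]
koala_bank = [f"Koala question {i}" for i in range(1, 7)]
# Questions added directly to the quiz, so every student sees them.
fixed_buffalo = ["Buffalo question 1", "Buffalo question 2"]

# Each question group is linked to one bank and picks a set number of questions from it.
question_groups = [(panda_bank, 2), (koala_bank, 2)]


def build_attempt(rng: random.Random) -> list[str]:
    """Simulate one student's six-question attempt."""
    attempt = []
    for bank, pick_count in question_groups:
        attempt.extend(rng.sample(bank, pick_count))  # distinct questions, no repeats
    attempt.extend(fixed_buffalo)
    return attempt


rng = random.Random(2024)  # seeded only so the example is reproducible
for student in ("Student A", "Student B"):
    print(student, build_attempt(rng))
```

Every simulated attempt has two panda questions, two koala questions, and the same two buffalo questions, but the panda and koala questions will usually differ between students.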

Using this combination of question banks and linked question groups, you can easily make Canvas quizzes that draw from a pool of questions. Because students are likely to receive different selections of questions, this not only helps with academic integrity obligations, but also gives students exposure to multiple ways of expressing equivalent knowledge.
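
How much variety does this give? As a rough sketch, assuming each group picks questions independently and ignoring question order, the number of distinct question sets is the product of the number of ways each group can choose from its bank. The bank sizes below are hypothetical; fixed questions are the same for everyone, so they add no variety.

```python
from math import comb


def distinct_question_sets(groups: list[tuple[int, int]]) -> int:
    """groups: (bank_size, pick_count) for each question group. Question order is ignored."""
    total = 1
    for bank_size, pick_count in groups:
        total *= comb(bank_size, pick_count)  # ways to choose pick_count from bank_size
    return total


# Two groups, each picking 2 questions from a hypothetical bank of 6.
print(distinct_question_sets([(6, 2), (6, 2)]))  # prints 225
```

Growing one bank from 6 to 10 questions would raise this to 45 × 15 = 675, so a few extra permutations go a long way.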

Don’t forget

Here are some of Sam, Sharon, and Dane’s must-dos:

- Align each question with the learning outcomes

- Create pools of questions, add them to appropriate Canvas question banks, and use these to populate quizzes

- Leverage common misconceptions to help gauge understanding and guide learning

- Use the Canvas Rich Content Editor to add rich media such as images, videos, and links, creating more authentic scenarios in questions and options

- Provide quality feedback for both correct and incorrect responses: acknowledge and reinforce achievement, and point students to supplementary resources and follow-ups

- Don’t use no double negatives

- Keep the language simple to ensure you are testing for conceptual understanding, not English comprehension

- Avoid ‘all of the above’ and ‘none of the above’

- Check if the longest option is correct – it usually is (but shouldn’t be)
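
If you keep your questions in a spreadsheet or text file, the longest-option check can be automated. This is a minimal sketch that treats length in characters as a simple proxy; the function name is our own, and it is run here on the three option sets from the driver knowledge test example, with the correct option listed first in each.

```python
def correct_is_longest(options: list[str], correct_index: int) -> bool:
    """True if the correct option is strictly longer (in characters) than every distractor."""
    correct_length = len(options[correct_index])
    return all(len(opt) < correct_length
               for i, opt in enumerate(options) if i != correct_index)


correct = "They are harder to see in traffic and do not have the same protection as drivers."
option_sets = {
    "set 1": [correct,
              "They are small and light and more likely to be picked up by alien tractor beams.",
              "They are targeted for collisions by car drivers as they are worth more points than other vehicles."],
    "set 2": [correct,
              "They are careless and do not obey road rules.",
              "They ride too fast and do not turn their lights on."],
    "set 3": [correct,
              "They are easily visible in traffic due to their bright helmets, which give them greater protection than other drivers.",
              "They are harder to see in traffic, but they have helmets that give them greater protection than other drivers."],
}

for name, options in option_sets.items():
    if correct_is_longest(options, correct_index=0):
        print(f"{name}: the correct option is the longest; consider revising")
```

Only set 2, the one used in the actual test, is flagged.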

Tell me more!

- Access a recording of the session.

- Download the slidedeck from the session.

- Dane’s highly recommended paper on evaluating the quality of individual questions.