Like many educators, unit coordinators Dr Carlos Vazquez and Dr Zeerim Cheung have been experimenting with strategies to help students deepen their critical thinking while embracing generative AI in their assessments.

To address this challenge in International Risk Management IBUS2103, Carlos and Zeerim are progressively redesigning an individual essay assignment in which students evaluate and critically reflect on their approach to an earlier group assignment. The group assignment asks students to assess a company’s current and future risks while recommending a risk management system. The individual essay that follows is an evaluation of what individual students might have done differently in the group analysis. This helps students reflect on their learning and make academic judgments about their own and others’ work (Carless & Boud, 2018). Carlos and Zeerim’s work is informing a Strategic Education Project at the University of Sydney Business School that focuses on reimagining written assessments in the age of generative AI.

Semester 1, 2024 – Iteration 1

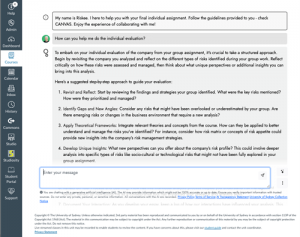

First, Carlos configured a Cogniti AI agent called ‘Riskee’ to help students develop their thoughts and arguments for their individual essay. In tandem, guidance for students on how to engage with generative AI was developed and facilitated in tutorials, alongside the Canvas site Using Generative AI at Sydney. Riskee answers questions about the essay very patiently!

Students could choose whether to complete the assignment with Riskee. Those who opted in to using Riskee had detailed criteria in the rubric for its use. Students were also required to submit all logs from their chats, as well as outline the type of collaboration they engaged in (e.g. generating ideas or offering different perspectives on a problem).

Unfortunately, very few students opted in to using Riskee in this first iteration. It was early days in the adoption of generative AI and some students may have been concerned about inadvertently breaching academic integrity. Others may have found the extra work required to support their arguments onerous. Others may have felt that using the University-provided AI agent could lead to surveillance from teachers.

Semester 2, 2024 – Iteration 2

After reflecting on this first iteration, Carlos and Zeerim were curious whether the extra work required of those who opted to use Riskee was a deterrent, or whether students were concerned about academic integrity issues with generative AI more broadly. After all, a recent survey of student perspectives on generative AI in higher education shows that 91% of students are worried about breaking university rules.

They decided to refocus the assignment, with the support of the Business Co-design team. It was assumed that generative AI was likely to be used by all students in one shape or another. Once again, guidance on generative AI was given during the lecture and extra material shared with tutors to help them answer potential questions from students. Students were encouraged to engage with Riskee and keep their conversation logs, but the logs were no longer a mandatory appendix to their essays.

The writing instructions and rubric were rewritten so that grading is based on students’ reflection on their research and writing process, with or without the use of AI tools, in a way that goes beyond decontextualised, generic responses. While AI can easily generate polished outputs, students need to be able to integrate and evaluate their own contribution and group work performance, as well as other texts and learning experiences (Bearman et al., 2024). After all, this kind of critical thinking would also be required in the workforce, whether supported by generative AI tools or not. The structured rubric and guidance for the assessment are designed to help students develop evaluative judgement (Tai et al., 2018).

Carlos and Zeerim have shared their assessment description and rubric for others; you can download a copy.

They found that removing the burden of reporting AI usage seemed to encourage more students to be transparent about their practices. For example, around half of the students reflected on how AI supported their analysis, and about a quarter explained why they chose to complete their assignments without it. Still, a significant portion of students didn’t comment on their AI use (or non-use), despite explicit instructions in the assignment guidelines and marking rubric.

Concerns about inadvertently breaching academic integrity rules persisted, with several students voicing unease throughout the semester. Those who did reflect on their AI use mostly described it as a tool for generating ideas. But some student work with AI was exceptional. One student, for example, applied the ISO 31000 Risk Management Process to their analysis, using AI to generate a Business Model Canvas, evaluate risks, and critically refine inputs across multiple steps. This iterative process, guided by human intervention, demonstrated a deep mastery of how AI can enhance a student’s critical analysis and assignment output.

Iteration 3?

For 2025, the International Business team will analyse the student responses to Riskee and the changed assessment and further innovate their approach. We can’t outrun generative AI, but we can continue to learn from our students and refine our assessment approaches.