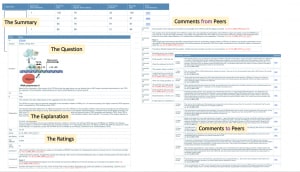

PeerWise is an award-winning online platform that helps students engage with unit concepts more deeply and critically. It provides a space where students collaboratively create, answer, discuss, and evaluate practice questions with their peers. The free tool was created by Paul Denny, a computing academic at the University of Auckland, and is currently used by over half a million students in 80 countries. Educational Innovation will soon make PeerWise generally available across the University’s Canvas sites, so here’s a sneak peek at how academics across Sydney have been using PeerWise since 2012 to engage students and enhance their learning outcomes.

This article was contributed by Samantha Clarke and Danny Liu.

The Chronicle recently wrote about US academics who asked their students to write multiple-choice questions for each other. But these weren’t run-of-the-mill MCQs: students were introduced to Bloom’s taxonomy, asked to write questions that integrated multiple concepts, and contributed their questions to a formative test bank. The results showed improved learning outcomes for students, particularly those in the middle performance bracket. These academics weren’t using PeerWise and so had to follow a manual workflow; PeerWise makes this process much more streamlined, efficient, and, dare we say, fun for academics and students. A growing body of literature provides evidence of its positive impact on student engagement and success, and here we highlight stories from some Sydney academics.

Gareth Denyer and Dale Hancock, School of Life and Environmental Sciences

Gareth first came across PeerWise at a colleague’s presentation in 2014. “I had always been keen on designing assignments that allowed students to contribute to their learning, rather than everyone spending time on the setting, completing and marking of ‘dead’ tasks. It struck me immediately that PeerWise would provide a rich seam of new ideas for questions, would give me extensive intelligence on student misconceptions and would tell me how my teaching was being received – all way before the end of semester exam.”

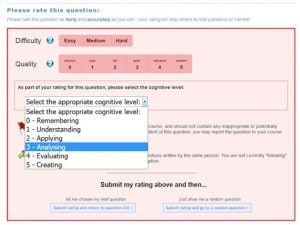

Gareth and Dale have used PeerWise in a number of ways, including allocating topics to students and asking them to write questions against Bloom’s taxonomy. Allocating only nominal assessment marks to the activity, they have shown that it improves student performance and understanding across the whole cohort: “not just for the naturally engaged student but, most tellingly, for those traditionally considered to be ‘at risk’. It forces interaction with course material during the semester and the regular short cycles of activity create a manageable but incremental momentum in participation”. Because students can write thousands of questions in a single semester, PeerWise not only gives Gareth a window into how they think and what they value, but also gives students a place to practise giving, receiving, and responding to peer feedback constructively.

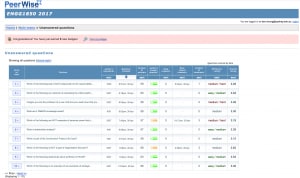

“On top of producing banks of questions which are FAR superior to anything I get from my colleagues, PeerWise delivers a wealth of metrics and statistics related to student engagement and performance.” Gareth likes to sit back and reflect on the “stupendous”, “viral-level” engagement that PeerWise enables.

Bryn Jeffries, School of Computer Science

Bryn started using PeerWise after inheriting a unit where many students were dissatisfied with the assessment questions available on the LMS; there were also creeping academic integrity issues because questions had been re-used over the years. But, like Gareth, one of his main concerns was being able to understand the unit from students’ perspectives: “I was conscious as we as educators master the topic we teach, we lose our ability to place ourselves in the position of a student approaching the topic for the first time. I wanted to include questions that helped overcome threshold concepts, and the best people to identify these are the students themselves. PeerWise addresses this by allowing students to contribute their own questions.”

He describes his journey with PeerWise, which began with a not-so-successful trial. His first attempt involved seeding PeerWise with some staff-generated questions and encouraging students to add questions by awarding assessment grades. But the way he calculated these grades (using students’ peer ratings and how many answers they completed) led to students gaming the system and an overflow of low-quality, duplicate questions.

Taking stock of this, he worked with Paul Denny to try something different. “To improve expectations of question submissions I introduced Bloom’s taxonomy of learning levels in the first lecture. Paul added a feature to our PeerWise site so that students could specify the intended learning level of the question, and peers could label the question with the level they believed it reached. At the end of the semester each student selected a portfolio of their best three questions for review by their tutor, and these were marked by the tutor based on the quality and learning level, rather than relying on peer scores.” This resulted in much higher-quality question submissions and more positive student feedback. Bryn reflects that PeerWise works best when students feel part of a community as they generate questions and engage with each other over them.

Miguel Iglesias and Alex Mullins, Sydney Medical School

“Good learning requires active engagement with the material, and teaching – or assessing – someone is the best way to learn.” Miguel, Alex and the medical program needed a way for students to engage asynchronously and meaningfully with their peers, and to develop a deeper understanding of a wide range of content. “By asking them to write MCQs, students need to know the topic of the question very well, particularly to write good distractors. PeerWise is a great tool to get to that higher level of learning. Secondly, for my students in particular, they are preparing for a tough exam by a medical board. The official questions are not released, so they never have enough material to practice. Getting all the students to write high-quality questions gives them more opportunities to assess their learning even after they completed my unit of study.”

Miguel and Alex report consistent and increasing improvements in student engagement with each cycle of use, with students who are familiar with PeerWise getting more out of it. “Students who engage lightly with PeerWise often see this as an interruption to their studies. It is only towards the end of semester, when they are preparing the final exam, or even when they have finished the course, when they realise the full power of it as a learning tool. The students who engage from the beginning and write great questions, interact with the peers’ comments and take time to comment on someone else’s questions are the ones who truly get the benefit of this activity, and one can see that their mastery of the topics is at a superior level.”

From their experience, they provide a number of practical suggestions for encouraging more meaningful student engagement:

- PeerWise is more valuable when teachers also engage in the platform, providing guidance and feedback to students

- If possible, use PeerWise consistently in a program of study (not just one unit) to grow familiarity

- Motivate students by using high-quality student questions in exams

- Consider enforcing referencing in question writing and critiquing – Alex suggests this so that “students cannot make unsubstantiated claims but instead must back everything up with references to current medical literature which is great for knowledge retention; this works really well in the medical field but could apply to many other subject areas”

- Require students to explain each distractor, e.g. ‘(A) is incorrect because [reason/evidence]’: “This steps the other students through their thought-process and helps them understand why they answered incorrectly. Students adapt quickly when given feedback on their question writing style and show distinct improvement in subsequent cycles.”

Louis Tarborda, School of Civil Engineering

Like the other academics here, Louis wanted to use PeerWise to gain insight into how students were thinking; building a question repository for future use was a bonus. His first attempt was instructive: he required students to contribute eight questions each but used PeerWise for only one assessment, and many students submitted large numbers of lower-order (recall, memorisation) questions. On reflection, Louis thinks students saw PeerWise as merely an activity rather than a learning opportunity. He is looking forward to adapting his PeerWise experiment with other cohorts, perhaps with better coordination and a multi-stage approach to assessment.

Ken Chung, School of Civil Engineering

After seeing PeerWise during the First Year Coordinator’s Program in 2017, Ken was immediately struck by how it might help his students participate more in his unit, and even give them a chance to write their own exam! “I implemented the idea right away as there was a mid-semester quiz coming up which was in multiple choice exam format. I asked students how they would feel if I were to allow them the chance to write their own multiple choice questions. If they were really good and challenging, they would make it to the list of 40 MCQs. In a sense, I was asking them to help me help them.” Ken was surprised by how readily students took it up: they were active in writing their own questions, providing answers and feedback, and engaging with other students to challenge and correct questions and answers. He ended up using five student-generated questions in the exam, and students appreciated the opportunity to contribute to the unit, leaving USS comments such as, “you were very positive and encouraging for us to participate – like through PeerWise”.

Tell me more!

- Paul Denny, the creator of PeerWise, is coming to Sydney on Monday 27 May. Gareth Denyer has organised a morning symposium to hear from academics around Sydney as well as Paul. Find out more and sign up today!

- Check out the official PeerWise documentation for instructors and students to see how easy it is to use.

- Read up on the ‘testing effect’ and how having students retrieve and apply information significantly enhances learning.

- We have an active, approved human research ethics ‘umbrella’ application which gives teacher-researchers at Sydney an opportunity to conduct educational research into the effects of PeerWise. Get in touch with Danny Liu to join the consortium.

1 Comment