As if having a good idea and implementing it in your teaching weren’t challenging enough, you will also often be asked how you can establish that your idea works. If your answer is “randomised controlled trial” (RCT), you are not wrong, but you have created a lot of work for yourself. Such a comparison between at least two groups (one that participates in the new form of teaching and one that serves as a control and either does ‘nothing’ or participates in the ‘old’ pedagogy) is difficult to conduct in universities. Not only are there ethical issues (you will need ethics clearance because you are treating your students differently), there are also many practical problems, such as students talking to each other across groups. While there are solutions to these challenges, and while RCTs are frequently conducted in education, they require substantial effort, take a long time to plan and set up, and may come with high costs. You may, therefore, choose not to go down this path, and you really shouldn’t if you cannot achieve truly randomised allocation of students to groups.

A more feasible and practical approach builds on the comparison between cohorts: if the course was taught the old way last time (semester, year) and in a new way this time, then we can compare the two cohorts, relying on marks and perhaps course evaluation data. While clearly feasible (and not requiring ethics clearance as long as you do this to improve teaching, not to publish papers), this approach has a number of problems. The crucial one is that, in the absence of randomisation, there may be many factors other than the teaching innovation that can account for the differences between the two cohorts. And while one can statistically “control” for those factors to some extent, this design will always be less conclusive than the RCT. The problems are compounded if we fail to choose appropriate outcome measures for gauging the impact of the intervention. In general, the more specific the information available from the last cohort (such as a score on a specific aspect of the course rather than the overall mark), the better; end-of-course marks are likely to be affected by many factors besides the intervention.

The third option, Single-Case Design (SCD), is both practical and valid. In SCDs, subjects act as their own control. SCD builds on the logic that an intervention should have predictable effects on one or more observable variables, which are often, though not necessarily, behavioural.

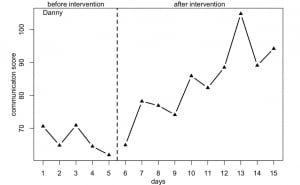

To the extent that a variable can be measured (observed) before the onset of an intervention and then during or after the intervention, it is possible to draw conclusions about the effectiveness of the intervention. The phase before the intervention is called the baseline, or A phase, while the post-intervention phase is called the B phase. Many forms of learning interventions can be studied through the lens of an A-B design as long as it is feasible to record at least 5 to 7 repeated observations/measurements in each phase. The observations are preferably behavioural but can also be ratings on a scale, quiz scores, etc. The Graduate Qualities, for instance, can all be described in terms of observable behaviour. Decisions regarding the extent of the change caused by the intervention can be made based on visual inspection of the A-B graph or on statistical tests. Because the data come from single cases, the observations are useful for students as well; they can directly see the extent of their learning over time.

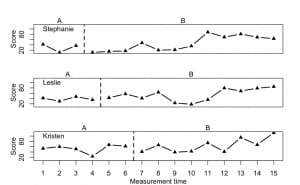
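
As a rough illustration of how an A-B comparison might be quantified alongside visual inspection, the sketch below computes the phase means and a simple non-overlap index (Non-overlap of All Pairs, NAP) for one student. The quiz scores are invented for illustration, and NAP is only one of several possible measures; the text above does not prescribe a particular statistic.

```python
from statistics import mean


def nap(baseline, intervention):
    """Non-overlap of All Pairs: the share of (A, B) observation pairs in
    which the B-phase value exceeds the A-phase value (ties count as half).
    0.5 means complete overlap; 1.0 means every B value beats every A value."""
    pairs = [(a, b) for a in baseline for b in intervention]
    score = sum(1.0 if b > a else 0.5 if b == a else 0.0 for a, b in pairs)
    return score / len(pairs)


# Hypothetical weekly quiz scores (out of 10) for a single student.
phase_a = [3, 4, 3, 5, 4, 4]  # baseline (A phase), before the intervention
phase_b = [5, 6, 7, 6, 8, 7]  # B phase, during/after the intervention

print(f"Mean A: {mean(phase_a):.2f}")
print(f"Mean B: {mean(phase_b):.2f}")
print(f"NAP:    {nap(phase_a, phase_b):.2f}")
```

An index like this is best read together with the A-B graph rather than instead of it, since it ignores trends within each phase.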

In university teaching, one usually aims to increase knowledge (skill, understanding) and to reduce the frequency of misconceptions and mistakes, and one aims for these effects to be stable, i.e. students do not revert to the baseline. The SCD that is appropriate in such cases is the multiple-baseline design, in which multiple students go through the same intervention one shortly after another. This design can increase the confidence that the effect is due to the intervention. And if the cases are randomly selected from a student cohort, then one can use inferential statistics to draw conclusions about the whole cohort based on a sample of students.
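
A minimal sketch of how multiple-baseline data might be organised is shown below. The student names, scores, and onset sessions are all hypothetical, and the per-student difference in phase means is only a simple descriptive summary, not a full analysis.

```python
from statistics import mean

# Hypothetical session-by-session scores for three students. Each student
# starts the intervention at a different session ("start" is the index of
# the first B-phase observation), which is what makes the baselines multiple.
students = {
    "student_1": {"scores": [2, 3, 2, 3, 2, 6, 7, 6, 7, 7, 8, 7, 8, 8, 8], "start": 5},
    "student_2": {"scores": [3, 2, 3, 3, 2, 3, 3, 6, 7, 7, 8, 7, 8, 8, 8], "start": 7},
    "student_3": {"scores": [2, 2, 3, 2, 3, 2, 3, 3, 2, 7, 6, 7, 8, 8, 8], "start": 9},
}


def phase_change(scores, start):
    """Difference between the B-phase mean and the A-phase mean."""
    baseline, intervention = scores[:start], scores[start:]
    return mean(intervention) - mean(baseline)


changes = {
    name: phase_change(data["scores"], data["start"])
    for name, data in students.items()
}

for name, change in changes.items():
    print(f"{name}: change in mean score = {change:.2f}")
```

If each student's scores shift only once their own intervention begins, while students still in baseline stay flat, an explanation other than the intervention (for example, something that happened in a particular week) becomes less plausible.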

In conclusion, Single-Case Designs are comparatively easy to apply, highly informative for designers, teachers, and students, and can be learned without requiring a degree in statistics.