By 2024, we faced a familiar challenge around assessment integrity: quiz answers and podcast assignments came back polished and well-produced, but we needed to see the critical thinking behind them. We wanted assurance that students could reason through problems, not just generate good outputs.

So we turned to live conversation, a format that values the thinking process itself and lets students demonstrate their understanding in real time.

Previous posts on this blog have explored Interactive Oral Assessments (IOAs) in both writing and French studies. Our experience adds a new dimension: implementing IOAs in a STEM context, a second-year microbiology and immunology unit, at a scale of over 400 students. Here’s what we learned.

Our IOA in brief

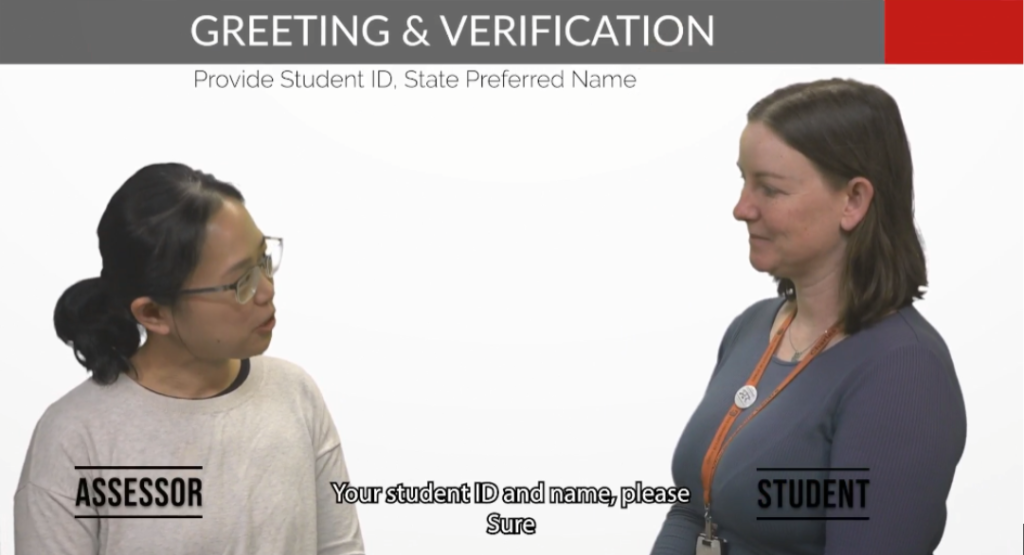

Our IOA is a structured 10-minute conversation where students explain concepts to an assessor. The conversation flow is structured but unscripted. The assessor works with each student to bring their best understanding out while also surfacing knowledge gaps and guiding reasoning further.

It’s less like a traditional oral exam, focused on recitation, and more like a professional dialogue: explaining a case to a colleague, reasoning through a problem together.

We wanted to see how students apply knowledge, explain their understanding, and arrive at conclusions. We wanted to see the process of thinking, not just final answers.

How we structured the IOA

For practical reasons (time and budget!), conversations needed clear structure and assessors needed confidence guiding the dialogue. We designed a progression from prepared material into genuinely unpredictable reasoning: students could prepare using pre-released scenarios, but follow-up questions and curveball challenges required them to think and apply knowledge on the spot.

The conversation moved through six segments revolving around a case study: (1) a microbiology segment assessing foundational knowledge; (2) a follow-up probe (“why do you think that?” or “what about X?”) revealing depth; (3) an immunology segment covering the second content area; (4) an adaptive follow-up testing how far the student’s reasoning extended; (5) a “curveball” scenario requiring on-the-spot application; and (6) a big-picture synthesis demonstrating integrated understanding in the context of the scenario. We observed that these higher-level questions really surfaced deep understanding.

To build confidence in IOA preparation, we pre-released the case scenarios and provided some high-focus topics for the IOA. These pre-released topics gave students a sense of direction and assurance while preparing, but because scenarios were allocated on the day and the questions adapted dynamically to each conversation, the IOA still required real-time thinking and discouraged scripted responses.

The rubric assessed five dimensions: microbiology understanding, immunology understanding, application and analysis, breadth and depth of reasoning (curveball and synthesis questions), and communication. Students received the rubric in advance for transparency.

Why this works for STEM

While quizzes test recognition, conversations reveal whether students can explain and apply ideas with genuine depth of understanding. The IOA functions as both assessment and learning experience. Students told us that talking through concepts helped them remember material even months later.

Graduates in microbiology, immunology, and health research need independent critical thinking and the ability to articulate their reasoning. This includes explaining protocols to team members, discussing results with colleagues, or troubleshooting unexpected findings with a research partner. IOAs develop these essential skills that written exams alone don’t fully capture.

We also encouraged AI use in preparation and developed a voice-enabled practice bot containing the same scenarios as the real IOA. This positioned AI as a study tool, while the IOA remained the secure assessment point where understanding was demonstrated live. This approach aligns with TEQSA’s 2023 guidance on authentic engagement with AI and assessment security.

Scaffolding: what made it work

We were critically aware of the importance of scaffolding, especially since oral assessment was relatively new at second-year level. Drawing on foundational work on structured learning support (Wood, Bruner, & Ross, 1976) and the principle of constructive alignment, which ensures our teaching, practice activities, and assessment all target the same outcomes (Biggs & Tang, 2011), we implemented layered support well before the assessment.

Below is a list of materials we developed to prepare students for the IOA:

- Clear written guidance explained timing, structure, and practical tips, e.g. pausing to think is acceptable and clarification can be requested. We included a detailed list of high-priority topics, with the disclaimer that all lecture content was assessable, and explicit reassurance that accents and grammar weren’t being graded. The rubric prioritised content and structure, not performance style.

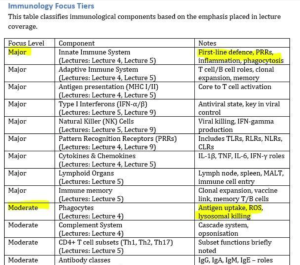

Figure 1: Study guide excerpt, a tiered list of concepts designed to guide students’ revision efforts.

- A mock IOA video showed conversational flow, strong and weaker responses, assessor prompts, and strategies for handling curveballs calmly. This made expectations concrete and taught us how much realistically fits in 10 minutes!

- Peer practice workshops used tutorial time for structured practice. Students rotated through roles as student, assessor, and observer using sample scenarios and the real rubric. This normalised thinking aloud and helped students learn from observing others.

- Assessor practice sessions: in the final tutorials, we made time for students to practise with their future assessors, which also gave us a helpful performance baseline.

- A voice-enabled AI practice bot on the university’s secure platform (Cogniti) simulated the assessor role. Students practised explaining concepts aloud, received follow-up prompts, and got rubric-aligned guidance. The Cogniti bot was explicitly optional: students had complete autonomy to use it or not. Many students reported that it helped them identify gaps in their content understanding and get a realistic feel for an oral assessment.

Scaling to 400+ students

This is what we get asked MOST: How do you scale? Is it costly?

First and foremost, organisation helps enormously! Second, consider that marking a 3,000-word report typically takes 15+ minutes. A 10-minute conversation is roughly equivalent to a 2,000-3,000-word essay, and we found we preferred the engaging dialogue. This isn’t to say IOAs are better than reports; they serve different purposes. For an experienced assessment team, though, the time investment can be quite comparable.
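As a rough back-of-envelope check on that comparison, the sketch below multiplies out the figures quoted in this post: 400 students (a lower bound, since the cohort was over 400), 10 minutes per conversation, and 15 minutes per report (also a lower bound). It counts only contact or marking time; changeover between students, assessor training, and moderation are not included, so treat the totals as indicative rather than as our actual budget.

```python
def total_hours(students: int, minutes_each: float) -> float:
    """Total assessor time in hours for one pass over the cohort."""
    return students * minutes_each / 60


STUDENTS = 400        # lower bound: the cohort was "over 400"
IOA_MINUTES = 10      # conversation length quoted in this post
REPORT_MINUTES = 15   # lower bound: "15+ minutes" per 3,000-word report

ioa_hours = total_hours(STUDENTS, IOA_MINUTES)
report_hours = total_hours(STUDENTS, REPORT_MINUTES)

print(f"IOA conversations: {ioa_hours:.1f} h")     # about 66.7 h
print(f"Report marking:    {report_hours:.1f} h")  # 100.0 h
```

On these assumptions, the live conversations take no more assessor time than marking the equivalent reports would, which leaves some room for the extra overheads that an IOA brings.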

Key factors to consider:

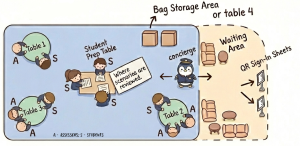

- The venue setup: We used one large room with 3-4 conversation stations spaced to prevent overhearing. A central concierge managed student flow and administrative issues. Sessions ran 4-5 hours daily across 7 days over 2 weeks. All sessions were recorded for moderation.

- Assessor training and calibration: Assessors included academic staff, PhD students, and postdoctoral fellows. Calibration involved rubric walkthroughs, joint review of sample recordings, live practice IOAs, and daily debriefs. We ran mid-assessment calibration and drift analysis (are we becoming stricter or more lenient as we mark?) to ensure consistent marking.

Figure 3. Venue set up for a centralised assessment workflow, managed by a concierge for 3-4 conversations at once (click image to expand). - Live marking: Assessors marked in real time using SRES with colour-coded keyword shortcuts, reducing cognitive load. Separate comment boxes captured internal observations and external student feedback for quality assurance.

- Moderation: We used a custom R and Quarto workflow to examine score distributions, identify outliers, and review flagged cases. We’re happy to share this workflow upon request.

- Environment matters: Quiet, appropriately sized rooms are essential! We ran into an issue where one space was too small, and the conversations distracted both students and assessors.
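To make the drift question above concrete, here is a minimal Python sketch of one simple way to check it. This is not our R and Quarto workflow; it is an illustrative stand-in. The record layout, the function names `drift_by_assessor` and `flag_drift`, the 2-mark threshold, and the toy scores are all hypothetical and were invented only to exercise the code. The idea is to compare each assessor’s mean score in the later half of their conversations with their mean in the earlier half.

```python
from statistics import mean

# Hypothetical records: (assessor, conversation order, total rubric score).
# Invented toy numbers for illustration only; not results from the unit.
TOY_MARKS = [
    ("A", 1, 18), ("A", 2, 19), ("A", 3, 18), ("A", 4, 19),
    ("B", 1, 20), ("B", 2, 19), ("B", 3, 16), ("B", 4, 15),
]


def drift_by_assessor(marks):
    """Return {assessor: late-half mean minus early-half mean}.

    Negative values suggest an assessor became stricter over time,
    positive values suggest they became more lenient.
    """
    by_assessor = {}
    for assessor, order, score in marks:
        by_assessor.setdefault(assessor, []).append((order, score))

    drift = {}
    for assessor, rows in by_assessor.items():
        rows.sort()  # chronological order within each assessor
        scores = [score for _, score in rows]
        half = len(scores) // 2
        if half == 0:
            continue  # too few conversations to compare halves
        drift[assessor] = mean(scores[-half:]) - mean(scores[:half])
    return drift


def flag_drift(marks, threshold=2.0):
    """List assessors whose absolute drift meets a (hypothetical) threshold."""
    return sorted(a for a, d in drift_by_assessor(marks).items()
                  if abs(d) >= threshold)


print(drift_by_assessor(TOY_MARKS))
print(flag_drift(TOY_MARKS))
```

A flag from a check like this is only a prompt to review recordings and recalibrate. A shift in scores can also reflect genuine differences between the students seen early and late, so it should not trigger automatic adjustment of marks.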

What we learned from students and assessors

In informal feedback, many students described the IOA format as pushing them to study differently and more thoroughly than they would have for traditional assessments. We received emails from students thanking us for an assessment that made them genuinely learn the material rather than just memorise it. Several told us that talking through concepts helped ideas stick, and that they could still recall the reasoning months later because explaining it aloud had made it real. Others found it stressful, which reminds us how essential the scaffolding efforts were. Students also questioned whether ten minutes could be fair, but in practice we found that IOA performance tracked closely with performance on the secured final exam.

Our teaching team found the experience equally valuable. Assessors noted that the format was highly engaging and allowed them to probe the depth of understanding in ways that brought out each student’s strengths or gaps in knowledge (Nic Gracie, Dr Lauren Stern, Dr Iris Cheng). The adaptable questioning mirrored real problem-solving scenarios in microbiology and diagnostic settings. Several assessors remarked on the joy of watching concepts click in real time, especially when nervous students relaxed into genuine discussion about the content (Mirei Okada, Dr Rachel Pinto). Being familiar with the rubric beforehand meant assessors could focus entirely on listening, and students responded better when they felt truly heard.

Would we recommend IOAs? Yes, with lessons learned

First-time implementation was not easy. It demanded significant preparation, training, and infrastructure, and it requires thoughtful accommodations for students who find oral assessment particularly challenging.

Based on feedback, we will be running things slightly differently next year. Some adjustments we are considering are: allowing brief note-making before speaking to reduce anxiety; prioritising large, quiet rooms (small spaces created distracting acoustics); using consistent opening scripts to prevent mix-ups and confusion; and front-loading practice opportunities with assessors rather than concentrating them right before the assessment.

Despite the initial hard work required, this has been an incredibly fulfilling experience for us as educators. We gained a genuine window into students’ thinking, reasoning, and meaningful application of knowledge, and this gave us confident assurance of their learning. For us, a 10-minute conversation turned out to be one of the best ways to see that in action.

We’re happy to share scenario banks, rubrics, SRES configurations, and moderation workflows.